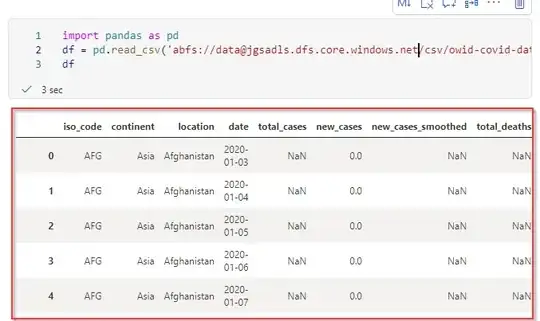

I created a datastore in Azure ML Studio that connects to an ADLS Gen2 storage account. How can I read data from this datastore in my notebooks in Azure ML Studio? This is what I tried, but I am not sure what the syntax should be. Thanks.

import pandas as pd

path = "azureml://datastores/[data_store_name]/paths/[path_name]/file.csv"

pd.read_csv(path)
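For reference, here is a minimal sketch of how the long-form datastore URI is usually assembled, with the subscription, resource group, and workspace in front of the datastore name. The helper names `build_datastore_uri` and `read_csv_from_datastore` are hypothetical, and the arguments are placeholders to be replaced with real values. Reading the result with pandas assumes an fsspec backend that understands `azureml://` is installed in the kernel.

```python
def build_datastore_uri(subscription, resource_group, workspace, datastore, path):
    """Assemble a long-form azureml:// datastore URI from its components."""
    return (
        f"azureml://subscriptions/{subscription}"
        f"/resourcegroups/{resource_group}"
        f"/workspaces/{workspace}"
        f"/datastores/{datastore}"
        f"/paths/{path.lstrip('/')}"  # avoid a double slash after "paths"
    )


def read_csv_from_datastore(uri):
    """Read a CSV via pandas; assumes an fsspec backend for azureml:// is installed."""
    import pandas as pd  # imported lazily so the URI helper works without pandas

    return pd.read_csv(uri)  # pass the variable itself, not a quoted name
```

With real values filled in, `read_csv_from_datastore(build_datastore_uri(...))` should return a DataFrame, provided the notebook identity has access to the storage account.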

Edit:

After adding the subscription, resource group, and workspace to the path, I now get this error:

----> 9 df = pd.read_csv(path)

File /anaconda/envs/azureml_py38/lib/python3.8/site-packages/pandas/io/parsers.py:688, in read_csv(filepath_or_buffer, sep, delimiter, header, names, index_col, usecols, squeeze, prefix, mangle_dupe_cols, dtype, engine, converters, true_values, false_values, skipinitialspace, skiprows, skipfooter, nrows, na_values, keep_default_na, na_filter, verbose, skip_blank_lines, parse_dates, infer_datetime_format, keep_date_col, date_parser, dayfirst, cache_dates, iterator, chunksize, compression, thousands, decimal, lineterminator, quotechar, quoting, doublequote, escapechar, comment, encoding, dialect, error_bad_lines, warn_bad_lines, delim_whitespace, low_memory, memory_map, float_precision)

635 engine_specified = False

637 kwds.update(

638 delimiter=delimiter,

639 engine=engine,

(...)

685 skip_blank_lines=skip_blank_lines,

686 )

--> 688 return _read(filepath_or_buffer, kwds)

File /anaconda/envs/azureml_py38/lib/python3.8/site-packages/pandas/io/parsers.py:436, in _read(filepath_or_buffer, kwds)

430 compression = infer_compression(filepath_or_buffer, compression)

432 # TODO: get_filepath_or_buffer could return

433 # Union[FilePathOrBuffer, s3fs.S3File, gcsfs.GCSFile]

434 # though mypy handling of conditional imports is difficult.

435 # See https://github.com/python/mypy/issues/1297

--> 436 fp_or_buf, _, compression, should_close = get_filepath_or_buffer(

437 filepath_or_buffer, encoding, compression

438 )

439 kwds["compression"] = compression

441 if kwds.get("date_parser", None) is not None:

File /anaconda/envs/azureml_py38/lib/python3.8/site-packages/pandas/io/common.py:221, in get_filepath_or_buffer(filepath_or_buffer, encoding, compression, mode, storage_options)

218 pass

220 try:

--> 221 file_obj = fsspec.open(

222 filepath_or_buffer, mode=mode or "rb", **(storage_options or {})

223 ).open()

224 # GH 34626 Reads from Public Buckets without Credentials needs anon=True

225 except tuple(err_types_to_retry_with_anon):

File /anaconda/envs/azureml_py38/lib/python3.8/site-packages/fsspec/core.py:419, in open(urlpath, mode, compression, encoding, errors, protocol, newline, **kwargs)

369 def open(

370 urlpath,

371 mode="rb",

(...)

377 **kwargs,

378 ):

379 """Given a path or paths, return one ``OpenFile`` object.

380

381 Parameters

(...)

417 ``OpenFile`` object.

418 """

--> 419 return open_files(

420 urlpath=[urlpath],

421 mode=mode,

422 compression=compression,

423 encoding=encoding,

424 errors=errors,

425 protocol=protocol,

426 newline=newline,

427 expand=False,

428 **kwargs,

429 )[0]

File /anaconda/envs/azureml_py38/lib/python3.8/site-packages/fsspec/core.py:272, in open_files(urlpath, mode, compression, encoding, errors, name_function, num, protocol, newline, auto_mkdir, expand, **kwargs)

203 def open_files(

204 urlpath,

205 mode="rb",

(...)

215 **kwargs,

216 ):

217 """Given a path or paths, return a list of ``OpenFile`` objects.

218

219 For writing, a str path must contain the "*" character, which will be filled

(...)

270 be used as a single context

271 """

--> 272 fs, fs_token, paths = get_fs_token_paths(

273 urlpath,

274 mode,

275 num=num,

276 name_function=name_function,

277 storage_options=kwargs,

278 protocol=protocol,

279 expand=expand,

280 )

281 if "r" not in mode and auto_mkdir:

282 parents = {fs._parent(path) for path in paths}

File /anaconda/envs/azureml_py38/lib/python3.8/site-packages/fsspec/core.py:574, in get_fs_token_paths(urlpath, mode, num, name_function, storage_options, protocol, expand)

572 if protocol:

573 storage_options["protocol"] = protocol

--> 574 chain = _un_chain(urlpath0, storage_options or {})

575 inkwargs = {}

576 # Reverse iterate the chain, creating a nested target_* structure

File /anaconda/envs/azureml_py38/lib/python3.8/site-packages/fsspec/core.py:315, in _un_chain(path, kwargs)

313 for bit in reversed(bits):

314 protocol = kwargs.pop("protocol", None) or split_protocol(bit)[0] or "file"

--> 315 cls = get_filesystem_class(protocol)

316 extra_kwargs = cls._get_kwargs_from_urls(bit)

317 kws = kwargs.pop(protocol, {})

File /anaconda/envs/azureml_py38/lib/python3.8/site-packages/fsspec/registry.py:208, in get_filesystem_class(protocol)

206 if protocol not in registry:

207 if protocol not in known_implementations:

--> 208 raise ValueError("Protocol not known: %s" % protocol)

209 bit = known_implementations[protocol]

210 try:

ValueError: Protocol not known: azureml
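The final `ValueError` is raised by fsspec's registry lookup, which suggests that no filesystem implementation is registered for the `azureml` scheme in this environment. A minimal sketch for checking that from the notebook is below; `protocol_available` is a hypothetical helper name. To my understanding the `azureml-fsspec` package is what registers this protocol, but treat that as an assumption to verify against the Azure ML documentation for your environment.

```python
def protocol_available(protocol):
    """Return True if fsspec can resolve a filesystem class for `protocol`."""
    try:
        import fsspec
    except ImportError:
        return False  # fsspec itself is not installed
    try:
        fsspec.get_filesystem_class(protocol)
    except (ValueError, ImportError):
        # ValueError: the protocol is unknown to fsspec.
        # ImportError: the protocol is known but its backend package is missing.
        return False
    return True


if __name__ == "__main__":
    print("azureml protocol available:", protocol_available("azureml"))
```

If this reports that the protocol is unavailable, installing the backend into the same environment the kernel uses (here `azureml_py38`) and restarting the kernel would be the next thing to try.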