I'm a beginner with Airflow and Spark, and I am currently setting up a local data pipeline with them. The DAG I want to build has a single task that runs a PySpark job on Spark.

The dags folder of my application contains two files:

`dag-airflow-spark-submitop.py`:

```python
import airflow

from datetime import datetime, timedelta

# Airflow 2 provider import path (the old airflow.contrib path is deprecated)
from airflow.providers.apache.spark.operators.spark_submit import SparkSubmitOperator

default_args = {
    'owner': 'moi',
    'depends_on_past': True,
    'retries': 5,
    'retry_delay': timedelta(minutes=30),
    'start_date': datetime(year=2023, month=7, day=9),
}

with airflow.DAG('dag_teste_spark_connection', default_args=default_args, schedule_interval='0 1 * * *') as dag:
    # Single task: submit sparkjob.py through the Airflow connection named 'spark'
    task_elt_documento_pagar = SparkSubmitOperator(
        task_id='task_run_pysparkjob',
        conn_id='spark',
        application="./dags/sparkjob.py",
    )
```
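The `conn_id='spark'` argument is what ends up as the `--master` option of spark-submit. My assumption (not copied from the provider's source) is that the connection's Host and Port fields are joined with a colon when a port is set. A minimal sketch of that assumption, using a hypothetical `resolve_master` helper:

```python
from typing import Optional


def resolve_master(host: str, port: Optional[int]) -> str:
    """Hypothetical helper: join a connection's Host and Port fields into a --master value.

    Assumes the two fields are concatenated with ':' when a port is set.
    """
    if port:
        return f"{host}:{port}"
    return host


# Host field already ending in ":7077" plus Port 7077: the port appears twice
print(resolve_master("spark://MacBook-Pro-de-AdminSensei-9.local:7077", 7077))
# → spark://MacBook-Pro-de-AdminSensei-9.local:7077:7077

# Host field without the port: a single ":7077"
print(resolve_master("spark://MacBook-Pro-de-AdminSensei-9.local", 7077))
# → spark://MacBook-Pro-de-AdminSensei-9.local:7077
```

If that assumption holds, the Host field should not repeat the port that is already in the Port field.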

`sparkjob.py`:

```python
from pyspark.sql import SparkSession

spark = SparkSession.builder \
    .appName("test") \
    .getOrCreate()

data = [("Java", "20000"), ("Python", "100000"), ("Scala", "3000")]

df = spark.createDataFrame(data)

df.show()
```
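To check whether `sparkjob.py` runs at all with Airflow out of the way, here is a minimal sketch that calls spark-submit directly. It assumes `spark-submit` is on PATH and Java is installed on the machine running it; `build_spark_submit_cmd` and `run_directly` are hypothetical helper names, and the `arrow-spark` job name only mirrors the one in my task log.

```python
import subprocess
from typing import List


def build_spark_submit_cmd(master: str, application: str, name: str = "arrow-spark") -> List[str]:
    """Hypothetical helper: build a spark-submit call shaped like the one in my task log."""
    return ["spark-submit", "--master", master, "--name", name, application]


def run_directly(master: str, application: str) -> int:
    """Submit the job outside Airflow and return spark-submit's exit code.

    Assumes spark-submit is on PATH; subprocess raises FileNotFoundError if it is not.
    """
    cmd = build_spark_submit_cmd(master, application)
    print("Running:", " ".join(cmd))
    return subprocess.run(cmd).returncode
```

For example, `run_directly("spark://MacBook-Pro-de-AdminSensei-9.local:7077", "dags/sparkjob.py")` from the application folder; an exit code of 0 together with the `df.show()` table would suggest the job itself is fine and the problem is on the Airflow side.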

So the first step is to start my Spark master and worker, which I can see at localhost:8080:

In the Airflow UI, I can see my DAG `dag_teste_spark_connection`.

And here is my Spark connection:

The problem is that when I run the DAG, the Airflow UI shows a success, but I can't see the job in the Spark UI:

As you can see in this screenshot, there are no Completed Applications:

Here are the details of my task after clicking the toggle button:

And here are the task logs:

*** Found local files:

*** * /opt/airflow/logs/dag_id=dag_teste_spark_connection/run_id=manual__2023-07-09T14:53:27.424066+00:00/task_id=task_run_pysparkjob/attempt=1.log

[2023-07-09, 14:53:37 UTC] {taskinstance.py:1103} INFO - Dependencies all met for dep_context=non-requeueable deps ti=<TaskInstance: dag_teste_spark_connection.task_run_pysparkjob manual__2023-07-09T14:53:27.424066+00:00 [queued]>

[2023-07-09, 14:53:37 UTC] {taskinstance.py:1103} INFO - Dependencies all met for dep_context=requeueable deps ti=<TaskInstance: dag_teste_spark_connection.task_run_pysparkjob manual__2023-07-09T14:53:27.424066+00:00 [queued]>

[2023-07-09, 14:53:37 UTC] {taskinstance.py:1308} INFO - Starting attempt 1 of 6

[2023-07-09, 14:53:37 UTC] {taskinstance.py:1327} INFO - Executing <Task(SparkSubmitOperator): task_run_pysparkjob> on 2023-07-09 14:53:27.424066+00:00

[2023-07-09, 14:53:37 UTC] {standard_task_runner.py:57} INFO - Started process 278 to run task

[2023-07-09, 14:53:37 UTC] {standard_task_runner.py:84} INFO - Running: ['***', 'tasks', 'run', 'dag_teste_spark_connection', 'task_run_pysparkjob', 'manual__2023-07-09T14:53:27.424066+00:00', '--job-id', '98', '--raw', '--subdir', 'DAGS_FOLDER/dag-***-spark-submitop.py', '--cfg-path', '/tmp/tmpuzlf2_yf']

[2023-07-09, 14:53:37 UTC] {standard_task_runner.py:85} INFO - Job 98: Subtask task_run_pysparkjob

[2023-07-09, 14:53:37 UTC] {task_command.py:410} INFO - Running <TaskInstance: dag_teste_spark_connection.task_run_pysparkjob manual__2023-07-09T14:53:27.424066+00:00 [running]> on host ef29c1050b71

[2023-07-09, 14:53:37 UTC] {taskinstance.py:1547} INFO - Exporting env vars: AIRFLOW_CTX_DAG_OWNER='moi' AIRFLOW_CTX_DAG_ID='dag_teste_spark_connection' AIRFLOW_CTX_TASK_ID='task_run_pysparkjob' AIRFLOW_CTX_EXECUTION_DATE='2023-07-09T14:53:27.424066+00:00' AIRFLOW_CTX_TRY_NUMBER='1' AIRFLOW_CTX_DAG_RUN_ID='manual__2023-07-09T14:53:27.424066+00:00'

[2023-07-09, 14:53:37 UTC] {base.py:73} INFO - Using connection ID 'spark' for task execution.

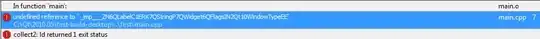

[2023-07-09, 14:53:37 UTC] {spark_submit.py:339} INFO - Spark-Submit cmd: spark-submit --master spark://MacBook-Pro-de-AdminSensei-9.local:7077:7077 --name arrow-spark ./dags/sparkjob.py

[2023-07-09, 14:53:38 UTC] {spark_submit.py:490} INFO - /home/***/.local/lib/python3.7/site-packages/pyspark/bin/load-spark-env.sh: line 68: ps: command not found

[2023-07-09, 14:53:38 UTC] {spark_submit.py:490} INFO - JAVA_HOME is not set

[2023-07-09, 14:53:38 UTC] {taskinstance.py:1824} ERROR - Task failed with exception

Traceback (most recent call last):

File "/home/airflow/.local/lib/python3.7/site-packages/airflow/providers/apache/spark/operators/spark_submit.py", line 157, in execute

self._hook.submit(self._application)

File "/home/airflow/.local/lib/python3.7/site-packages/airflow/providers/apache/spark/hooks/spark_submit.py", line 422, in submit

f"Cannot execute: {self._mask_cmd(spark_submit_cmd)}. Error code is: {returncode}."

airflow.exceptions.AirflowException: Cannot execute: spark-submit --master spark://MacBook-Pro-de-AdminSensei-9.local:7077:7077 --name arrow-spark ./dags/sparkjob.py. Error code is: 1.

[2023-07-09, 14:53:38 UTC] {taskinstance.py:1350} INFO - Marking task as UP_FOR_RETRY. dag_id=dag_teste_spark_connection, task_id=task_run_pysparkjob, execution_date=20230709T145327, start_date=20230709T145337, end_date=20230709T145338

[2023-07-09, 14:53:38 UTC] {standard_task_runner.py:109} ERROR - Failed to execute job 98 for task task_run_pysparkjob (Cannot execute: spark-submit --master spark://MacBook-Pro-de-AdminSensei-9.local:7077:7077 --name arrow-spark ./dags/sparkjob.py. Error code is: 1.; 278)

[2023-07-09, 14:53:38 UTC] {local_task_job_runner.py:225} INFO - Task exited with return code 1

[2023-07-09, 14:53:38 UTC] {taskinstance.py:2651} INFO - 0 downstream tasks scheduled from follow-on schedule check
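Three things in this log look wrong before spark-submit exits with code 1: `ps: command not found`, `JAVA_HOME is not set`, and a master URL ending in `:7077:7077`. Below is a minimal sketch of a pre-flight check that could be run inside the Airflow container to see which of them still apply. `preflight` and `MASTER_URL` are my own hypothetical names, and the pattern assumes a plain `spark://host:port` master with a single port.

```python
import os
import re
import shutil
from typing import List, Mapping, Optional

# Assumed shape of a standalone master URL: exactly one host and one numeric port
MASTER_URL = re.compile(r"spark://[^:/\s]+:\d+")


def preflight(master_url: str, env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Hypothetical helper: list the problems from the task log that are still present."""
    env = os.environ if env is None else env
    problems = []
    # spark-submit printed "JAVA_HOME is not set"
    if not env.get("JAVA_HOME"):
        problems.append("JAVA_HOME is not set")
    # load-spark-env.sh printed "ps: command not found"; also look for java itself
    path = env.get("PATH", "")
    for tool in ("java", "ps"):
        if shutil.which(tool, path=path) is None:
            problems.append(f"{tool} not found on PATH")
    # The log shows the port repeated: ...local:7077:7077
    if not MASTER_URL.fullmatch(master_url):
        problems.append(f"malformed master URL: {master_url}")
    return problems
```

For example, `print(preflight("spark://MacBook-Pro-de-AdminSensei-9.local:7077:7077"))` run in the container would list every check that fails there.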

Can you please help me?