I am trying to get the locations of the answer options in some images of questions using OpenCV's `matchTemplate`. I tried OCR with bounding boxes, but it takes nearly 10 seconds to compute, so I decided to try `matchTemplate` instead. It is a lot faster, but not very accurate.

Here is my code and the images I'm working with:

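```javascript
const cv = require('@u4/opencv4nodejs');
const fs = require('fs');

// Rectangle colour (white)
const color = new cv.Vec3(255, 255, 255);

async function opencvGetPositions(imageData, path, answers) {
  // cv.imdecode returns a BGR image, so convert with COLOR_BGR2GRAY
  const mat = cv.imdecode(imageData);
  let modifiedMat = mat.cvtColor(cv.COLOR_BGR2GRAY);

  // Binarize with Otsu's threshold, then invert so the text is white on black
  modifiedMat = modifiedMat.threshold(0, 255, cv.THRESH_BINARY | cv.THRESH_OTSU);
  modifiedMat = modifiedMat.bitwiseNot();

  // drawRectangle modifies the Mat in place, so draw on a copy to keep the
  // rectangles out of the image that later templates are matched against
  const output = modifiedMat.copy();

  // answers is an array of cv.Mat converted to grayscale
  for (let i = 0; i < answers.length; i++) {
    const ww = answers[i].sizes[1]; // template width (cols)
    const hh = answers[i].sizes[0]; // template height (rows)

    // With TM_SQDIFF the best match is at the *minimum* of the result map
    const matched = modifiedMat.matchTemplate(answers[i], cv.TM_SQDIFF);
    const loc = matched.minMaxLoc().minLoc;

    const pt1 = new cv.Point(loc.x, loc.y);
    const pt2 = new cv.Point(loc.x + ww, loc.y + hh);
    output.drawRectangle(pt1, pt2, color, 2);
  }

  cv.imwrite(path + '/output.png', output);
}
```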
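To make concrete what the loop relies on, here is a pure-JavaScript sketch (no OpenCV required) of the scores `matchTemplate` computes for `cv.TM_SQDIFF` and, for comparison, `cv.TM_CCOEFF_NORMED`, written from the formulas in the OpenCV template-matching documentation. It is an illustration under stated assumptions, not OpenCV's implementation: the helper names (`scoreMap`, `sqdiff`, `ccoeffNormed`, `best`) and the tiny test image are hypothetical, and the zero-variance case is simply scored 0 here. It shows that taking `minLoc` of a TM_SQDIFF map always yields a position, even when the template does not occur in the image, whereas the normalized score stays in [-1, 1].

```javascript
// Pure-JS sketch of two matchTemplate scoring methods (hypothetical helpers).
// Images are arrays of rows of grayscale values. The score map has
// (H - h + 1) rows and (W - w + 1) columns: one score per template position.
function scoreMap(image, templ, score) {
  const h = templ.length;
  const w = templ[0].length;
  const tFlat = templ.flat(); // template as a row-major array
  const result = [];
  for (let y = 0; y + h <= image.length; y++) {
    const row = [];
    for (let x = 0; x + w <= image[0].length; x++) {
      // Extract the h x w patch under the template, row-major
      const patch = [];
      for (let j = 0; j < h; j++) {
        for (let i = 0; i < w; i++) patch.push(image[y + j][x + i]);
      }
      row.push(score(patch, tFlat));
    }
    result.push(row);
  }
  return result;
}

// TM_SQDIFF: sum of squared differences; lower is better, 0 is a perfect match.
function sqdiff(patch, t) {
  let sum = 0;
  for (let k = 0; k < t.length; k++) sum += (patch[k] - t[k]) ** 2;
  return sum;
}

// TM_CCOEFF_NORMED: correlation of the mean-subtracted patch and template,
// normalized to [-1, 1]; higher is better. A zero-variance patch or template
// is scored 0 here (assumption of this sketch; OpenCV may handle it differently).
function ccoeffNormed(patch, t) {
  const mean = (a) => a.reduce((s, v) => s + v, 0) / a.length;
  const pm = mean(patch);
  const tm = mean(t);
  let num = 0, pVar = 0, tVar = 0;
  for (let k = 0; k < t.length; k++) {
    const p = patch[k] - pm;
    const q = t[k] - tm;
    num += p * q;
    pVar += p * p;
    tVar += q * q;
  }
  const denom = Math.sqrt(pVar * tVar);
  return denom === 0 ? 0 : num / denom;
}

// Rough equivalent of minMaxLoc: best {x, y, value} in a score map.
function best(result, lowerIsBetter) {
  let out = { x: 0, y: 0, value: result[0][0] };
  result.forEach((row, y) => row.forEach((value, x) => {
    if (lowerIsBetter ? value < out.value : value > out.value) out = { x, y, value };
  }));
  return out;
}

const image = [
  [0, 0, 0, 0, 0],
  [0, 10, 200, 0, 0],
  [0, 200, 10, 0, 0],
  [0, 0, 0, 0, 0],
];
const exact = [[10, 200], [200, 10]];    // occurs at x = 1, y = 1
const brighter = [[40, 230], [230, 40]]; // same pattern, every pixel +30
const absent = [[200, 10], [10, 200]];   // pattern that does not occur

for (const [name, templ] of Object.entries({ exact, brighter, absent })) {
  const sq = best(scoreMap(image, templ, sqdiff), true);
  const cc = best(scoreMap(image, templ, ccoeffNormed), false);
  console.log(name, 'SQDIFF', sq, 'CCOEFF_NORMED', cc);
}
```

With this toy data, `absent` still gets a TM_SQDIFF location (with a nonzero cost), but its best normalized score is only about 0.58 instead of 1, so a threshold on the normalized score could be used to reject it. The `brighter` template keeps a normalized score of 1 at the correct position, while its TM_SQDIFF cost rises from 0 to 3600.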

I'm using Node.js and the `@u4/opencv4nodejs` package.

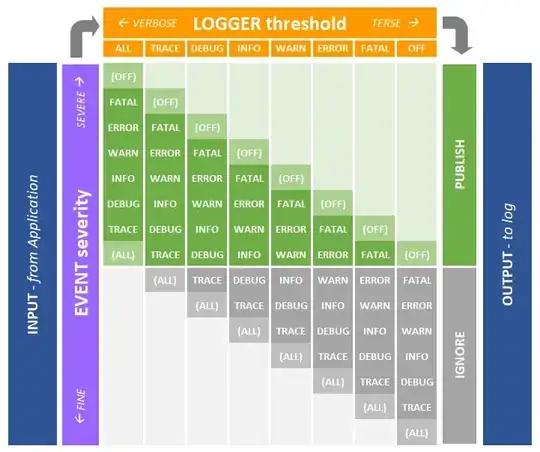

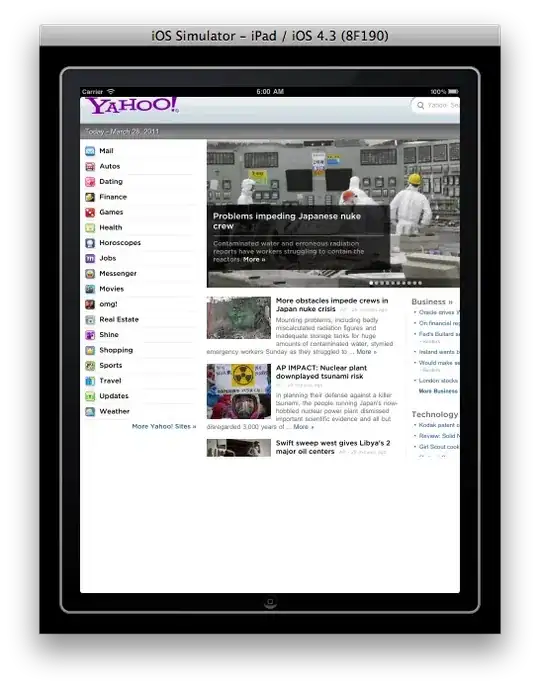

The `answers` array consists of these images:

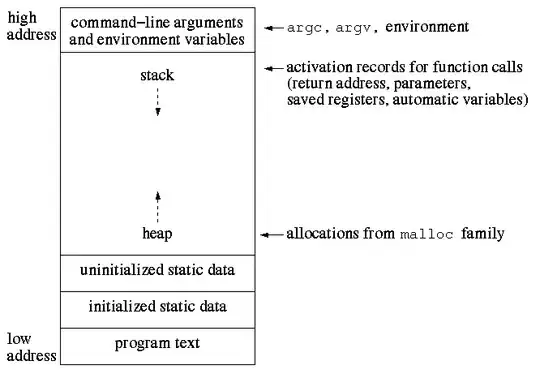

Applied to the image below, the result is mostly accurate, probably because I cropped the options from this one. I guess the options differ slightly from question to question, so maybe that causes the inaccuracy.

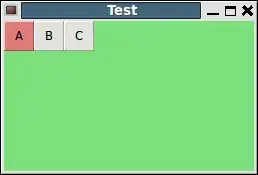

But most of the images are very inaccurate like this one.

So is there a better way to do this or someway to make the matchTemplate function more accurate?

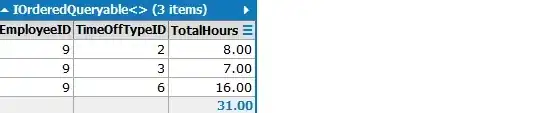

And here are the images without any modifications: