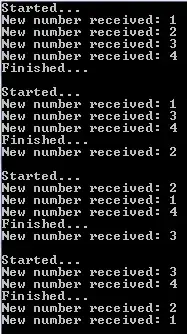

I am ingesting data from the RAW layer (ADLS Gen2) into the Bronze layer with Databricks Auto Loader. This is batch data, not real-time data: new files arrive in the raw path every day via ADF. For one of the datasets I am doing a historical load (2014-2023) as the initial load. After that the loads are incremental, and the load type in both Bronze and Silver is append.

While ingesting into Bronze, I add an extra column, "lh_load_date", to the DataFrame. It holds the load date and time, so it shows when the data was loaded into the lakehouse. Because of the data volume (around 1,176 million records), the data is ingested into Bronze in multiple batches, and as a result "lh_load_date" contains different times. I expected it to be the same across all records of the initial load. Below is an example of how I am writing to Bronze.

And this is an example of the "lh_load_date" values.

As you can see, there is an interval of 2 hours. The problem is that in Silver I am dropping duplicates based on all columns, including "lh_load_date". Because the times differ, as in the example above, these two records are not considered duplicates.
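To make the Silver step concrete, here is a minimal sketch of deduplicating on every column except the load timestamp. The helper name `drop_duplicates_ignoring` is hypothetical, and it assumes `df` is a Spark DataFrame that exposes `columns` and `dropDuplicates(subset)`. It is an illustration of the idea, not the code I am running today.

```python
def drop_duplicates_ignoring(df, ignored=("lh_load_date",)):
    """Drop duplicate rows, comparing every column except those in `ignored`.

    Hypothetical helper: `df` is assumed to be a Spark DataFrame.
    """
    # df.columns lists all column names of the DataFrame.
    subset = [c for c in df.columns if c not in ignored]
    # dropDuplicates(subset) keeps one row per distinct combination of the
    # subset columns; which lh_load_date survives in a duplicate group is
    # not guaranteed.
    return df.dropDuplicates(subset)
```

With this, two records that differ only in "lh_load_date" would collapse into one, at the cost of not controlling which load time is kept.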

Can anyone suggest how to avoid this, so that "lh_load_date" has the same load date and time across all rows even when the data volume is very large?
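One direction I have considered, sketched under assumptions: capture a single timestamp on the driver before the load starts and write it as a literal, assuming the column is currently filled by something evaluated per micro-batch (for example `current_timestamp()`). The function names `capture_load_timestamp` and `with_fixed_load_date` are hypothetical.

```python
from datetime import datetime, timezone


def capture_load_timestamp():
    """Return one UTC timestamp, taken once before the load starts."""
    # Truncate to whole seconds so the value is easy to compare and log.
    return datetime.now(timezone.utc).replace(microsecond=0)


def with_fixed_load_date(df, load_ts, column="lh_load_date"):
    """Stamp every row of `df` with the same literal timestamp.

    Hypothetical helper: `df` is assumed to be a Spark DataFrame.
    """
    # Imported here so capture_load_timestamp() works without Spark installed.
    from pyspark.sql import functions as F

    # F.lit(load_ts) embeds the fixed value in the query plan, so every
    # micro-batch writes the same timestamp.
    return df.withColumn(column, F.lit(load_ts))
```

The idea would be to call `capture_load_timestamp()` once and pass the result to `with_fixed_load_date` before the write to Bronze. I am not sure how this behaves if the job is restarted midway, since a new timestamp would be captured unless the value is stored somewhere.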