I have implemented an amqplib consumer that is running into excessive memory consumption.

I'm unsure whether to classify it as a memory leak since, on one hand, the process seems to stabilize at a lower memory level, but on the other hand, it never returns to the initial level.

The amqplib consumer performs the following steps:

- It fetches messages from a RabbitMQ server, and these messages contain a file path.

- It downloads the file from the specified path using buffer streams. At this point, the buffer object in the consumer holds the entire file data.

- It then uploads the file to Amazon S3 using the AWS SDK library.
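For reference, the steps above can be sketched roughly as follows. This is a simplified illustration rather than my exact code: `downloadStream` and `uploadToS3` are hypothetical helpers standing in for the real download and AWS SDK calls, and they are passed in so the buffering pattern is visible on its own.

```javascript
// Collect a readable stream into a single Buffer.
// After this returns, the whole file is held in memory at once.
async function readToBuffer(stream) {
  const chunks = [];
  for await (const chunk of stream) {
    chunks.push(Buffer.isBuffer(chunk) ? chunk : Buffer.from(chunk));
  }
  return Buffer.concat(chunks);
}

// Handle one RabbitMQ message whose body is a file path.
// `downloadStream` and `uploadToS3` are hypothetical injected helpers,
// not amqplib or AWS SDK functions.
async function handleMessage(msg, { downloadStream, uploadToS3 }) {
  // amqplib delivers the message body as a Buffer in msg.content.
  const filePath = msg.content.toString('utf8');
  const body = await readToBuffer(await downloadStream(filePath));
  await uploadToS3(filePath, body);
  return body.length; // number of bytes uploaded
}
```

In the real consumer, `handleMessage` is called from the callback passed to `channel.consume`, and the message is acknowledged once the upload finishes.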

I have already exposed the garbage collector and run it manually, which significantly improved memory usage by cleaning up many dead objects. However, when I download and upload a large file, memory usage drops afterwards but still remains high.
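A minimal sketch of how the numbers around a manual collection can be captured, assuming the process is started with `node --expose-gc`. The helper names `toMB`, `memorySnapshot` and `collectAndReport` are made up for this illustration. `process.memoryUsage()` reports `heapUsed` for JavaScript objects, `external` and `arrayBuffers` for memory held outside the V8 heap (which is where `Buffer` contents are counted), and `rss` for the total resident size of the process, so comparing them may help show which figure is staying high.

```javascript
// Convert bytes to megabytes, rounded to one decimal place.
const toMB = (bytes) => Math.round((bytes / 1024 / 1024) * 10) / 10;

// Snapshot the main memory counters of the current process, in MB.
function memorySnapshot() {
  const { rss, heapUsed, external, arrayBuffers } = process.memoryUsage();
  return {
    rss: toMB(rss),
    heapUsed: toMB(heapUsed),
    external: toMB(external),
    arrayBuffers: toMB(arrayBuffers),
  };
}

// Force a collection (only possible when started with --expose-gc)
// and log the counters before and after it.
function collectAndReport(label) {
  const before = memorySnapshot();
  const gcAvailable = typeof globalThis.gc === 'function';
  if (gcAvailable) globalThis.gc();
  const after = memorySnapshot();
  console.log(label, { gcAvailable, before, after });
  return { gcAvailable, before, after };
}
```

Calling `collectAndReport('after upload')` at the end of each upload gives one log line per file to compare against the profile.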

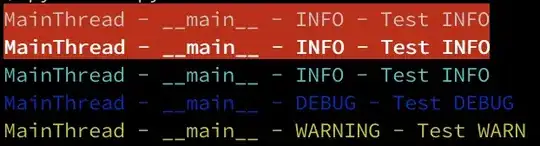

Using the GC, the profile goes from this:

I've also tried closing all stream objects and setting the buffers to null at the end of every upload. Even so, the GC does not bring memory usage down far enough.

Short of restarting the process every now and then, what mitigation strategies can I implement?