I have a node TCP server which is also connected to a redis server, and the clients are devices that are connected via mobile network inside cars (trackers) Everytime a device connects it sends its imei and a "seen_at" variable for that imei is pushed to the redis. Then the device sends data and this data is converted to a Laravel job and is also pushed to the redis.

The problem is that sometimes, the clients send TCP SYN packets that the server ignores for some reason. It causes the device to ask SYN again until the servers finally answers. (This has been proven, a tcpdump of the production server was recorded and we can actually see that some devices send SYN packets that the server never replies to)

It creates latency for the tracking purpose, some data is received 5 min after it was sent, and it can go up to several hours.

I'm not a network expert, and im using node's base network config. Here's the server/socket config :

import * as net from 'net'

import * as netKeepAlive from 'net-keepalive'

import * as stoppable from 'stoppable'

import { v4 as uuidv4 } from 'uuid'

import { AsyncSubject, of, Subscription } from 'rxjs'

import { concatMap, delay, takeUntil } from 'rxjs/operators'

import { env } from '../utils'; // using dotenv

const sockets: Record<string, TcpProtocol> = {}

let interval: NodeJS.Timer

const TCP_KEEPIDLE = 3 * 60 * 1000 // The time (milliseconds) the connection needs to remain idle before TCP starts sending keepalive probes

const TCP_KEEPINTVL = 30 * 1000 // The time (milliseconds) between individual keepalive probes

const TCP_KEEPCNT = 3 // The maximum number of keepalive probes TCP should send before dropping the connection.

export abstract class TcpProtocol {

protected _imei: string = null

protected id: string = null

protected device: Device

protected log: Log // Custom logging class, logs once every second at max, adds color and filters log levels displayed

private static _NET_GRACE: number = null;

static get NET_GRACE(): number

{

if (Protocol._NET_GRACE === null)

{

Protocol._NET_GRACE = parseInt(env('NET_GRACE', Infinity), 10);

}

return Protocol._NET_GRACE;

}

protected get imei() {

return this._imei

}

protected set imei(imei) {

this._imei = imei

this.log.prefix = `@${this.socket.remotePort} | ${this.imei}: `

this.device = new Device(imei, this.log)

this.device.seen().then(() => this.log.debug('Device seen')).catch((err) => this.log.error('device not seen (imei)', err))

}

constructor(protected socket: Socket) {

}

static startServer(port: number) {

const logTag = this.name.toUpperCase()

const log = new Log(logTag)

interval = setInterval(() => log.debug(`Alive sockets: ${Object.keys(sockets).length}`), 1000 * 10)

const server = stoppable(

net.createServer((socket) => {

socket.setNoDelay(true)

socket.setKeepAlive(true, TCP_KEEPIDLE)

netKeepAlive.setKeepAliveInterval(socket, TCP_KEEPINTVL)

netKeepAlive.setKeepAliveProbes(socket, TCP_KEEPCNT)

// @ts-ignore

const listener = new this(socket)

listener.log = new Log(logTag)

listener.onConnect()

socket.on('data', listener._onNewPacket.bind(listener))

socket.on('close', listener.onClose.bind(listener))

socket.on('timeout', listener.onTimeout.bind(listener))

socket.on('error', listener.onError.bind(listener))

socket.on('end', listener.onEnd.bind(listener))

socket.on('drain', () => listener.log.info('drain'))

socket.on('lookup', () => listener.log.info('lookup'))

socket.on('ready', () => listener.log.info('ready'))

socket.on('connect', () => listener.log.info('connect'))

}),

this.NET_GRACE

)

server.on('error', (err) => log.error('Server error', err))

server.on('connection', (socket) =>

log.debug(`New connection from ${socket.remoteAddress}:${socket.remotePort}`)

)

server.on('close', () => log.info('Server closed'))

server.on('listening', () => log.info(`Server is listening on port ${port}`))

server.on('drop', () => log.info('Server dropped'))

server.listen(port, '0.0.0.0')

return server

}

private _onNewPacket(data: Buffer) {

try {

this.log.trace(`Incoming data (size: ${data.length}) >>> ${data.toString('hex')}`)

this.onNewPacket(data)

if (this.device) {

this.device.seen().then(() => this.log.debug('Device seen')).catch((err) => this.log.error('device not seen (data)', err))

}

} catch (ex) {

this.log.error('Uncaught error in listener', ex)

Sentry.captureException(ex)

}

}

onConnect() {

this.id = uuidv4()

sockets[this.id] = this

this.log.prefix = `@${this.socket.remotePort}: `

this.log.trace(`Client connected (${this.socket.remoteAddress}:${this.socket.remotePort})`)

}

onEnd() {

this.log.trace('Client disconnected (end)')

}

onClose(hadError) {

this.log.trace(`Client disconnected (close) ${hadError ? 'with error' : ''}`)

this.disconnectDevice()

}

onError(err) {

if (err.errno === 'ETIMEDOUT') {

this.onTimeout()

return

}

this.log.error('Client disconnected (error)', err)

this.disconnectDevice()

this.socket.destroy()

}

onTimeout() {

this.log.warn('Client disconnected (timeout)')

this.disconnectDevice()

this.socket.end()

}

protected writeSocket(buffer: Buffer): boolean {

try {

this.log.trace(`<<< ${buffer.toString('hex')}`)

} catch (e) {

this.log.trace(`<<< ???`)

}

return this.socket.write(buffer)

}

private disconnectDevice() {

this.disconnected$.next()

this.disconnected$.complete()

this.removeCommandListener()

if (this.device) {

this.device.disconnect()

this.device = null

}

delete sockets[this.id]

}

private async onCommand() {

const command = await this.device.popCommand()

if (!command) {

return

}

try {

this.log.debug('Execute command')

if (!this.executeCommand(command)) {

throw new Error('TcpProtocol.executeCommand returns false')

}

command.markAsRead()

} catch (ex) {

this.log.error('Command execution failed', ex)

if (command.attempts >= 3) {

command.fail()

} else {

command.release()

}

}

}

// region --- Command listener

async registerCommandListener() {

this.removeCommandListener()

this.commandSubscription = this.device

.getCommandListener()

.pipe(concatMap((imei) => of(imei).pipe(delay(1000))))

.pipe(takeUntil(this.disconnected$))

.subscribe(this.onCommand.bind(this))

this.device.getCommands()

this.log.trace('Command listener registered')

}

removeCommandListener() {

if (this.commandSubscription) {

this.log.trace('Command listener removed')

this.commandSubscription.unsubscribe()

this.commandSubscription = null

}

}

// endregion

abstract executeCommand(command: Command)

abstract onNewPacket(data: Buffer)

Current implementation :

export class Teltonika extends TcpProtocol {

protected previousData: Buffer

constructor(socket: Socket) {

super(socket)

}

// .....

onNewPacket(data: Buffer) {

if (this.previousData) {

data = Buffer.concat([this.previousData, data])

}

this.previousData = null

const reader = new Reader(data)

if (this.imei === null) {

this.decodeIMEI(reader) // setting this.imei

this.registerCommandListener()

} else {

this.decodeAVL(reader) // sending data through redis

}

}

executeCommand(command) {

const buffer = Teltonika.createCodec12Request(command.content) // internal function, no need here.

return this.writeSocket(Buffer.from(buffer))

}

// ....

}

Though i can't see any error causing the server to ignore messages.

There are many ETIMEDOUT errors in production. Those are maybe related. For example I got this log :

2023-07-06 10:21:16.663566137 +0200 CEST [tcp-1] [E] @5203 | (imei redacted): Client disconnected (error) Error: read ETIMEDOUT

When I search for this IMEI in our production base, I can clearly see that its last message was sent at 10:16:29 and received at 10:16:41. It timed out at 10:21:16 so it means that the keepalive packet was not answered, or maybe not acknowledged by the server. The current configuration sends the first keepalive packet at 3 minutes + 3 probes every 30s. It gives a total timeout duration of 4min30. 10:21:16 - 00:04:30 == 10:16:46 and 10:16:46 is weirdly connected to the moment where the server received the message (10:16:41).

| IMEI | sent_at (device) | received_at (server) | timedout_at |

|---|---|---|---|

| (1) | 10:16:29 | 10:16:41 | 10:21:16 |

| (2) | 10:22:56 | 10:22:59 | 10:27:35 |

So, what happened ? Why devices mostly timeout after sending data ? The devices sent data after this date but is not received yet.

For the second device, here are the packets timestamps (from newest to oldest) Columns are sent_at (device) and received_at (server)

We can clearly see that after the message sent at 10:22:56, something happened and device apparently lost connection. Then when it reconnects, it burst all packets that haven't been received by the server (you can see the received_at is the same for packets sent between 10:22:56 and 10:27:07. But were actually received at 10:38:59. This is a huge problem.

I don't have control over the devices themselves. But I can configure them. Here's the configuration for these two devices :

| Open link timeout | Response timeout | Network ping timeout |

|---|---|---|

| 300s | 30s | 0s |

Some server logs to make some context:

Here's the analysis of a sys-admin:

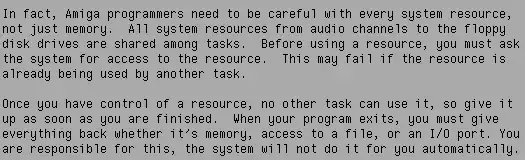

We tried to see if a device tried to reconnect during the "blackouts" by filtering traffic on the IP received before and after the disconnect window. This yielded interesting results. During the disconnect windows, the devices did sent SYN packets to try to reconnect but those were never answered.

Conclusion

During the disconnect period the device did try to re-establish the connection with the application. However the TCP SYN packets were never answered. This is the cause of the issue observed by the client.

The SYN packets are sent to and received by the client’s application container. But those packets were never ACK'ed by the client application. This usually happens when the server is not able to run the accept syscall fast enough.