I want to build a pipeline in Azure ML. The training pipeline runs fine.

Training:

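```python
from azureml.contrib.automl.pipeline.steps import AutoMLPipelineBuilder

# experiment, full_dataset, compute_target and hts_parameters are defined earlier (not shown)
training_pipeline_steps = AutoMLPipelineBuilder.get_many_models_train_steps(
    experiment=experiment,
    train_data=full_dataset,
    compute_target=compute_target,
    node_count=1,
    process_count_per_node=4,
    train_pipeline_parameters=hts_parameters,
    run_invocation_timeout=3900,  # seconds
)
```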

Forecasting:

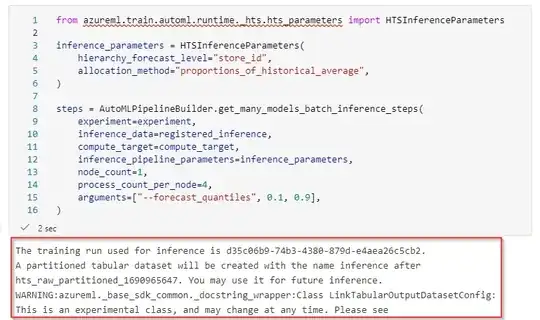

```python
from azureml.train.automl.runtime._hts.hts_parameters import HTSInferenceParameters

inference_parameters = HTSInferenceParameters(
    hierarchy_forecast_level="Material",  # The setting is specific to this dataset and should be changed based on your dataset.
    allocation_method="proportions_of_historical_average",
)
```
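For context on the `allocation_method` setting: when forecasts made at one level of the hierarchy have to be split down to the series below it, each child series receives a fixed share of the parent forecast. As far as I understand, `proportions_of_historical_average` computes that share as the child's historical mean divided by the parent's historical mean, while the alternative (average of historical proportions) averages the child's per-period share instead. The following is only a plain-Python sketch of those two ideas under that assumption, not Azure ML's implementation; the function names, store names and numbers are hypothetical.

```python
from statistics import mean


def proportions_of_historical_average(history):
    """Share of each child = (child's historical mean) / (mean of the parent total).

    `history` maps a child series name to its historical values. All series are
    assumed to cover the same time periods, so the parent total is their sum.
    """
    child_means = {name: mean(values) for name, values in history.items()}
    parent_mean = sum(child_means.values())  # mean of the sum == sum of the means
    return {name: m / parent_mean for name, m in child_means.items()}


def average_historical_proportions(history):
    """Alternative: average over time of each child's share of that period's total."""
    totals = [sum(period) for period in zip(*history.values())]
    return {
        name: mean(value / total for value, total in zip(values, totals))
        for name, values in history.items()
    }


def allocate(parent_forecast, shares):
    """Split a parent-level forecast into child forecasts using fixed shares."""
    return {name: parent_forecast * share for name, share in shares.items()}


if __name__ == "__main__":
    # Hypothetical history of two stores that sit under one "Material" node.
    history = {"store_a": [10, 30], "store_b": [30, 30]}
    pha = proportions_of_historical_average(history)
    ahp = average_historical_proportions(history)
    print(pha)  # store_a ~ 0.4, store_b ~ 0.6
    print(ahp)  # store_a ~ 0.375, store_b ~ 0.625
    print(allocate(100.0, pha))  # store_a ~ 40, store_b ~ 60
```

With this made-up history the two methods give different shares (0.4/0.6 versus 0.375/0.625), which is why the choice of allocation method changes the lower-level forecasts.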

```python
steps = AutoMLPipelineBuilder.get_many_models_batch_inference_steps(
    experiment=experiment,
    # inference_data=registered_inference,
    inference_data=full_dataset,
    compute_target=compute_target,
    inference_pipeline_parameters=inference_parameters,
    node_count=1,
    process_count_per_node=4,
    arguments=["--forecast_quantiles", 0.1, 0.9],
)
```

But the forecasting step always fails with this error:

Can anybody help with that error? Thank you!