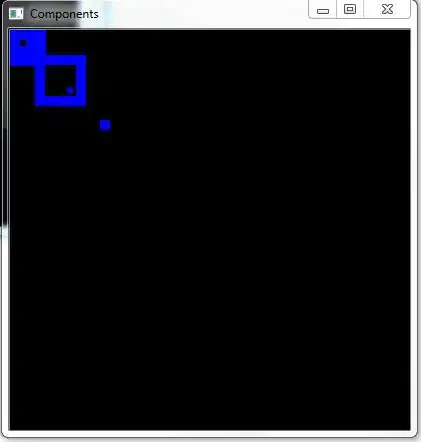

I have cropped this roll-number section from an OMR sheet.

My main task is to recognize the handwritten digits and return the roll number as text in Python. I tried pytesseract, but it does not recognize the digits correctly.

Here is my sample code:

```python
import cv2
import pytesseract


def recognize_digit(image):
    """Clean up a cropped roll-number strip and OCR it with Tesseract."""
    # Preprocess the image for OCR: grayscale, then local contrast equalization
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    clahe = cv2.createCLAHE(clipLimit=0.9, tileGridSize=(8, 8))
    equalized = clahe.apply(gray)

    # Otsu binarization, inverted so ink is white (255) on black (0)
    threshold = cv2.threshold(equalized, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)[1]
    denoised = cv2.fastNlMeansDenoising(threshold, h=0)

    # Remove horizontal box lines: detect long horizontal runs, then paint them black
    horizontal_kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (24, 1))
    detect_horizontal = cv2.morphologyEx(threshold, cv2.MORPH_OPEN, horizontal_kernel, iterations=2)
    cnts = cv2.findContours(detect_horizontal, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    cnts = cnts[0] if len(cnts) == 2 else cnts[1]  # OpenCV 4 returns 2 values, OpenCV 3 returns 3
    for c in cnts:
        cv2.drawContours(image, [c], -1, (255, 255, 255), 5)
        cv2.drawContours(denoised, [c], -1, (0, 0, 0), 5)
        cv2.drawContours(threshold, [c], -1, (0, 0, 0), 5)

    # Remove vertical box lines the same way
    vertical_kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (1, 25))
    detect_vertical = cv2.morphologyEx(threshold, cv2.MORPH_OPEN, vertical_kernel, iterations=2)
    cnts = cv2.findContours(detect_vertical, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    cnts = cnts[0] if len(cnts) == 2 else cnts[1]
    for c in cnts:
        cv2.drawContours(image, [c], -1, (255, 255, 255), 5)
        cv2.drawContours(denoised, [c], -1, (0, 0, 0), 5)
        cv2.drawContours(threshold, [c], -1, (0, 0, 0), 5)

    # Show the cleaned image (debugging only)
    cv2.imshow('X', denoised)
    cv2.waitKey(0)
    cv2.destroyAllWindows()

    # Tesseract generally works best on dark text over a light background, so invert back
    ocr_input = cv2.bitwise_not(denoised)

    # Apply OCR: --psm 7 treats the image as a single text line; only digits are allowed
    config = "--psm 7 --oem 3 -c tessedit_char_whitelist=0123456789"
    text = pytesseract.image_to_string(ocr_input, config=config)
    return text.strip()


# Read the image and fail early if the path is wrong
image_path = 'aligned_image.jpeg'
image = cv2.imread(image_path)
if image is None:
    raise FileNotFoundError(f"Could not read image: {image_path}")

# Region of interest (x, y, width, height) containing the roll-number boxes
x, y, w, h = 4, 4, 800, 100

# Crop the image to the defined region
roi = image[y:y+h, x:x+w]

# Recognize the handwritten digits within the cropped region
recognized_digit = recognize_digit(roi)

# Print the recognized roll number
print("Recognized roll number:", recognized_digit)
```
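Tesseract's models are trained on printed text, and with `--psm 7` the whole boxed strip is read as a single line, so handwritten digits and leftover box borders are easily misread. One possible direction is to split the cleaned strip into one cell per box and recognize each cell on its own, either with Tesseract's single-character mode (`--psm 10`) or with a dedicated digit classifier. The sketch below assumes the boxes are evenly spaced across the full width of the crop; `split_into_cells`, `is_blank`, `num_cells`, `margin`, and `min_ink_fraction` are hypothetical names, and `num_cells` has to match the number of boxes on the real sheet.

```python
import numpy as np


def split_into_cells(binary, num_cells, margin=3):
    """Split a binary strip (ink = nonzero) into num_cells equal-width cells.

    Assumes the boxes are evenly spaced across the full width of the strip.
    `margin` pixels are trimmed from every side of a cell to drop leftover
    box borders. Returns a list of 2-D arrays ordered left to right.
    """
    height, width = binary.shape
    # Integer column boundaries, e.g. width=40, num_cells=4 -> 0, 10, 20, 30, 40
    edges = np.linspace(0, width, num_cells + 1).astype(int)
    cells = []
    for left, right in zip(edges[:-1], edges[1:]):
        cell = binary[margin:height - margin, left + margin:right - margin]
        # Copy so each cell is an independent, contiguous array
        cells.append(cell.copy())
    return cells


def is_blank(cell, min_ink_fraction=0.01):
    """Treat a cell as empty if less than min_ink_fraction of its pixels are ink."""
    return np.count_nonzero(cell) < min_ink_fraction * cell.size


# Hypothetical usage inside recognize_digit, after the line-removal step
# (requires cv2 and pytesseract; num_cells=10 is an assumed box count):
# cells = split_into_cells(denoised, num_cells=10)
# config = "--psm 10 --oem 3 -c tessedit_char_whitelist=0123456789"
# digits = [pytesseract.image_to_string(cv2.bitwise_not(c), config=config).strip()
#           for c in cells if not is_blank(c)]
# roll_number = "".join(digits)
```

Equal-width splitting is the simplest choice; if the boxes on the sheet are not evenly spaced, the cell boundaries would instead have to come from the detected vertical lines.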

How can I get reliable recognition of these handwritten digits?
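If single-character OCR is still unreliable, a common alternative for handwritten digits is a small classifier trained on MNIST-style data. MNIST digits were size-normalized to fit a 20×20 box while preserving aspect ratio and then centred by centre of mass in a 28×28 image, so a cell should be brought into the same format before classification. The sketch below does that with NumPy only, using nearest-neighbour resizing for simplicity; `to_mnist_format` is a hypothetical helper, and training or loading the classifier is not shown.

```python
import numpy as np


def to_mnist_format(cell, box=20, size=28):
    """Convert a binary digit cell (ink = nonzero) into a size x size float image.

    Follows the MNIST convention: crop to the ink's bounding box, scale so the
    longer side fits in `box` pixels (aspect ratio preserved, nearest-neighbour),
    then place it so its centre of mass sits at the centre of the canvas.
    Returns values in {0, 1} with ink = 1, or None if the cell has no ink.
    """
    rows, cols = np.nonzero(cell)
    if rows.size == 0:
        return None
    digit = (cell[rows.min():rows.max() + 1, cols.min():cols.max() + 1] > 0).astype(np.float32)

    # Nearest-neighbour resize so the longer side becomes `box` pixels
    h, w = digit.shape
    scale = box / max(h, w)
    new_h, new_w = max(1, round(h * scale)), max(1, round(w * scale))
    row_idx = np.minimum((np.arange(new_h) / scale).astype(int), h - 1)
    col_idx = np.minimum((np.arange(new_w) / scale).astype(int), w - 1)
    resized = digit[np.ix_(row_idx, col_idx)]

    # Centre of mass of the resized digit (every ink pixel weighted equally)
    ys, xs = np.nonzero(resized)
    if ys.size == 0:
        return None
    center = (size - 1) / 2
    top = int(round(center - ys.mean()))
    left = int(round(center - xs.mean()))

    # Paste into the canvas, clipping anything that would fall outside it
    canvas = np.zeros((size, size), dtype=np.float32)
    src_top, src_left = max(0, -top), max(0, -left)
    dst_top, dst_left = max(0, top), max(0, left)
    ph = min(new_h - src_top, size - dst_top)
    pw = min(new_w - src_left, size - dst_left)
    canvas[dst_top:dst_top + ph, dst_left:dst_left + pw] = \
        resized[src_top:src_top + ph, src_left:src_left + pw]
    return canvas
```

Each non-blank cell from the strip would be passed through this function and the resulting 28×28 arrays fed to whatever digit model is used; the predicted digits, read left to right, form the roll number.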