I have a Databricks cluster on AWS with a minimum of 2 nodes and a maximum of 8. Here's a picture of my cluster:

I have cached a DataFrame, and the Storage tab of the Spark UI shows it as 6.7 GB.

So I would expect Ganglia's UI to show roughly 6.7 GB of memory in use on the cluster (maybe a bit more or less for metadata and other overhead).

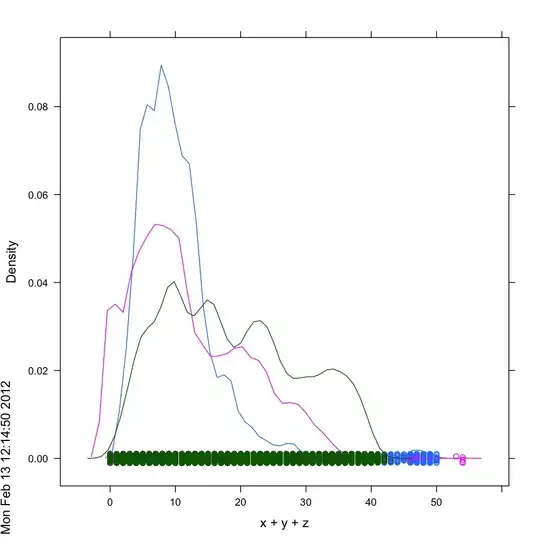

But when I open Ganglia's UI, I see this:

Here is a close-up of the cluster's memory usage graph:

So I don't understand how the 6.7 GB that Spark's UI reports as cached relates to what this graph is telling me.

The graph says 22 GB is in use (by what?) and that there is a "cache" of 3.2 GB, which is nowhere near the 6.7 GB of my cached DataFrame. So where are the 6.7 GB? Are they inside the 22 GB? And if so, what is all the rest?
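For reference, here is the back-of-the-envelope arithmetic behind that confusion, as a small Python sketch. It only uses the figures read off the two UIs; the variable names are my own and it assumes the 6.7 GB would be counted inside Ganglia's "in use" figure, which is exactly what I'm unsure about:

```python
# Figures read off the screenshots, in GB
spark_cached_gb = 6.7    # Spark UI > Storage tab
ganglia_used_gb = 22.0   # Ganglia memory graph, "in use"
ganglia_cache_gb = 3.2   # Ganglia memory graph, "cache"

# Assumption: if the cached DataFrame is part of "in use", this much is unaccounted for
unexplained_gb = ganglia_used_gb - spark_cached_gb

# How far Ganglia's "cache" series falls short of Spark's cached size
cache_gap_gb = spark_cached_gb - ganglia_cache_gb

print(f"'In use' memory not explained by the cached DataFrame: {unexplained_gb:.1f} GB")
print(f"Spark cached size minus Ganglia 'cache': {cache_gap_gb:.1f} GB")
```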

Therefore, my main question is: how do Spark's cached DataFrames show up in Ganglia's cluster memory usage graph? In the simplest world I would have expected Ganglia to say "here are 6.7 GB of cached DataFrames".

I also have a couple more questions:

Ganglia shows three nodes, but the cluster definition image says there are currently only two. I double-checked that both screenshots were captured at the exact same time, and they were. So why are there three instances when the cluster says it's using two? I checked the AWS EC2 instance list, and there are indeed three.

Finally, the cluster definition image shows that each instance has 30 GB of RAM. But Ganglia's UI says the total memory is 74.7 GB. How can that be if each instance has 30 GB? Shouldn't the total be a multiple of 30 GB, depending on how many nodes are running at that moment? The same question applies to CPUs: the top-left corner of Ganglia says 52 CPUs while also showing only 3 nodes, yet according to the cluster definition image each instance has 4 CPUs (maybe it's a virtual vs. physical CPU thing?).
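To make the expected totals explicit, here is the arithmetic as a short Python sketch. The per-node figures (30 GB, 4 CPUs) come from the cluster definition image and the totals (74.7 GB, 52 CPUs) from Ganglia; the function name `expected_totals` is just illustrative, and it assumes all nodes are identical instances:

```python
# Per-node specs from the cluster definition image (assumes identical instances)
RAM_PER_NODE_GB = 30
CPUS_PER_NODE = 4

# Totals reported by Ganglia's dashboard
GANGLIA_RAM_GB = 74.7
GANGLIA_CPUS = 52


def expected_totals(nodes: int) -> tuple[int, int]:
    """Return (total RAM in GB, total CPUs) for `nodes` identical instances."""
    return nodes * RAM_PER_NODE_GB, nodes * CPUS_PER_NODE


# 2 nodes per the cluster UI, 3 nodes per Ganglia and the EC2 console
for nodes in (2, 3):
    ram, cpus = expected_totals(nodes)
    print(f"{nodes} nodes: expected {ram} GB / {cpus} CPUs, "
          f"Ganglia reports {GANGLIA_RAM_GB} GB / {GANGLIA_CPUS} CPUs")

# Ganglia's memory total expressed in units of one node's RAM
print(f"{GANGLIA_RAM_GB} GB / {RAM_PER_NODE_GB} GB = "
      f"{GANGLIA_RAM_GB / RAM_PER_NODE_GB:.2f} nodes' worth")
```

Neither node count gives 74.7 GB or 52 CPUs, which is the mismatch I'm asking about.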

So to summarize, I have four questions:

- How does the Spark UI's report of cached data (6.7 GB) map to Ganglia's memory report?

- Why does Ganglia show 3 nodes (and AWS shows 3 EC2 instances) when the cluster says it currently has 2?

- Why does Ganglia report 74.7 GB of cluster memory when each instance has 30 GB?

- Why does Ganglia's dashboard say my cluster has 52 CPUs while showing 3 nodes, when each node has 4 CPUs according to the cluster definition image?