So, here's my current implementation. The screenshots below show the problem I'm facing.

```javascript
/**
 * @param {import("express").Request} req
 * @param {import("express").Response} res
 */
async function urls_post(req, res) {
  const { id: guild } = req.params;

  /** @type {{ urls?: string[] }} */
  const { urls } = req.body;

  if (!urls?.length) return res.status(400).json({ success: false, message: "Please provide URLs to crawl" });

  const app = new URLApp(guild);

  // Collect every URL that the WHATWG URL parser rejects.
  const invalidURLs = [];
  urls.forEach((u) => {
    try {
      new URL(u);
    } catch (_) {
      invalidURLs.push(u);
    }
  });

  if (invalidURLs.length)
    return res
      .status(400)
      .json({ success: false, message: "One or many of the URLs is/are not valid", invalidURLs, urls });

  await write(res, { acknowledge: true });

  await app.resetURLs().catch(console.error);

  const guildData = await GuildModel.findOne({ guild_id: guild });
  if (!guildData) return res.end(JSON.stringify({ success: false, message: "Guild is not initiated..!" }));

  guildData.urls = urls;
  await guildData.save();

  // Stream each value yielded by processDocuments() as soon as it is produced.
  const urlProcessGenerator = app.processDocuments(...urls);
  for await (const response of urlProcessGenerator) await write(res, response);

  res.end(JSON.stringify({ success: true, urls }));
}

/**
 * @param {import("express").Response} res
 * @param {unknown} data
 */
function write(res, data) {
  // res.write() returns false when the internal buffer is full: wait for "drain" (backpressure).
  if (!res.write(JSON.stringify(data) + ",")) return new Promise((r) => res.once("drain", r));
  // Otherwise resolve on the next tick.
  else return new Promise((r) => process.nextTick(r));
}
```
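For reference, `write()` turns the response body into a sequence of JSON values separated by commas, e.g. `{"acknowledge":true},{...},{"success":true,...}`. A client driving a progress bar has to split that back into individual values, and network chunks can end anywhere, even in the middle of a string. A minimal sketch of such a splitter, assuming every written value is a JSON object or array (`createStreamParser` is a hypothetical helper, not part of the code above):

```javascript
// Hypothetical client-side helper (not from the original code).
// Assumes every value written by write() is a JSON object or array;
// top-level strings or numbers would never be emitted.
function createStreamParser(onValue) {
  let buffer = ""; // text of the value currently being read
  let depth = 0; // current {} / [] nesting level
  let inString = false; // inside a JSON string literal?
  let escaped = false; // previous char was a backslash inside a string?

  // Call push() with each decoded text chunk, in order.
  return function push(chunk) {
    for (const ch of chunk) {
      // Separator commas and whitespace between top-level values are dropped.
      if (depth === 0 && !inString && (ch === "," || /\s/.test(ch))) continue;
      buffer += ch;
      if (inString) {
        if (escaped) escaped = false;
        else if (ch === "\\") escaped = true;
        else if (ch === '"') inString = false;
      } else if (ch === '"') {
        inString = true;
      } else if (ch === "{" || ch === "[") {
        depth++;
      } else if (ch === "}" || ch === "]") {
        depth--;
        // Back at the top level: one complete value is buffered.
        if (depth === 0) {
          onValue(JSON.parse(buffer));
          buffer = "";
        }
      }
    }
  };
}
```

Each decoded chunk would be fed in as it arrives (e.g. `push(decoder.decode(value, { stream: true }))`), with the progress bar updated inside `onValue`. Tracking nesting depth and string state is what makes this safe: splitting the raw text on `,` would break on commas inside objects and URLs.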

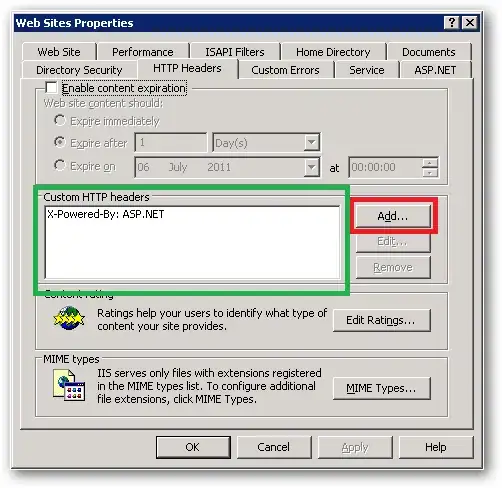

This is what I get in production (proxied through Cloudflare).

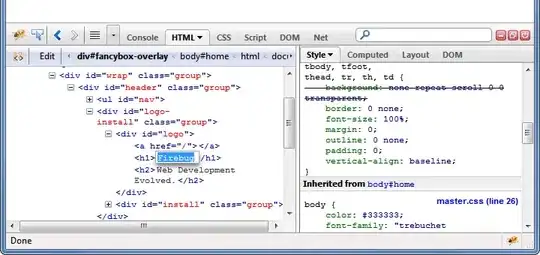

This is what I want.

I'm not sure why Cloudflare waits for the whole response to finish before forwarding the chunks. This matters because we use this endpoint to drive a progress bar: since everything arrives as a single chunk, the progress bar sits at 0% for a while and then jumps to 100% within a millisecond. Feel free to ask for further context if necessary.
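To confirm where the buffering happens, one option is to record when each chunk actually arrives, once against the origin directly and once through the Cloudflare-proxied hostname. A minimal sketch, assuming a runtime with global `fetch` and WHATWG streams (Node 18+ or a modern browser); `timeChunks` is a hypothetical helper name:

```javascript
// Hypothetical diagnostic helper: records the arrival time and size of each
// chunk of a streamed HTTP response body.
async function timeChunks(url, init = {}) {
  const start = Date.now();
  const res = await fetch(url, init);
  if (!res.body) throw new Error(`No response body (HTTP ${res.status})`);

  const reader = res.body.getReader();
  const decoder = new TextDecoder();
  const arrivals = [];

  for (;;) {
    const { done, value } = await reader.read();
    if (done) break;
    arrivals.push({
      ms: Date.now() - start, // elapsed since the request was sent
      bytes: value.byteLength,
      text: decoder.decode(value, { stream: true }),
    });
  }

  // Flush any bytes the decoder held back from a split multi-byte character.
  const tail = decoder.decode();
  if (tail && arrivals.length) arrivals[arrivals.length - 1].text += tail;
  return arrivals;
}
```

Running it with the same POST body against both hostnames should show whether the `ms` values are spread out in one case and bunched into a single late arrival in the other, which would point at the proxy rather than the Express handler.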