*Update: I modified the code after the suggestions of @jlandercy. Further, the code now solves a differential equation shown below to optimize the flow of my product gas. Sample data is updated.

I am trying to fit a two-site Langmuir Hinshelwood model which I derived, (basically, rate as a function of pressures of reactants and products) to my experimental data. The model converges, but changing the initial parameters can make a stark difference. The problem is those parameters matter for my analysis. Since I am comparing different models, the model vs experiment % fit depends on these parameters (K1, K2 ...).

I wanted to know if there is any other method to check that a particular set of parameters are better than the others (obviously a particular combination will give a slightly better-fit percentage, but that, in my opinion, may not the deciding criteria). The MSE landscape calculation, as suggested by @jlandercy, has been useful in understanding the convexity relationship between different parameters, however, I could not extract more information from them to move ahead and fix my K parameters.

P.S.: The other models are way simpler, in terms of mathematics, than this model. So, if I this is well understood, I should be able to compare the models with decent parameter accuracy. Thanks in advance for the help!

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from scipy.optimize import least_squares

# Step 1: Read experimental data

data1 = pd.read_excel("Local address of file")

# Extract the partial pressures (A, B, C) and the reaction rate

pp = data1.iloc[:49, 2:5].values

TT = data1.iloc[:49, 16].values

rr = data1.iloc[:49, 17].values

F0 = data1.iloc[:49, 5:10].values

W = data1.iloc[:49, 15].values

FF = data1.iloc[:49, 10:15].values

data = np.hstack((pp, F0, FF, W.reshape(-1,1), TT.reshape(-1,1)))

#-----------------------------------------------------------------------------

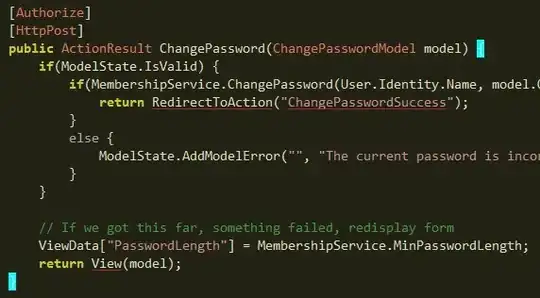

class Kinetic:

"""Langmuir-Hinshelwood Two-Site Kinetic Model Solver"""

R = 8.314 # J/mol.K

Ea = 45000 # J/mol

theta_g = [0.9, 0.1] # 1

w = np.linspace(0,1,100)

@staticmethod

def k3(flow, data, K1, K2, k4, K5, k0):

"""Arrhenius estimation for k3"""

pA, pB, pC, F0_a, F0_b, F0_c, F0_ar, F0_h, FF_a, FF_b, FF_c, FF_ar, FF_h, mg, T = data

return k0 * np.exp(-Kinetic.Ea / (Kinetic.R * T))

@staticmethod

def s(flow, data, K1, K2, k4, K5, k0):

"""Partial kinetic rate"""

pA, pB, pC, F0_a, F0_b, F0_c, F0_ar, F0_h, FF_a, FF_b, FF_c, FF_ar, FF_h, mg, T = data

#Calculating gas pressures at every position of PFR reactor

pA_t = flow[0] / np.sum(flow)

pB_t = flow[1] / np.sum(flow)

pC_t = flow[2] / np.sum(flow)

k3 = Kinetic.k3(flow, data, K1, K2, k4, K5, k0)

return (K1 * K2 * k3 / k4) * pA_t * pB_t

@staticmethod

def equation(x, y, C, s):

return 1/(1 + C + np.sqrt(s*y/x)) - x

@staticmethod

def system(theta, flow, data, K1, K2, k4, K5, k0):

"""Isothermal coverages system"""

pA, pB, pC, F0_a, F0_b, F0_c, F0_ar, F0_h, FF_a, FF_b, FF_c, FF_ar, FF_h, mg, T = data

t0, t1 = theta

s = Kinetic.s(flow, data, K1, K2, k4, K5, k0)

pA_t = flow[0] / np.sum(flow)

pB_t = flow[1] / np.sum(flow)

pC_t = flow[2] / np.sum(flow)

C0 = K2 * pB_t

C1 = K1 * pA_t + pC_t / K5

return np.array([

Kinetic.equation(t0, t1, C0, s),

Kinetic.equation(t1, t0, C1, s),

])

@staticmethod

def _theta(flow, data, K1, K2, k4, K5, k0):

"""Single Isothermal coverages"""

solution = fsolve(

Kinetic.system,

Kinetic.theta_g,

args=(flow, data, K1, K2, k4, K5, k0),

full_output=False

)

if not(all((solution >= 0) & (solution <= 1.0))):

raise ValueError("Theta are not constrained to domain: %s" % solution)

return solution

@staticmethod

def theta(flow, data, k1, k2, k4, k5, k0):

"""Isothermal coverages"""

return np.apply_along_axis(Kinetic._theta, 0, flow, data, k1, k2, k4, k5, k0)

@staticmethod

def r1(flow, w, data, K1, K2, k4, K5, k0):

"""Global kinetic rate"""

pA, pB, pC, F0_a, F0_b, F0_c, F0_ar, F0_h, FF_a, FF_b, FF_c, FF_ar, FF_h, mg, T = data

pA_t = flow[0] / np.sum(flow)

pB_t = flow[1] / np.sum(flow)

pC_t = flow[2] / np.sum(flow)

#print(pA)

k3 = Kinetic.k3(flow, data, K1, K2, k4, K5, k0)

t0, t1 = Kinetic.theta(flow, data, K1, K2, k4, K5, k0)

v=np.array([-1,-1, 1, 0, 0])

#v = v[:, np.newaxis] # Convert v to a 2D array with shape (5, 1)

return ((K1 * K2 * k3) * pA_t * pB_t * t0 * t1) * v

@staticmethod

def dFdm(data, K1, K2, k4, K5, k0):

"""Global kinetic rate"""

residual =[]

sq=[]

for i in data:

pA, pB, pC, F0_a, F0_b, F0_c, F0_ar, F0_h, FF_a, FF_b, FF_c, FF_ar, FF_h, mg, T = i

F0 = np.array([F0_a, F0_b, F0_c, F0_ar, F0_h])

FF = np.array([FF_a, FF_b, FF_c, FF_ar, FF_h]) # Final Experimental flows

flow = odeint(Kinetic.r1, F0, Kinetic.w, args = (i, K1, K2, k4, K5, k0))

flow_pred = flow[-1,2] # Predicted final model flows

res = (flow_pred - FF_c)*1000

residual.append(res)

return residual

bounds=((0, 0, 0, 0, 0), (np.inf, np.inf, np.inf, np.inf, np.inf))

initial_guesses = [8, 8, 50, 16, 6]

result = least_squares(lambda k: Kinetic.dFdm(data, *k), x0=initial_guesses, bounds=bounds, ftol=1e-14)

K1_fit, K2_fit, k4_fit, K5_fit, k0_fit = result.x

print(K1_fit, K2_fit, k4_fit, K5_fit, k0_fit)