import torch

from transformers import AutoTokenizer, AutoModelForCausalLM

import os

os.environ["PYTORCH_CUDA_ALLOC_CONF"] = "max_split_size_mb:32"

os.environ['CUDA_VISIBLE_DEVICES'] = '0,1,2,3,4,5,6,7'

device = torch.device('cuda')

tokenizer = AutoTokenizer.from_pretrained("/data/ygmeng/xuanyuan",trust_remote_code=True)

model = AutoModelForCausalLM.from_pretrained("/data/ygmeng/xuanyuan",trust_remote_code=True, device_map="auto")

with torch.no_grad():

# gpus=[0,1,2,3,4,5,6,7]

# model = torch.nn.DataParallel(model.to(device), device_ids=gpus, output_device=gpus[0])

# model.module.to(device)

model = model.eval()

inputs=tokenizer("<s>" + "Human: " + "Who are you?" + "\n\nAssistant: ",return_tensors='pt')

output = model.generate(**inputs, do_sample=True, temperature=0.8, top_k=50, top_p=0.9, early_stopping=True, repetition_penalty=1.1, min_new_tokens=1, max_new_tokens=256)

print(tokenizer.batch_decode(output,skip_special_tokens=True))

Sometimes this happens to AutoModelForCausalLM.from_pretrained:

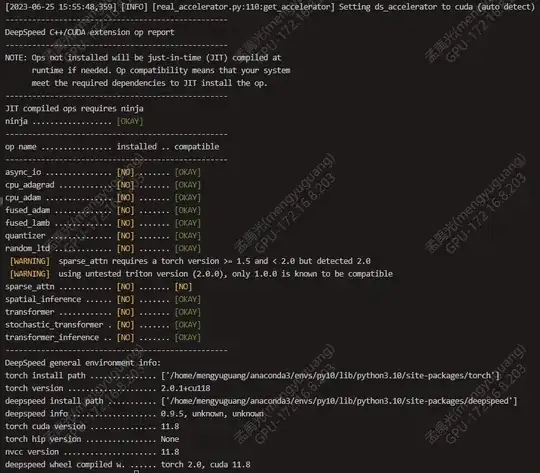

[2023-06-25 16:14:34,031] [INFO] [real_accelerator.py:110:get_accelerator] Setting ds_accelerator to cuda (auto detect)

new_value = value.to(device)

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 13.40 GiB (GPU 0; 79.15 GiB total capacity; 68.53 GiB already allocated; 9.66 GiB free; 68.53 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

When the model is loaded correctly:

You are calling .generate() with the input_ids being on a device type different than your model's device. input_ids is on cuda, whereas the model is on cpu. You may experience unexpected behaviors or slower generation. Please make sure that you have put input_ids to the correct device by calling for example input_ids = input_ids.to('cpu') before running .generate().

I wanted to model.to(device) but no enough memory.So I ran inputs.to("cpu") which ran really slowly.I'm not sure what I did wrong!