I have a job where one dataset is joined with several other datasets. To avoid the cost of reading from the source every time, I created a global temp view of the dataset. In HDFS the dataset is stored in 700 partitions, but in Spark our default number of partitions is 200. I want to cache the repartitioned view of the dataset, but I have not been able to achieve this. Below is the code sample:

    Dataset<Row> accountToUserIdDataset = hdfsClient.loadDataSet("UserDataSetPath"); // <- 700 partitions

    // Repartition once so that we don't have to repartition on every shuffle.
    accountToUserIdDataset = accountToUserIdDataset.repartition(200);

    // Global temp view so that we can join with user details in multiple
    // Spark sessions created using newSession().
    accountToUserDetailsDataset.createOrReplaceGlobalTempView("USERID_TO_DETAILS");

    accountToUserIdDataset.cache();

    accountToUserDetailsDataset.count(); // <- forces materialisation of the cache

    // Across different Spark sessions, join with accountToUserIdDataset.

With the above code, when I try to join with accountToUserIdDataset:

- hdfsClient.loadDataSet("UserDataSetPath") is reused across multiple joins (confirmed by seeing the 700-partition stage skipped), but the repartitioning still happens for every join.

- The Storage tab shows accountToUserDetailsDataset cached with 200 partitions.

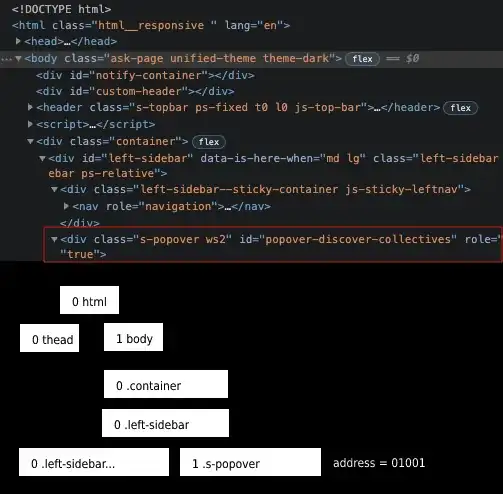

- Below is a screenshot of one job. Stage 7 is where the read from HDFS happens, and it is skipped. Stage 28 repartitions to 200 partitions and then caches the result. I was expecting stage 28 to be skipped as well.

Is this a gap in my understanding, or is it possible to cache a repartitioned dataset? I have gone through this and this answer as well. Another possible reason could be that the joins only use a subset of the columns of accountToUserDetailsDataset. Is Spark having to reshuffle the dataset because of this?
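One more thing I am unsure about: as far as I understand, `repartition(200)` without a column argument spreads rows round-robin, whereas a join wants all rows with the same key in the same partition. If that is right, the cached 200 partitions might still need a key-based shuffle before each join. The sketch below is plain Java with no Spark involved; the keys, the partition count of 4 and all the names in it are made up purely to illustrate the difference between the two placement schemes, and it is not how Spark implements either one.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

class PartitioningSketch {

    // Round-robin placement: the partition depends only on the row position.
    static int roundRobinPartition(int rowIndex, int numPartitions) {
        return rowIndex % numPartitions;
    }

    // Hash placement: the partition depends only on the key value.
    static int hashPartition(String key, int numPartitions) {
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    // Counts how many distinct keys have rows in more than one partition.
    static int keysSplitAcrossPartitions(List<String> keys, boolean byHash, int numPartitions) {
        Map<String, Set<Integer>> partitionsPerKey = new HashMap<>();
        for (int i = 0; i < keys.size(); i++) {
            String key = keys.get(i);
            int partition = byHash
                    ? hashPartition(key, numPartitions)
                    : roundRobinPartition(i, numPartitions);
            partitionsPerKey.computeIfAbsent(key, k -> new HashSet<>()).add(partition);
        }
        int split = 0;
        for (Set<Integer> partitions : partitionsPerKey.values()) {
            if (partitions.size() > 1) {
                split++;
            }
        }
        return split;
    }

    public static void main(String[] args) {
        // Hypothetical account ids; "a1" and "a2" repeat.
        List<String> keys = Arrays.asList("a1", "a2", "a1", "a3", "a2", "a1");
        System.out.println("round-robin, keys split: " + keysSplitAcrossPartitions(keys, false, 4)); // 2
        System.out.println("hash, keys split: " + keysSplitAcrossPartitions(keys, true, 4));         // 0
    }
}
```

With round-robin placement the rows for `a1` and `a2` end up in several partitions, while hash placement keeps each key together. If this is what is going on in my job, would repartitioning on the join column before caching let Spark skip stage 28?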