I'm trying to deploy an MLflow model locally using the Azure ML SDK for Python. I'm following this example https://github.com/Azure/azureml-examples/blob/main/sdk/python/endpoints/online/mlflow/online-endpoints-deploy-mlflow-model.ipynb and this one https://github.com/Azure/azureml-examples/blob/main/sdk/python/endpoints/online/managed/debug-online-endpoints-locally-in-visual-studio-code.ipynb.

My directory structure looks like this:

```
keen_test
+- model
|  +- artifacts
|  |  +- _model_impl_0s5d99i3.pt
|  |  '- settings.json
|  +- conda.yaml
|  +- MLmodel
|  +- python_env.yaml
|  +- python_model.pkl
|  '- requirements.txt
'- deploy-keen.ipynb
```

MLmodel file:

```yaml
artifact_path: model
flavors:
  python_function:
    artifacts:
      model:
        path: artifacts/_model_impl_0s5d99i3.pt
        # uri: /mnt/azureml/cr/j/1393df3add7949989e16b359b8b4fd0c/exe/wd/_model_impl_0s5d99i3.pt
      settings:
        path: artifacts/settings.json
        # uri: /mnt/azureml/cr/j/1393df3add7949989e16b359b8b4fd0c/exe/wd/tmpdy7crhkb/settings.json
    cloudpickle_version: 2.2.1
    env:
      conda: conda.yaml
      virtualenv: python_env.yaml
    loader_module: mlflow.pyfunc.model
    python_model: python_model.pkl
    python_version: 3.8.10
mlflow_version: 2.2.2
model_uuid: 8fba816341fe4ddabac63e552e62874a
run_id: keen_drain_w43g3fq4t6_HD_1
signature:
  inputs: '[{"name": "image", "type": "string"}]'
  outputs: '[{"name": "filename", "type": "string"}, {"name": "boxes", "type": "string"}]'
utc_time_created: '2023-05-25 22:11:54.553781'
```
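One thing worth ruling out before editing `artifact_path`: as far as I understand the MLmodel format, `artifact_path: model` only records where the model was logged inside the training run, while each `path` under `flavors.python_function.artifacts` is resolved relative to the folder that contains `MLmodel`. The sketch below (standard library only) checks that every file this MLmodel refers to exists under `keen_test/model`. `missing_model_files`, `ARTIFACTS` and `REQUIRED_FILES` are hypothetical helpers built by hand from the file above, not part of MLflow or the Azure SDK.

```python
from pathlib import Path
from typing import Dict, List

# Relative paths copied by hand from flavors.python_function.artifacts in MLmodel.
ARTIFACTS: Dict[str, str] = {
    "model": "artifacts/_model_impl_0s5d99i3.pt",
    "settings": "artifacts/settings.json",
}

# The MLmodel file itself plus the other files it references.
REQUIRED_FILES: List[str] = ["MLmodel", "conda.yaml", "python_env.yaml", "python_model.pkl"]


def missing_model_files(model_dir: str) -> List[str]:
    """Return the expected relative paths that are not files under model_dir."""
    root = Path(model_dir)
    expected = REQUIRED_FILES + list(ARTIFACTS.values())
    return [rel for rel in expected if not (root / rel).is_file()]


if __name__ == "__main__":
    # Run from the folder that contains keen_test/.
    missing = missing_model_files("keen_test/model")
    print("missing files:", missing if missing else "none")
```

If nothing is reported missing, the artifact paths are probably fine and the error is more likely about something other than `artifact_path`.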

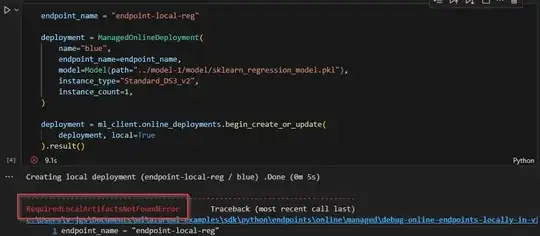

For deployment I use the following code:

```python
from azure.ai.ml.entities import ManagedOnlineDeployment, Model

# create a blue deployment
model = Model(
    path="keen_test/model",
    type="mlflow_model",
    description="my sample mlflow model",
)

blue_deployment = ManagedOnlineDeployment(
    name="blue",
    endpoint_name=online_endpoint_name,  # defined earlier in the notebook
    model=model,
    instance_type="Standard_F4s_v2",
    instance_count=1,
)
```

When I try to run this:

```python
ml_client.online_deployments.begin_create_or_update(blue_deployment, local=True)
```

I get the error:

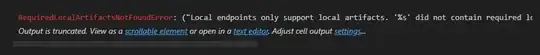

```
RequiredLocalArtifactsNotFoundError: ("Local endpoints only support local artifacts. '%s' did not contain required local artifact '%s' of type '%s'.", 'Local deployment (endpoint-06221317698387 / blue)', 'environment.image or environment.build.path', "")
```

I tried modifying `artifact_path` in the MLmodel file, but nothing worked. What should I change in my configuration to make local deployment work? Does anyone have ideas or experience with deploying MLflow models locally with the Azure ML Python SDK?
