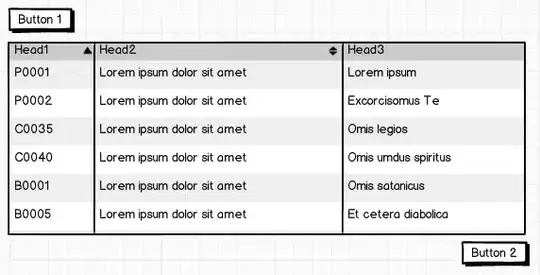

Consider the following BiLSTM diagram for timeseries prediction:

I believe this can easily be applied to the training dataset, but I do not think it is possible for the test dataset. The reverse LSTM layer learns from future values and passes its hidden state back to the previous sample. So why do so many research studies use BiLSTM for time-series prediction?
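To make the concern concrete, here is a minimal sketch of the dependency I mean. It is not a real LSTM: a scalar tanh recurrence stands in for the LSTM cell, and the weights, the example window, and the helper names `rnn_pass` and `bidirectional_states` are all hypothetical choices for illustration. It only shows that the backward state at the first step changes when a later value in the input changes, while the forward state does not.

```python
import math


def rnn_pass(xs, w_in=0.5, w_rec=0.8):
    """Run a scalar tanh recurrence over xs and return the state at every step.

    This is a simplified stand-in for an LSTM cell (assumption), with
    arbitrary fixed weights instead of learned ones.
    """
    h = 0.0
    states = []
    for x in xs:
        h = math.tanh(w_in * x + w_rec * h)
        states.append(h)
    return states


def bidirectional_states(xs):
    """Return (forward, backward) state pairs aligned to each time step."""
    forward = rnn_pass(xs)
    # The backward direction reads the sequence from last to first;
    # reversing the result re-aligns it with the original time order.
    backward = rnn_pass(xs[::-1])[::-1]
    return list(zip(forward, backward))


window = [0.1, 0.4, -0.2, 0.7]
changed = window[:-1] + [-0.9]  # alter only the last (latest) value

a = bidirectional_states(window)
b = bidirectional_states(changed)

# Compare the states at the first time step of both sequences.
forward_same = a[0][0] == b[0][0]
backward_same = a[0][1] == b[0][1]
print(forward_same, backward_same)  # prints "True False"
```

The forward state at the first step is identical for both sequences, but the backward state differs, which is exactly the dependence on later values that I am asking about.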