I have a large and small 2d numpy boolean array. I want to know all positions where this smaller array fits on the larger array. If for a specific location no element of the smaller and (sliced) larger array are True at the same time, the result should be True. See it as trying to place an object onto an image without having any overlap with any other items on the image.

For convenience I chose that the result array is indicating the left-top coordinate of where to place the small array and the result array has the same shape as the large array.

I want to optimize this for speed, for this I tried a lot. The most simple approach was to use pytorch:

import torch

import torch.nn.functional as F

def get_placement_options(large_array, small_array):

shape = large_array.shape

# Convert numpy arrays to PyTorch tensors

large_array = torch.from_numpy(large_array).to('cuda:0').float()

small_array = torch.from_numpy(small_array).to('cuda:0').float()

# Convolve symbol over the large_grid

possible_locations = (F.conv2d(large_array[None, None], small_array[None, None])[0, 0] < .5).cpu().numpy()

result = np.zeros(shape, dtype='bool')

result[:possible_locations.shape[0], :possible_locations.shape[1]] = possible_locations

return result

But I wanted it faster and I was thinking of bitbacking the booleans into an int64. For this new approach I used cupy and wrote my own kernel. This approach does cost more memory but for my use case this is oke, since typically the large array is around (1000x1000) and the smaller array is like (100x100)

I also used a technique called "bitpacking" to efficiently compare patches of 8x8 (int64) using the bitwise AND operator. So the smaller array is bitpacked into int64, for the larger array I can also do this, but have to do it for every 8x8 shift, hence the larger memory usage. Then on the GPU I can very efficiently use the AND operator between two int64 number and if it is not zero, immediately stop for that location.

import cupy as cp

import numpy as np

import time

class Placer:

def __init__(self):

kernel_code = """

extern "C" {

__global__ void compute(long long int* large_array, long long int* small_array, int* result, int rn, int rm, int n, int m, int k, int l) {

int i = blockIdx.x * blockDim.x + threadIdx.x; // x position in large array we are calculating

int j = blockIdx.y * blockDim.y + threadIdx.y; // y position in large array we are calculating

if (i <= rn && j <= rm) {

int r_i = i % 8; // Which x shift we are looking at

int r_j = j % 8; // Which y shift we are looking at

int sub_array_index = r_i * 8 * n * m + r_j * n * m;

for (int p = 0; p < k; ++p) {

for (int q = 0; q < l; ++q) {

if ((small_array[p * l + q] & large_array[sub_array_index + ((i / 8)+p) * m + (j / 8)+q]) != 0) {

result[i * rm + j] = 0;

return;

}

}

}

}

}

}

"""

# Compile the kernel code

self.compiled_kernel = cp.RawKernel(kernel_code, 'compute')

def __call__(self, large_array: np.ndarray, small_array: np.ndarray):

# Result placement coordinates will be left top

result_np = np.zeros_like(large_array)

# Make sure small array divisible by 8, add same extra padding to large array

padding = ((0, (8 - small_array.shape[0] % 8) % 8), (0, (8 - small_array.shape[1] % 8) % 8))

small_array = np.pad(small_array, padding, mode='constant', constant_values=False)

K, L = small_array.shape

large_array = np.pad(large_array, padding, mode='constant', constant_values=True)

# Make sure large array divisible by 8

padding = ((0, (8 - large_array.shape[0] % 8) % 8), (0, (8 - large_array.shape[1] % 8) % 8))

large_array = np.pad(large_array, padding, mode='constant', constant_values=True)

N, M = large_array.shape

# Creating all 64 shifts and packing them into int64 (on the gpu)

large_array_cp = cp.array(large_array)

large_array_cp = cp.pad(self.sliding_window_view_cp(large_array_cp, (8, 8)), ((0, 7), (0, 7), (0, 0), (0, 0)), 'constant', constant_values=True).transpose((2, 3, 0, 1)).copy()

large_array_cp = cp.packbits(large_array_cp.transpose((0, 1, 3, 2))).reshape(8, 8, large_array.shape[1], large_array.shape[0] // 8)

large_array_cp = large_array_cp.transpose((0, 1, 3, 2)).copy().view('int64')

# Convert the small array into int64 as well

small_array = cp.array(np.packbits(small_array.copy(), axis=0).view(np.int64))

# Call the kernel function

block = (32, 32, 1)

grid = ((N-K+1 + block[0] - 1) // block[0], (M-L+1 + block[1] - 1) // block[1])

result = cp.ones((N-K+1, M-L+1), dtype=cp.int32)

self.compiled_kernel(grid=grid, block=block, args=(large_array_cp, small_array, result, N-K+1, M-L+1, N // 8, M // 8, K // 8, L // 8))

# Ensure the GPU has finished processing

cp.cuda.stream.get_current_stream().synchronize()

result = result.astype(cp.bool_).get()

result_np[:result.shape[0], :result.shape[1]] = result

return result_np

@staticmethod

def sliding_window_view_cp(arr, window_shape):

output_shape = arr.shape[:-len(window_shape)] + tuple(i - j + 1 for i, j in zip(arr.shape[-len(window_shape):], window_shape))

strides = arr.strides + arr.strides[-len(window_shape):]

return as_strided(arr, shape=output_shape + window_shape, strides=strides)

Although I think in theory this approach should be faster, it is approximately as fast as the first approach. Probably misses some CUDA optimization.

To test I used

large_array = np.random.choice(a=[False, True], size=(1000, 1000))

small_array = np.zeros((100, 100), dtype=bool)

small_array[-4:, -4:] = True

large_array[-260:, -260:] = False

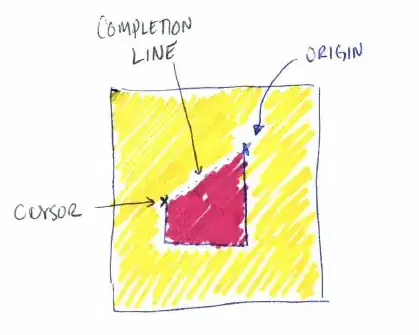

which give the following valid locations:

The first method took .06 seconds, the second method took .05 seconds.

I can't really believe that nobody has done this before. I think there is a speedup possible but I can't find the library to do this. Anyone has any tips, ideas how to make this faster?