I use Azure Databricks and manage it with the Databricks CLI. When I copy local folders and files to DBFS, the first subfolder is copied with its folder structure intact (the same as on my local machine). For the second subfolder, however, only the files are copied and the folder itself is not, so the result looks like the example below.

Suppose my folder `animal` contains two subfolders, as shown below, and I copy the `animal` folder recursively (with `-r`).

The `animal` folder contains the following subfolders and files:

- animal\land\gorilla.txt

- animal\land\tiger.txt

- animal\land\lion.txt

- animal\sea\fish.txt

- animal\sea\shark.txt

- animal\sea\whale.txt

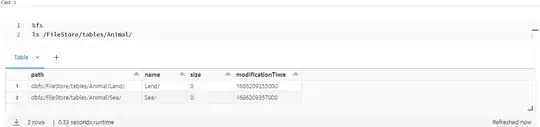

In DBFS, these files end up saved like this:

- animal\land\gorilla.txt

- animal\land\tiger.txt

- animal\land\lion.txt

- animal\fish.txt

- animal\shark.txt

- animal\whale.txt
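To make the difference between the two listings concrete, here is a minimal Python sketch that normalizes both listings to forward-slash paths and reports which files lost their subfolder. The function name `compare_listings` is hypothetical, and the listings are typed in by hand from the lists above rather than read from DBFS.

```python
from pathlib import PureWindowsPath


def compare_listings(local_files, dbfs_files):
    """Return (missing, unexpected): files expected from the local tree but
    absent in DBFS, and files present in DBFS but not in the local tree."""
    # PureWindowsPath accepts both \ and / as separators; as_posix() normalizes to /
    local = {PureWindowsPath(p).as_posix() for p in local_files}
    dbfs = {PureWindowsPath(p).as_posix() for p in dbfs_files}
    return sorted(local - dbfs), sorted(dbfs - local)


# Listings copied by hand from the question (hypothetical input, not read from DBFS)
local_files = [
    r"animal\land\gorilla.txt", r"animal\land\tiger.txt", r"animal\land\lion.txt",
    r"animal\sea\fish.txt", r"animal\sea\shark.txt", r"animal\sea\whale.txt",
]
dbfs_files = [
    r"animal\land\gorilla.txt", r"animal\land\tiger.txt", r"animal\land\lion.txt",
    r"animal\fish.txt", r"animal\shark.txt", r"animal\whale.txt",
]

missing, unexpected = compare_listings(local_files, dbfs_files)
print("missing:", missing)        # the three files under animal/sea/
print("unexpected:", unexpected)  # the same three files directly under animal/
```

Every `sea` file shows up in both results, which is exactly the flattening described above: the files arrived, but one directory level too high.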

This is the command I ran:

    databricks fs cp -r 'C:myname/work/animal/' dbfs:/earth/organism
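As a possible workaround (not a confirmed fix), here is a minimal sketch that avoids relying on `-r`. It walks the local folder and runs one `databricks fs cp` per file, each with an explicit DBFS destination that includes the subfolder. The helper names `build_cp_commands` and `copy_tree` are hypothetical. The sketch assumes the Databricks CLI is on `PATH` with a configured profile, and that `databricks fs cp` creates missing parent folders in DBFS; if it does not, the `land` and `sea` folders would need to be created first.

```python
import subprocess
from pathlib import Path


def build_cp_commands(local_root: str, dbfs_root: str) -> list[list[str]]:
    """Return one `databricks fs cp` argument list per file under local_root,
    each with an explicit DBFS destination that keeps the subfolder."""
    root = Path(local_root)
    commands = []
    for path in sorted(root.rglob("*")):
        if path.is_file():
            # as_posix() turns land\gorilla.txt into land/gorilla.txt on Windows
            rel = path.relative_to(root).as_posix()
            dest = f"{dbfs_root.rstrip('/')}/{rel}"
            commands.append(["databricks", "fs", "cp", str(path), dest])
    return commands


def copy_tree(local_root: str, dbfs_root: str, dry_run: bool = True) -> None:
    """Print each command; only execute it when dry_run is False."""
    for cmd in build_cp_commands(local_root, dbfs_root):
        print(" ".join(cmd))
        if not dry_run:
            # Assumes the Databricks CLI is installed and authenticated
            subprocess.run(cmd, check=True)
```

For example, `copy_tree(r"C:\myname\work\animal", "dbfs:/earth/organism")` (the local path here is a hypothetical stand-in) only prints the commands with the default `dry_run=True`, so every destination can be checked before anything is uploaded.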

I expected the following in DBFS:

- /earth/organism/land/gorilla.txt (and all the other `land` files)

- /earth/organism/sea/whale.txt (and all the other `sea` files; at the moment the `sea` folder does not even exist)

What am I doing wrong? Can someone correct me?

[Screenshot: the copied folders as they appear in Databricks DBFS]