I'm currently tuning my model's hyperparameters and keeping the model with the lowest error. Before I start tuning, I make sure my validation and test sets are class-balanced by randomly removing rows from the class that occurs most often (class 0). This is the code:

    import numpy as np

    # Count samples per class (index 0 = class 0, index 1 = class 1)
    vali_weight = np.unique(y_validation, return_counts=True)[1]
    test_weight = np.unique(y_test, return_counts=True)[1]

    # Number of class-0 rows to remove (assumes class 0 is the majority)
    vali_remove_count = vali_weight[0] - vali_weight[1]
    test_remove_count = test_weight[0] - test_weight[1]

    # Re-merge features and target so rows can be dropped together
    # Validation
    xv = X_validation.copy()
    xv["TARGET"] = y_validation
    xv = xv.drop(xv.query('TARGET == 0').sample(vali_remove_count).index)

    # Test
    xt = X_test.copy()
    xt["TARGET"] = y_test
    xt = xt.drop(xt.query('TARGET == 0').sample(test_remove_count).index)

    # Re-split data
    y_validation = xv["TARGET"]
    xv.drop(columns=["TARGET"], inplace=True)
    X_validation = xv.copy()

    y_test = xt["TARGET"]
    xt.drop(columns=["TARGET"], inplace=True)
    X_test = xt.copy()

    # Recount the classes to confirm both sets are now balanced
    vali_weight = np.unique(y_validation, return_counts=True)[1]
    test_weight = np.unique(y_test, return_counts=True)[1]

For the training data, I use sample weights during training instead of removing rows:

    sample_weights = compute_sample_weight(class_weight='balanced', y=y_train)
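For reference, scikit-learn documents the `balanced` option as weighting each class by `n_samples / (n_classes * class_count)`. The sketch below reproduces that formula in plain Python on made-up labels; `balanced_sample_weights` and `y_example` are hypothetical names used only for illustration and are not part of the code above.

```python
from collections import Counter


def balanced_sample_weights(y):
    """Per-sample weight: n_samples / (n_classes * count of the sample's class)."""
    counts = Counter(y)
    n_samples, n_classes = len(y), len(counts)
    return [n_samples / (n_classes * counts[label]) for label in y]


# Made-up labels: 8 negatives and 2 positives
y_example = [0] * 8 + [1] * 2
weights = balanced_sample_weights(y_example)

# Total weight carried by each class
weight_per_class = {
    label: sum(w for w, l in zip(weights, y_example) if l == label)
    for label in set(y_example)
}

print(weights[0], weights[-1])
print(weight_per_class)
```

Under this weighting every class carries the same total weight, which is the same goal the row removal on the validation and test sets pursues by a different route.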

After this step is complete, I train another model with the best parameters found during tuning to check that everything is correct:

    from sklearn.metrics import accuracy_score, classification_report, mean_absolute_error
    from sklearn.utils.class_weight import compute_sample_weight
    from xgboost import XGBClassifier

    # bp holds the best parameters found during tuning
    clf = XGBClassifier(
        objective="binary:logistic",
        booster="gbtree",
        max_depth=bp['max_depth'],
        gamma=bp['gamma'],
        max_leaves=bp['max_leaves'],
        reg_alpha=bp['reg_alpha'],
        reg_lambda=bp['reg_lambda'],
        colsample_bytree=bp['colsample_bytree'],
        min_child_weight=bp['min_child_weight'],
        learning_rate=bp['learning_rate'],
        n_estimators=200,  # bp['n_estimators'],
        subsample=bp['subsample'],
        random_state=bp['seed'],
    )

    # Balance the classes in training through per-sample weights
    sample_weights = compute_sample_weight(class_weight='balanced', y=y_train)

    # Feature names are capitalized to match the balancing code (X_train is assumed to follow suit).
    # Early stopping uses the last metric (logloss) on the last eval set (validation).
    evaluation = [(X_train, y_train), (X_validation, y_validation)]

    clf.set_params(
        eval_metric=['aucpr', 'logloss'],
        early_stopping_rounds=100,
    ).fit(
        X_train, y_train,
        sample_weight=sample_weights,
        eval_set=evaluation,
        verbose=True,
    )

    # Hard 0/1 class predictions
    train_pred = clf.predict(X_train)
    vali_pred = clf.predict(X_validation)
    test_pred = clf.predict(X_test)

    train_err = mean_absolute_error(y_train, train_pred)
    train_acc = accuracy_score(y_train, train_pred)
    vali_err = mean_absolute_error(y_validation, vali_pred)
    vali_acc = accuracy_score(y_validation, vali_pred)
    test_err = mean_absolute_error(y_test, test_pred)
    test_acc = accuracy_score(y_test, test_pred)

    print(f"Train MAE: {train_err}")
    print(f"Train ACC: {train_acc}")
    print("--------------------------")
    print(f"Validation MAE: {vali_err}")
    print(f"Validation ACC: {vali_acc}")
    print("--------------------------")
    print(f"Test MAE: {test_err}")
    print(f"Test ACC: {test_acc}")
    print("--------------------------")
    print(classification_report(y_test, test_pred))
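A side note on the metrics printed above: `clf.predict` returns hard 0/1 labels, so the MAE on those labels is the misclassification rate, i.e. `1 - accuracy`. Neither number reflects logloss, which is computed from predicted probabilities. The following is a minimal sketch with made-up labels; the helper names and example lists are hypothetical.

```python
def mean_absolute_error_01(y_true, y_pred):
    """Mean absolute error for 0/1 labels and 0/1 predictions."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def accuracy_01(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Made-up labels and hard predictions (3 of 8 are wrong)
y_true_example = [0, 0, 1, 1, 1, 0, 1, 0]
y_pred_example = [0, 1, 1, 0, 1, 0, 1, 1]

mae = mean_absolute_error_01(y_true_example, y_pred_example)
acc = accuracy_01(y_true_example, y_pred_example)

print(f"MAE: {mae}, ACC: {acc}, MAE + ACC: {mae + acc}")
```

Because the two values always sum to 1 for hard binary predictions, reporting one of them is enough.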

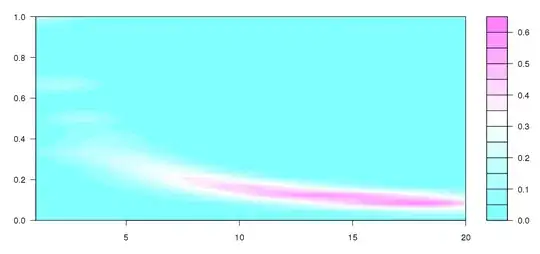

My validation logloss consistently shows little to no movement, while the training metrics behave as expected. Without looking at my data (it's private), what could be the cause of this?
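One reference point that may help when reading the validation curve: on a class-balanced set, a model that always predicts a probability of 0.5 has a logloss of ln 2 (about 0.693). The sketch below computes this in plain Python on made-up balanced labels; `binary_log_loss` and `y_balanced` are hypothetical names, and the clipping constant is an assumption of this sketch.

```python
import math


def binary_log_loss(y_true, p_pred, eps=1e-15):
    """Average binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for t, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)


# Made-up balanced labels: 50 negatives and 50 positives
y_balanced = [0, 1] * 50

# Uninformative model: always predicts probability 0.5
baseline = binary_log_loss(y_balanced, [0.5] * len(y_balanced))

# Somewhat informative model: leans the right way on every sample
informed = binary_log_loss(y_balanced, [0.7 if t == 1 else 0.3 for t in y_balanced])

print(f"baseline logloss: {baseline:.4f}")
print(f"informed logloss: {informed:.4f}")
```

Comparing the flat validation value against this baseline shows whether the model is uninformative on the validation set or has settled at a level below the baseline.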