I'm studying several machine-learning models and am currently tuning them: I have to compare these algorithms on my validation set to select the best one. I use two methods with mlr3 (the code here is an example):

1) My first method (`auto_tuner()` + `benchmark()`):

```r
library(mlr3verse)  # mlr3, mlr3learners, mlr3tuning, paradox, ...

learner_svm = lrn("classif.svm", type = "C-classification",
  cost = to_tune(p_dbl(1e-5, 1e5, logscale = TRUE)),
  gamma = to_tune(p_dbl(1e-5, 1e5, logscale = TRUE)),
  kernel = to_tune(c("polynomial", "radial")),
  degree = to_tune(1, 4),
  predict_type = "prob")

learner_rpart = lrn("classif.rpart", predict_type = "prob",
  cp = to_tune(0.0001, 1), minsplit = to_tune(1, 60),
  maxdepth = to_tune(1, 30), minbucket = to_tune(1, 60))

learner_xgboost = lrn("classif.xgboost", predict_type = "prob",
  nrounds = to_tune(1, 5000), eta = to_tune(1e-4, 1, logscale = TRUE),
  subsample = to_tune(0.1, 1), max_depth = to_tune(1, 15),
  min_child_weight = to_tune(0, 7), colsample_bytree = to_tune(0, 1),
  colsample_bylevel = to_tune(0, 1),
  lambda = to_tune(1e-3, 1e3, logscale = TRUE),
  alpha = to_tune(1e-3, 1e3, logscale = TRUE))

learner_kknn = lrn("classif.kknn", predict_type = "prob", k = to_tune(1, 30))

learner_ranger = lrn("classif.ranger", predict_type = "prob",
  num.trees = to_tune(1, 2000), mtry.ratio = to_tune(0, 1),
  sample.fraction = to_tune(1e-1, 1), importance = "impurity")

# Elastic net: without a to_tune() parameter there is nothing to tune;
# the alpha (L1/L2 mixing) range is an assumed example
learner_glmnet = lrn("classif.glmnet", predict_type = "prob", alpha = to_tune(0, 1))

set.seed(1234)
at_xgboost = auto_tuner(
  tuner = tnr("random_search"),
  learner = learner_xgboost,
  resampling = resampling_inner,
  measure = msr("classif.auc"),
  term_evals = 20,
  store_tuning_instance = TRUE,
  store_models = TRUE
)

set.seed(1234)
at_ranger = auto_tuner(
  tuner = tnr("random_search"),
  learner = learner_ranger,
  resampling = resampling_inner,
  measure = msr("classif.auc"),
  term_evals = 20,
  store_tuning_instance = TRUE,
  store_models = TRUE
)

set.seed(1234)
at_rpart = auto_tuner(
  tuner = tnr("random_search"),
  learner = learner_rpart,
  resampling = resampling_inner,
  measure = msr("classif.auc"),
  term_evals = 20,
  store_tuning_instance = TRUE,
  store_models = TRUE
)

set.seed(1234)
at_svm = auto_tuner(
  tuner = tnr("random_search"),
  learner = learner_svm,
  resampling = resampling_inner,
  measure = msr("classif.auc"),
  term_evals = 20,
  store_tuning_instance = TRUE,
  store_models = TRUE
)

at_glmnet = auto_tuner(
  tuner = tnr("random_search"),
  learner = learner_glmnet,  # was learner_xgboost (copy-paste slip)
  resampling = resampling_inner,
  measure = msr("classif.auc"),
  term_evals = 20,
  store_tuning_instance = TRUE,
  store_models = TRUE
)

measures = msrs(c("classif.auc", "classif.bacc", "classif.bbrier"))

# The featureless baseline also needs predict_type = "prob" for AUC and Brier score
learners = list(lrn("classif.featureless", predict_type = "prob"),
  at_rpart, at_ranger, at_xgboost, at_svm, at_glmnet)

set.seed(1234)
design = benchmark_grid(
  tasks = task,
  learners = learners,
  resamplings = resampling_outer)

bmr = benchmark(design, store_models = TRUE)
results <- bmr$aggregate(measures)
print(results)

archives = extract_inner_tuning_archives(bmr)
inner_learners = mlr3misc::map(archives$resample_result, "learners")
```

To better understand the code:

- `data`: my original, normalized dataset.
- `task`: my data with my target, "LesionResponse".
- `resampling_outer`: splits my whole dataset into train (comprising train + validation) and test.
- `resampling_inner`: splits my train set into train and validation (for hyperparameter optimization).
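A base-R sketch of the split structure described above, to make explicit which rows each step sees. It assumes both `resampling_outer` and `resampling_inner` are single 80/20 holdout splits (in mlr3 that would be something like `rsmp("holdout", ratio = 0.8)`); the post doesn't show their definitions, so the ratio and the 100-row dataset are illustrative assumptions.

```r
# Base-R sketch of two nested holdout splits.
# Assumptions (not from the post): 100 rows, 80/20 splits at both levels.
set.seed(1234)
n <- 100
all_rows <- seq_len(n)

# Outer split: whole dataset -> train (train + validation) and test
outer_train <- sort(sample(all_rows, size = round(0.8 * n)))
test_rows   <- setdiff(all_rows, outer_train)

# Inner split: only the outer train rows -> train and validation (for tuning)
inner_train <- sort(sample(outer_train, size = round(0.8 * length(outer_train))))
validation  <- setdiff(outer_train, inner_train)

cat("outer train:", length(outer_train), " test:", length(test_rows), "\n")
cat("inner train:", length(inner_train), " validation:", length(validation), "\n")
```

The key property is that the test rows never appear in either inner set, so tuning cannot see them.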

Here I use auto-tuning and extract the results on my validation set in order to compare them: I take the best result for each learner and put it in a table.
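A base-R sketch of that "best result per learner" table. It uses a made-up archive (toy numbers, not the results discussed in this post) whose column names `learner_id` and `classif.auc` are assumed to mimic what `extract_inner_tuning_archives(bmr)` returns; with the real object, `as.data.frame(archives)` would take the place of `archive`.

```r
# Toy archive: one row per evaluated configuration.
# Values are made up for illustration; column names are assumed.
archive <- data.frame(
  learner_id  = c("rpart", "rpart", "svm", "svm", "xgboost", "xgboost"),
  classif.auc = c(0.70, 0.74, 0.81, 0.78, 0.80, 0.83),
  stringsAsFactors = FALSE
)

# For each learner, keep the row with the highest inner (validation) AUC
best_per_learner <- do.call(rbind, lapply(
  split(archive, archive$learner_id),
  function(d) d[which.max(d$classif.auc), ]
))
rownames(best_per_learner) <- NULL
print(best_per_learner)
```

Keep in mind that the maximum over 20 random-search evaluations is an optimistically biased estimate of a learner's performance, which is why the outer (test) score is needed for an honest comparison.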

2) My second method (`tune_nested()`) is the following one:

```r
library(mlr3verse)  # mlr3, mlr3learners, mlr3tuning, paradox, ...

set.seed(1234)
# Auto-tuning elastic net
# (without a to_tune() parameter there is nothing to tune; the alpha range is an assumed example)
learner_glmnet = lrn("classif.glmnet", predict_type = "prob", alpha = to_tune(0, 1))
measure = msr("classif.auc")

# terminator = trm("none") is removed from every call below:
# term_evals = 20 already sets the stopping rule, as in method 1
rr_glmnet = tune_nested(
  tuner = tnr("random_search"),
  task = task,
  learner = learner_glmnet,
  inner_resampling = resampling_inner,
  outer_resampling = resampling_outer,
  measure = msr("classif.auc"),
  term_evals = 20,
  store_models = TRUE
)
glmnet_results <- extract_inner_tuning_results(rr_glmnet)[, .SD, .SDcols = !c("learner_param_vals", "x_domain")]

set.seed(1234)
# Auto-tuning ranger
learner_ranger = lrn("classif.ranger", predict_type = "prob",
  num.trees = to_tune(1, 2000), mtry.ratio = to_tune(0, 1),
  sample.fraction = to_tune(1e-1, 1), importance = "impurity")
rr_ranger = tune_nested(
  tuner = tnr("random_search"),
  task = task,
  learner = learner_ranger,
  inner_resampling = resampling_inner,
  outer_resampling = resampling_outer,
  measure = msr("classif.auc"),
  term_evals = 20,
  store_models = TRUE
)
ranger_results <- extract_inner_tuning_results(rr_ranger)[, .SD, .SDcols = !c("learner_param_vals", "x_domain")]

set.seed(1234)
# Auto-tuning kNN
learner_kknn = lrn("classif.kknn", predict_type = "prob", k = to_tune(1, 30))
rr_kknn = tune_nested(
  tuner = tnr("random_search"),
  task = task,
  learner = learner_kknn,
  inner_resampling = resampling_inner,
  outer_resampling = resampling_outer,
  measure = msr("classif.auc"),
  term_evals = 20,
  store_models = TRUE
)
kknn_results <- extract_inner_tuning_results(rr_kknn)[, .SD, .SDcols = !c("learner_param_vals", "x_domain")]

set.seed(1234)
# Auto-tuning xgboost
learner_xgboost = lrn("classif.xgboost", predict_type = "prob",
  nrounds = to_tune(1, 5000), eta = to_tune(1e-4, 1, logscale = TRUE),
  subsample = to_tune(0.1, 1), max_depth = to_tune(1, 15),
  min_child_weight = to_tune(0, 7), colsample_bytree = to_tune(0, 1),
  colsample_bylevel = to_tune(0, 1),
  lambda = to_tune(1e-3, 1e3, logscale = TRUE),
  alpha = to_tune(1e-3, 1e3, logscale = TRUE))
rr_xgboost = tune_nested(
  tuner = tnr("random_search"),
  task = task,
  learner = learner_xgboost,
  inner_resampling = resampling_inner,
  outer_resampling = resampling_outer,
  measure = msr("classif.auc"),
  term_evals = 20,
  store_models = TRUE
)
xgboost_results <- extract_inner_tuning_results(rr_xgboost)[, .SD, .SDcols = !c("learner_param_vals", "x_domain")]

set.seed(1234)
# Auto-tuning rpart
learner_rpart = lrn("classif.rpart", predict_type = "prob",
  cp = to_tune(0.0001, 1), minsplit = to_tune(1, 60),
  maxdepth = to_tune(1, 30), minbucket = to_tune(1, 60))
rr_rpart = tune_nested(
  tuner = tnr("random_search"),
  task = task,
  learner = learner_rpart,
  inner_resampling = resampling_inner,
  outer_resampling = resampling_outer,
  measure = msr("classif.auc"),
  term_evals = 20,
  store_models = TRUE
)
rpart_results <- extract_inner_tuning_results(rr_rpart)[, .SD, .SDcols = !c("learner_param_vals", "x_domain")]

set.seed(1234)
# Auto-tuning SVM
learner_svm = lrn("classif.svm", type = "C-classification",
  cost = to_tune(p_dbl(1e-5, 1e5, logscale = TRUE)),
  gamma = to_tune(p_dbl(1e-5, 1e5, logscale = TRUE)),
  kernel = to_tune(c("polynomial", "radial")),
  degree = to_tune(1, 4),
  predict_type = "prob")
rr_svm = tune_nested(
  tuner = tnr("random_search"),
  task = task,
  learner = learner_svm,
  inner_resampling = resampling_inner,
  outer_resampling = resampling_outer,
  measure = msr("classif.auc"),
  term_evals = 20,
  store_models = TRUE
)
svm_results <- extract_inner_tuning_results(rr_svm)[, .SD, .SDcols = !c("learner_param_vals", "x_domain")]
```

I collect the results of the inner-resampling optimization and put them in a table. Problem: do these methods give the same results (in the end I am basically doing the same thing)? No, and I don't understand why. With the first method, xgboost gives me the best results; with the second one, it is the SVM, and the AUCs differ too.

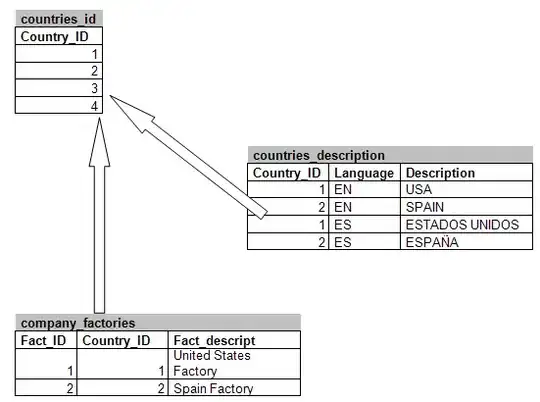
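Part of such a disagreement can come from plain estimation noise on a small validation set. The simulation below is a hypothetical illustration, not the author's data: two models of identical quality are compared on 200 random validation sets of 40 rows each, with AUC computed from the Mann-Whitney rank formula.

```r
# AUC via the Mann-Whitney U statistic; truth is 0/1, tied scores count 1/2
auc_mw <- function(truth, score) {
  r  <- rank(score)
  n1 <- sum(truth == 1)
  n0 <- sum(truth == 0)
  (sum(r[truth == 1]) - n1 * (n1 + 1) / 2) / (n1 * n0)
}

set.seed(1234)
n <- 1000
truth   <- rep(c(0, 1), each = n / 2)
score_a <- truth + rnorm(n)  # hypothetical model A: signal + noise
score_b <- truth + rnorm(n)  # hypothetical model B: same signal, same noise level

wins_a <- replicate(200, {
  # small stratified validation set: 20 negatives + 20 positives
  idx <- c(sample(which(truth == 0), 20), sample(which(truth == 1), 20))
  auc_mw(truth[idx], score_a[idx]) > auc_mw(truth[idx], score_b[idx])
})
cat("share of validation sets where A beats B:", mean(wins_a), "\n")
```

If equally good models swap places from one validation set to the next, a single split cannot reliably rank them, so one run favouring xgboost and another favouring the SVM is not in itself surprising.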

EDIT: Concerning the results obtained, here are two tables. The first one shows the results with the first method.

And then the results with the second method

It's a little embarrassing, since I must choose the best model to evaluate on my test set.

EDIT: Lars gave me the answer: the difference was entirely due to chance and the random seed.
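A minimal base-R illustration of the seed effect: a random search draws its candidate configurations from the current RNG stream, so the same `set.seed(1234)` yields different candidates depending on how many random numbers were consumed before the search starts. The log-uniform draw imitates `p_dbl(1e-5, 1e5, logscale = TRUE)`; `draw_candidates()` is a hypothetical helper, and this sketch does not reproduce how mlr3 actually seeds its resampling iterations.

```r
# Hypothetical helper: k log-uniform draws in [1e-5, 1e5],
# imitating p_dbl(1e-5, 1e5, logscale = TRUE)
draw_candidates <- function(k) exp(runif(k, log(1e-5), log(1e5)))

# Scenario A: reseed immediately before this learner's search
set.seed(1234)
cand_reseeded <- draw_candidates(20)

# Scenario B: same seed, but other random work happens first
set.seed(1234)
invisible(runif(500))  # stand-in for random numbers consumed by earlier steps
cand_shared <- draw_candidates(20)

identical(cand_reseeded, cand_shared)           # FALSE: different candidates

set.seed(1234)
identical(cand_reseeded, draw_candidates(20))  # TRUE: reseeding reproduces them
```

With only 20 evaluations per learner, different candidate sets can easily produce a different "best" configuration and AUC.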

Next question: how can I get a confidence interval in this particular case?
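One common option, sketched in base R under stated assumptions: if `resampling_outer` produces a single train/test split, there is only one outer AUC, so a percentile bootstrap over the test-set predictions can give an interval. `truth` (0/1) and `score` (predicted probability of the positive class) are simulated here; with mlr3 they would come from the outer prediction of the chosen model, and how they map to your positive class is an assumption to check.

```r
# AUC via the Mann-Whitney U statistic; truth is 0/1, tied scores count 1/2
auc_mw <- function(truth, score) {
  r  <- rank(score)
  n1 <- sum(truth == 1)
  n0 <- sum(truth == 0)
  (sum(r[truth == 1]) - n1 * (n1 + 1) / 2) / (n1 * n0)
}

# Percentile bootstrap CI for the AUC, resampling within each class
boot_auc_ci <- function(truth, score, B = 2000, level = 0.95) {
  pos <- which(truth == 1)
  neg <- which(truth == 0)
  stats <- replicate(B, {
    # index via sample.int() so a class with a single row is handled correctly
    idx <- c(pos[sample.int(length(pos), replace = TRUE)],
             neg[sample.int(length(neg), replace = TRUE)])
    auc_mw(truth[idx], score[idx])
  })
  alpha <- (1 - level) / 2
  quantile(stats, c(alpha, 1 - alpha), names = FALSE)
}

# Simulated test set (hypothetical, not real results)
set.seed(1234)
truth <- rep(c(0, 1), times = c(60, 40))
score <- plogis(2 * truth - 1 + rnorm(100))

auc_hat <- auc_mw(truth, score)
ci <- boot_auc_ci(truth, score)
cat(sprintf("test AUC = %.3f, 95%% bootstrap CI [%.3f, %.3f]\n", auc_hat, ci[1], ci[2]))
```

This interval only reflects test-set sampling variability for one fitted model, not the variability introduced by tuning and model selection. Alternatives include DeLong's method (e.g. `pROC::ci.auc()`) or replacing the outer holdout with repeated cross-validation and looking at the spread of the outer scores.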