Context:

I have the following dataset:

Goal: I want to write it to disk. I am using chunks so that the dataset does not kill my kernel.

Problem: I tried to save it to disk in chunks using:

- Option 1: `to_zarr` -> biggest homogeneous chunks possible: `{'mid_date':41, 'x':379, 'y':1}`
- Option 2: `to_netcdf` -> chunk size `{'mid_date':3000, 'x':758, 'y':617}`
- Option 3: `to_netcdf` (or `to_zarr`, same result) -> chunk size `{'mid_date':1, 'x':100, 'y':100}`
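To put the three chunk shapes in perspective, here is a rough sketch of the per-chunk memory footprint for a single variable. The element size of 4 bytes (float32) is an assumption, since the dtype of the dataset is not stated here, and `chunk_nbytes` is a hypothetical helper name, not part of xarray or dask.

```python
from math import prod


def chunk_nbytes(chunks, itemsize=4):
    """Bytes held by one chunk of one variable.

    itemsize=4 assumes float32 data; this is an assumption, adjust to the real dtype.
    """
    return prod(chunks.values()) * itemsize


# Chunk shapes taken from the three options listed above
options = {
    "Option 1": {"mid_date": 41, "x": 379, "y": 1},
    "Option 2": {"mid_date": 3000, "x": 758, "y": 617},
    "Option 3": {"mid_date": 1, "x": 100, "y": 100},
}

for name, chunks in options.items():
    mib = chunk_nbytes(chunks) / 2**20
    print(f"{name}: {mib:,.2f} MiB per chunk and variable")
```

Under that assumption, an Option 2 chunk is several GiB per variable, while Option 1 and Option 3 chunks are only tens of kB each, so very small chunks trade memory per chunk for a much larger number of tasks.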

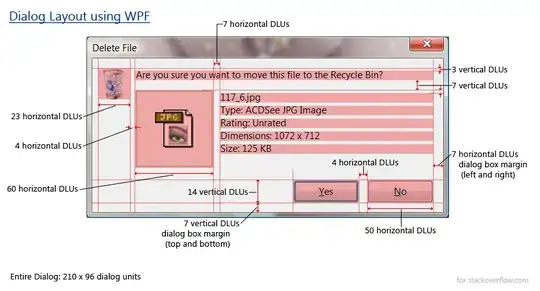

But memory usage blows up anyway (and I have 96 GB of RAM). Option 3 tries another approach by saving chunk by chunk, but it still exhausts the memory (see screenshot). Moreover, it strangely seems to take longer and longer to process each chunk as they are written to disk. Do you have a suggestion on how I could solve this problem?

In the screenshot, I would expect one line of # per file, but already on chunk 2 it seems to be saving multiple chunks at once (three lines of #). The size of chunk 2 was 502 kB.

Code:

    import xarray as xr
    import os
    import sys
    from dask.diagnostics import ProgressBar
    import numpy as np

    xrds = ...  # massive dataset

    pathsave = 'Datacubes/'

    # Option 1, did not work
    # write_job = xrds.to_zarr(f"{pathsave}Test.zarr", mode='w', compute=False, consolidated=True)

    # Option 2, did not work (with chunk size {'mid_date':3000, 'x':100, 'y':100})
    # write_job = xrds.to_netcdf(f"test.nc", compute=False)
    # with ProgressBar():
    #     print(f"Writing to {pathsave}")
    #     write_job = write_job.compute()

    # Option 3, did not work. That's the option I took the screenshot from
    # I force the chunks to be really small so I don't overload the memory
    chunk_size = {'mid_date':1, 'y':xrds.y.shape[0], 'x':xrds.x.shape[0]}

    with ProgressBar():
        for i, (key, chunk) in enumerate(xrds.chunk(chunk_size).items()):
            chunk_dataset = xr.Dataset({key: chunk})
            chunk_dataset.to_netcdf(f"{pathsave}/chunk_{i}.nc", mode="w", compute=True)
            print(f"Chunk {i+1} saved.")
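One thing worth noting about Option 3: iterating over `.items()` of an xarray Dataset yields (variable name, DataArray) pairs, so that loop writes one file per variable rather than one file per dask chunk. A minimal sketch of what slicing along `mid_date` could look like instead is given below. The helper names `iter_slices` and `write_in_slices` and the `slice_XXXXX.nc` file naming are hypothetical, and this has not been run against the dataset in question.

```python
from pathlib import Path


def iter_slices(length, step):
    """Yield consecutive slice objects covering range(length) in blocks of `step`."""
    for start in range(0, length, step):
        yield slice(start, min(start + step, length))


def write_in_slices(ds, dim, step, out_dir):
    """Write `ds` to one netCDF file per block of `step` indices along `dim`.

    `ds` is expected to be an xarray.Dataset, ideally dask-backed so that
    each block is only computed when its file is written.
    """
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for i, sl in enumerate(iter_slices(ds.sizes[dim], step)):
        path = out_dir / f"slice_{i:05d}.nc"
        # isel selects by integer position along `dim`; only this block is written
        ds.isel({dim: sl}).to_netcdf(path, mode="w")
        paths.append(path)
    return paths
```

With the variables from the code above, this might be called as `write_in_slices(xrds, "mid_date", 100, pathsave)`; the block size of 100 is an arbitrary value to tune against the available memory.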