I am trying to build a Node/React setup that streams results from OpenAI. I found an example project that does this, but it uses Next.js. I am making the call successfully and the results come back as they should; the issue is how to return the stream to the client. Here is the code that works in Next.js:

    import { GetServerSidePropsContext } from 'next';
    import { DEFAULT_SYSTEM_PROMPT, DEFAULT_TEMPERATURE } from '@/utils/app/const';
    import { OpenAIError, OpenAIStream } from '@/utils/server';
    import { ChatBody, Message } from '@/types/chat';

    // @ts-expect-error
    import wasm from '../../node_modules/@dqbd/tiktoken/lite/tiktoken_bg.wasm?module';
    import tiktokenModel from '@dqbd/tiktoken/encoders/cl100k_base.json';
    import { Tiktoken, init } from '@dqbd/tiktoken/lite/init';

    const handler = async (
      req: GetServerSidePropsContext['req'],
      res: GetServerSidePropsContext['res'],
    ): Promise<Response> => {
      try {
        const { model, messages, key, prompt, temperature } = (await (
          req as unknown as Request
        ).json()) as ChatBody;

        await init((imports) => WebAssembly.instantiate(wasm, imports));

        console.log({ model, messages, key, prompt, temperature });

        const encoding = new Tiktoken(
          tiktokenModel.bpe_ranks,
          tiktokenModel.special_tokens,
          tiktokenModel.pat_str,
        );

        let promptToSend = prompt;
        if (!promptToSend) {
          promptToSend = DEFAULT_SYSTEM_PROMPT;
        }

        let temperatureToUse = temperature;
        if (temperatureToUse == null) {
          temperatureToUse = DEFAULT_TEMPERATURE;
        }

        const prompt_tokens = encoding.encode(promptToSend);

        let tokenCount = prompt_tokens.length;
        let messagesToSend: Message[] = [];

        for (let i = messages.length - 1; i >= 0; i--) {
          const message = messages[i];
          const tokens = encoding.encode(message.content);

          if (tokenCount + tokens.length + 1000 > model.tokenLimit) {
            break;
          }
          tokenCount += tokens.length;
          messagesToSend = [message, ...messagesToSend];
        }

        encoding.free();

        const stream = await OpenAIStream(
          model,
          promptToSend,
          temperatureToUse,
          key,
          messagesToSend,
        );

        return new Response(stream);
      } catch (error) {
        console.error(error);
        if (error instanceof OpenAIError) {
          return new Response('Error', { status: 500, statusText: error.message });
        } else {
          return new Response('Error', { status: 500 });
        }
      }
    };

    export default handler;

OpenAIStream.ts

    const res = await fetch(url, {...});

    const encoder = new TextEncoder();
    const decoder = new TextDecoder();

    const stream = new ReadableStream({
      async start(controller) {
        const onParse = (event: ParsedEvent | ReconnectInterval) => {
          if (event.type === 'event') {
            const data = event.data;

            try {
              const json = JSON.parse(data);
              if (json.choices[0].finish_reason != null) {
                controller.close();
                return;
              }
              const text = json.choices[0].delta.content;
              const queue = encoder.encode(text);
              controller.enqueue(queue);
            } catch (e) {
              controller.error(e);
            }
          }
        };

        const parser = createParser(onParse);

        for await (const chunk of res.body as any) {
          parser.feed(decoder.decode(chunk));
        }
      },
    });

    return stream;
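
To look at the streaming pattern in isolation from the OpenAI call, the following is a minimal sketch of the same idea: text deltas are encoded to bytes, enqueued on a `ReadableStream`, and the stream is closed when there is nothing left. `streamFromStrings` is a hypothetical helper that is not part of the example project, and the sketch assumes a runtime where `ReadableStream` and `TextEncoder` are globals (Node 18+ or a browser).

```typescript
// Hypothetical helper (not from the example project): wraps a list of text
// deltas in a ReadableStream of bytes, mirroring how OpenAIStream calls
// controller.enqueue(encoder.encode(text)) and then controller.close().
function streamFromStrings(parts: string[]): ReadableStream<Uint8Array> {
  const encoder = new TextEncoder();
  return new ReadableStream<Uint8Array>({
    start(controller) {
      for (const part of parts) {
        // Each delta becomes one chunk of UTF-8 bytes.
        controller.enqueue(encoder.encode(part));
      }
      // Closing signals the reader that no more chunks will arrive.
      controller.close();
    },
  });
}
```

The consumer of such a stream only ever sees `Uint8Array` chunks, which is why the Next.js handler can hand the stream straight to `new Response(stream)`.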

When trying to set this up in Node, the first issue I ran into was that "ReadableStream" is undefined. I solved it with a polyfill:

    import { ReadableStream } from 'web-streams-polyfill/ponyfill/es2018';

When I log

    const text = json.choices[0].delta.content;

it shows that the multiple responses from the API are being returned correctly.

Instead of returning the data with new Response, I am using:

    import { toJSON } from 'flatted';

    export const fetchChatOpenAI = async (
      req: AuthenticatedRequest,
      res: Response
    ) => {
      try {
        const stream = await OpenAIStream(
          model,
          promptToSend,
          temperatureToUse,
          key,
          messagesToSend
        );

        res.status(200).send(toJSON(stream));
      } catch (error) {
        if (error instanceof OpenAIError) {
          console.error(error);
          res.status(500).json({ statusText: error.message });
        } else {
          res.status(500).json({ statusText: 'ERROR' });
        }
      }
    };
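
For comparison, here is a rough sketch of a different approach: instead of serializing the stream object in one `send` call, each chunk is read from the stream and written to the response as it arrives. This is only a sketch under assumptions, not a confirmed fix. `pipeWebStreamToResponse` and `ChunkSink` are hypothetical names, the global `ReadableStream` type is assumed rather than the polyfill's, and the only response methods relied on are `write` and `end`, which Node's `http.ServerResponse` (and therefore an Express response) provides.

```typescript
// Minimal shape of the response object this sketch relies on (hypothetical
// interface; Node's http.ServerResponse has both methods).
interface ChunkSink {
  write(chunk: Uint8Array): unknown;
  end(): unknown;
}

// Hypothetical helper: forwards every chunk of a web ReadableStream to the
// response as soon as it is read, then ends the response.
async function pipeWebStreamToResponse(
  stream: ReadableStream<Uint8Array>,
  res: ChunkSink,
): Promise<void> {
  const reader = stream.getReader();
  try {
    while (true) {
      const result = await reader.read();
      if (result.done) break;
      // Write the raw bytes; the client decodes them with TextDecoder.
      res.write(result.value);
    }
  } finally {
    // End the response even if reading failed part-way through.
    res.end();
    reader.releaseLock();
  }
}
```

In the handler above this would be called as `await pipeWebStreamToResponse(stream, res)` in place of the `send` call. Once the first chunk has been written the status code can no longer be changed, so the `catch` branch could only send a 500 for errors raised before streaming starts.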

On the client, here is how the response is being handled:

    const controller = new AbortController();

    const response = await fetch(endpoint, {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
      },
      signal: controller.signal,
      body: JSON.stringify(chatBody),
    });

    if (!response.ok) {
      console.log(response.statusText);
    } else {
      const data = response.body;

      if (data) {
        const reader = data.getReader();
        const decoder = new TextDecoder();

        let done = false;
        let text = '';

        while (!done) {
          const { value, done: doneReading } = await reader.read();
          console.log(value);

          done = doneReading;
          const chunkValue = decoder.decode(value);
          console.log(chunkValue);

          text += chunkValue;
        }
      }
    }
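
The read loop above can also be written as a small reusable function. The sketch below is one possible shape for it, with the hypothetical name `readTextStream`; it takes `response.body` and a callback that receives each decoded piece. The one behavioural difference from the loop above is that it passes `{ stream: true }` to `TextDecoder.decode`, so a multi-byte character split across two chunks is still decoded correctly.

```typescript
// Hypothetical helper: reads a byte stream (e.g. response.body) to the end,
// reporting each decoded piece through onChunk and returning the full text.
async function readTextStream(
  body: ReadableStream<Uint8Array>,
  onChunk: (text: string) => void,
): Promise<string> {
  const reader = body.getReader();
  const decoder = new TextDecoder();
  let text = '';

  while (true) {
    const result = await reader.read();
    if (result.done) break;
    // stream: true keeps incomplete multi-byte sequences buffered
    // until the next chunk arrives.
    const chunkValue = decoder.decode(result.value, { stream: true });
    if (chunkValue) {
      text += chunkValue;
      onChunk(chunkValue);
    }
  }

  // Flush anything the decoder is still holding.
  const tail = decoder.decode();
  if (tail) {
    text += tail;
    onChunk(tail);
  }
  return text;
}
```

With the `fetch` call above, usage would look like `const text = await readTextStream(response.body, (piece) => console.log(piece))` inside the `if (data)` branch.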

When running the Next.js project, here is a sample output from those logs:

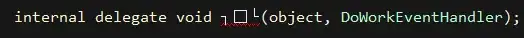

In my Node version, here is a screenshot of what those logs look like: