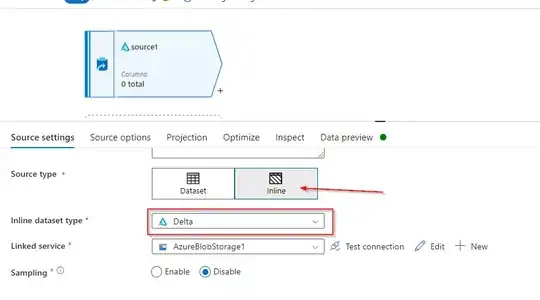

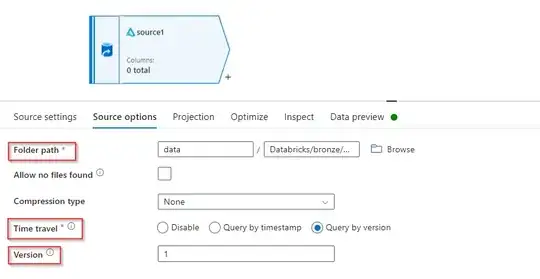

I have a Delta table (a folder of metadata and fragmented Parquet files) that I save from Databricks to Azure Blob Storage. Later, I try to read it with an Azure Data Factory pipeline, but the Copy activity reads all the data in the Delta folder instead of only the latest version (as specified by the metadata).
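To illustrate what "as specified by the metadata" means here: the commits under `_delta_log` record `add` and `remove` actions, and only the files that remain after replaying them belong to a given version. A reader that treats the folder as plain Parquet picks up every file, including removed ones. The sketch below is a minimal illustration, not a replacement for a real Delta reader. It assumes all JSON commits from version 0 are still present (it ignores checkpoint files), and `active_files` is a hypothetical helper name.

```python
import json
from pathlib import Path


def active_files(table_dir, version=None):
    """Replay JSON commits in _delta_log and return the live data file paths.

    Assumption: every commit from version 0 onward still exists as JSON;
    checkpoint Parquet files are not read in this sketch.
    """
    log_dir = Path(table_dir) / "_delta_log"
    live = set()
    # Commit files are named by zero-padded version, so sorting gives commit order.
    for commit in sorted(log_dir.glob("*.json")):
        if version is not None and int(commit.stem) > version:
            break
        for line in commit.read_text().splitlines():
            if not line.strip():
                continue
            action = json.loads(line)  # one action per line
            if "add" in action:
                live.add(action["add"]["path"])
            elif "remove" in action:
                live.discard(action["remove"]["path"])
    return sorted(live)
```

Calling `active_files(path)` returns the files of the latest version, and `active_files(path, version=0)` returns those of the first commit. Paths are relative to the table folder as written in the log and may be URL-encoded.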

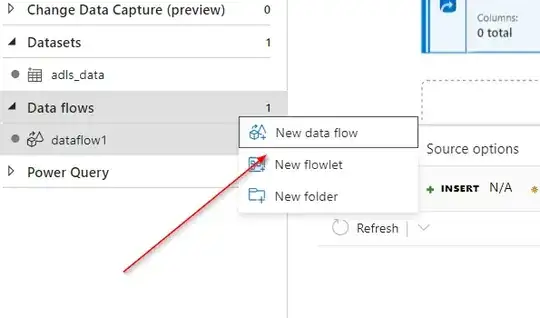

How do I read just one version of a Delta table from Blob Storage?