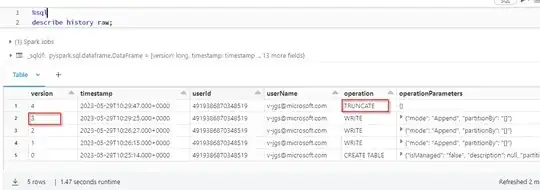

I created a Delta table in Databricks using SQL:

    %sql
    create table nx_bronze_raw (
      `Device` string
    )
    USING DELTA LOCATION '/mnt/Databricks/bronze/devices/';

Then I ingest data (the `Device` column) into this table using:

    bronze_path = '/mnt/Databricks/bronze/devices/'
    df.select('Device').write.format("delta").mode("append").save(bronze_path)

The underlying storage is Azure Blob Storage, and the Databricks runtime is 12.1.

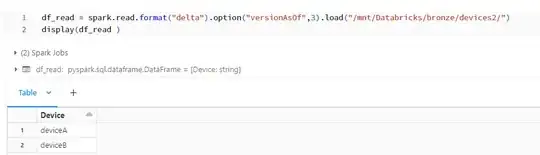

The problem is that when I query this table, it returns 0 records:

    df_read = spark.read.format("delta").load("/mnt/Databricks/bronze/devices/")
    display(df_read)

Query returned no results

However, when I look inside the storage account, the Delta files have been created with the expected size:

What went wrong in this scenario, especially since no error is returned? And why can't I retrieve the data?
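One check that might help narrow this down is to compare the Parquet files visible in storage with the files the Delta transaction log actually references, since a Delta reader only returns rows from files registered by `add` actions in `_delta_log`. Below is a minimal sketch in plain Python that replays the JSON commit files. The helper name `summarize_delta_log` is hypothetical, the sketch ignores Parquet checkpoint files, and it assumes the mount is reachable through the local file API (on Databricks, typically under `/dbfs/mnt/...`).

```python
import json
from pathlib import Path


def summarize_delta_log(table_dir):
    """Replay the JSON commits under _delta_log and return the active data files.

    Hypothetical helper, sketch only: it ignores Parquet checkpoint files,
    so it is accurate only while all JSON commits are still present.
    """
    log_dir = Path(table_dir) / "_delta_log"
    active = {}  # data file path -> size in bytes
    # Commit file names are zero-padded, so lexical order is commit order.
    for commit in sorted(log_dir.glob("*.json")):
        for line in commit.read_text().splitlines():
            if not line.strip():
                continue
            action = json.loads(line)
            if "add" in action:
                active[action["add"]["path"]] = action["add"].get("size", 0)
            elif "remove" in action:
                active.pop(action["remove"]["path"], None)
    return active


# Assumed usage on Databricks (the /dbfs prefix is an assumption):
# print(summarize_delta_log("/dbfs/mnt/Databricks/bronze/devices"))
```

If the returned dictionary is empty while Parquet files exist in the folder, the files were written but are not part of the table's current snapshot, which would be consistent with a query that returns no rows and no error.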