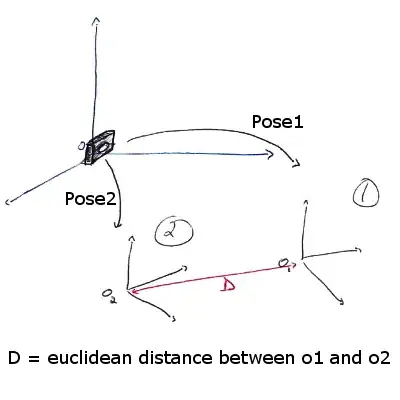

I am trying to train YOLOv8 to detect black dots on human skin. An example of skin and markup is shown below. I've cropped the images to 256x256 pixels, kept only the crops that contain at least one label, and split them into train, test and validation datasets (4000, 2000 and 2000 images respectively).

A segmentation model from segmentation_models_pytorch predicts black dots with IoU=0.15. This is decent, given that the markup is rectangular (while after image rotation augmentation the best guess is a circle) and that the object doesn't have a clear boundary (unlike a car or a chair).
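As a rough sanity check on the rectangle-versus-circle point, here is a minimal sketch that computes the IoU between a square label and the circle inscribed in it. The function name is made up for illustration, and it assumes a perfectly square box with a perfectly centered circular prediction, which real markup will not satisfy.

```python
import math


def iou_inscribed_circle_vs_square(side: float) -> float:
    """IoU between a square box and the circle inscribed in it (idealized case)."""
    square_area = side * side
    circle_area = math.pi * (side / 2) ** 2
    # The inscribed circle lies entirely inside the square, so the
    # intersection is the circle and the union is the square.
    intersection = circle_area
    union = square_area
    return intersection / union


print(round(iou_inscribed_circle_vs_square(10.0), 3))  # 0.785, i.e. pi / 4
```

Under those assumptions the shape mismatch alone caps IoU at about 0.79, so the rest of the gap would have to come from the fuzzy object boundary and imprecise markup.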

I've tried training YOLOv8 with the command

yolo detect train data=D:\workspace\ultralytics\my_coco.yaml model=yolov8n.yaml epochs=100 imgsz=256 workers=2 close_mosaic=100 project='bd' flipud=0.5 mosaic=0.0

Each epoch reports

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size

19/100 1.44G nan nan nan 372 256: 100%|██████████| 267/267 [00:44<00:00, 5.96it/s]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 44/44 [00:06<00:00, 7.06it/s]

all 1391 26278 0 0 0 0

Full output can be found here

A different command yields better results:

yolo detect train data=D:\workspace\ultralytics\my_coco.yaml model=yolov8n.pt epochs=100 imgsz=256 workers=2 close_mosaic=0 project='bd' flipud=0.5 mosaic=0.5

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size

1/100 1.56G nan nan nan 389 256: 100%|██████████| 267/267 [00:44<00:00, 5.96it/s]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 44/44 [00:05<00:00, 8.08it/s]

all 1391 26278 0.0118 0.00202 0.00747 0.00278

....

Epoch GPU_mem box_loss cls_loss dfl_loss Instances Size

4/100 1.54G nan nan nan 165 256: 100%|██████████| 267/267 [00:43<00:00, 6.12it/s]

Class Images Instances Box(P R mAP50 mAP50-95): 100%|██████████| 44/44 [00:05<00:00, 7.65it/s]

all 1391 26278 0.0118 0.00202 0.00747 0.00278

I still get nan for the training losses at the end of every epoch, although finite values do appear partway through an epoch. For example:

6/100 1.05G 3.279 2.164 0.937 565 256: 47%|████▋ | 126/267 [00:20<00:22, 6.22it/s]

Also, note that the validation metrics stay stuck at the values from the first epoch.
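One thing that could be ruled out when losses turn into nan is malformed annotations. Below is a minimal sketch of such a check, assuming the labels are in the usual YOLO text format (one `class x_center y_center width height` line per box, coordinates normalized to [0, 1]). The function name `find_bad_label_lines` and the sample text are hypothetical, and nothing in the logs above confirms that the labels are actually the cause.

```python
import math


def find_bad_label_lines(label_text: str) -> list[tuple[int, str]]:
    """Return (line_number, problem) pairs for suspicious YOLO label lines."""
    problems = []
    for lineno, line in enumerate(label_text.splitlines(), start=1):
        parts = line.split()
        if not parts:
            continue  # ignore blank lines
        if len(parts) != 5:
            problems.append((lineno, "expected 5 fields"))
            continue
        try:
            cx, cy, w, h = (float(p) for p in parts[1:])
        except ValueError:
            problems.append((lineno, "non-numeric value"))
            continue
        coords = (cx, cy, w, h)
        if not all(math.isfinite(v) for v in coords):
            problems.append((lineno, "non-finite value"))
        elif not all(0.0 <= v <= 1.0 for v in coords):
            problems.append((lineno, "value outside [0, 1]"))
        elif w <= 0.0 or h <= 0.0:
            problems.append((lineno, "zero-sized box"))
    return problems


# Made-up sample: one valid box followed by three broken ones.
sample = (
    "0 0.5 0.5 0.1 0.1\n"
    "0 0.5 0.5 0.0 0.1\n"
    "0 1.2 0.5 0.1 0.1\n"
    "0 nan 0.5 0.1 0.1\n"
)
for lineno, problem in find_bad_label_lines(sample):
    print(lineno, problem)
```

In practice the function would be run over the contents of every label file in the train and validation folders; an empty result for all of them would point away from the annotations and toward the training setup itself.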