I have an EKS cluster with Karpenter provisioning the nodes. Everything worked as expected, but when I used AWS FIS to simulate spot instance interruptions, I hit some weird behavior: new nodes were provisioned, but half of them stayed stuck in NotReady forever.

As you can see in the picture below, 3 of 6 nodes are stuck in NotReady status, even though they all use the same launch template, which works fine for normal scaling and deprovisioning (manually terminating EC2 spot instances, scaling pods up and down). When 2 new nodes were provisioned, 1 of them got stuck.

Here is my Provisioner:

    apiVersion: karpenter.sh/v1alpha5
    kind: Provisioner
    metadata:
      name: default
    spec:
      providerRef:
        name: default
      tags:
        karpenter.sh/discovery: finpath-dev
      labels:
        billing-team: my-team
      annotations:
        example.com/owner: "my-team"
      requirements:
        - key: kubernetes.io/os
          operator: In
          values: ["linux"]
        - key: "node.kubernetes.io/instance-type"
          operator: In
          values: ["t3.small", "t3a.small", "t3.medium", "t3a.medium"]
          # values: ["t3.medium", "t3a.medium"]
        - key: "kubernetes.io/arch"
          operator: In
          values: ["amd64"]
        - key: "karpenter.sh/capacity-type"
          operator: In
          values: ["spot", "on-demand"]
      limits:
        resources:
          cpu: "100"
          memory: 100Gi
      consolidation:
        enabled: true
      ttlSecondsUntilExpired: 10800 # 3 hours
      weight: 10

One more weird thing: my launch template includes user data that adds my SSH public key to the node so that I can SSH in later. That worked only on the nodes that were Ready. On the nodes in NotReady status it did not, even though their EC2 state was running; I got Permission denied (publickey,gssapi-keyex,gssapi-with-mic).

Does anyone have any suggestions? Thank you in advance!

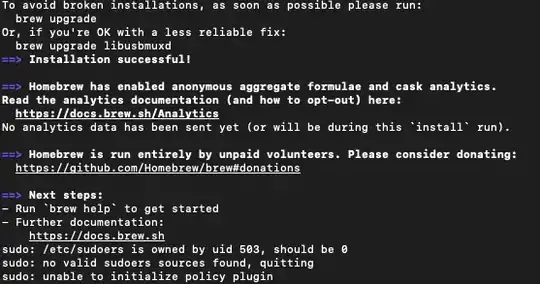

FIXED

After half a day, I figured it out: I waited about 5 minutes for the instances to come up and then tried SSH again. Once in, I saw an error in the kubelet log (journalctl -u kubelet) indicating that kubelet could not list instances ("error listing AWS instances: "RequestError: send request failed caused by: Post ec2.us-west-2.amazonaws.com: dial tcp 54.240.249.157:443: i/o timeout"). The cause was a mistake in my own setup: some of the new nodes were provisioned in a public subnet but had no public IP, so they could not reach the EC2 API. I removed the public subnet from the Karpenter subnet selector.
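For anyone hitting the same thing, here is a rough sketch of what restricting the subnet selector can look like. It assumes the Provisioner's providerRef points at an AWSNodeTemplate named default (I did not post mine above), and the network-tier: private tag is a made-up example that you would have to add to your private subnets yourself. As far as I understand, multiple tags in subnetSelector must all match, so public subnets without that tag are skipped.

```yaml
apiVersion: karpenter.k8s.aws/v1alpha1
kind: AWSNodeTemplate
metadata:
  name: default
spec:
  subnetSelector:
    karpenter.sh/discovery: finpath-dev
    # Hypothetical tag: put it only on private subnets (ones with a NAT route),
    # so Karpenter never launches nodes into a public subnet.
    network-tier: private
  securityGroupSelector:
    karpenter.sh/discovery: finpath-dev
```

The other way to get the same result is to remove the karpenter.sh/discovery tag from the public subnets, so the existing selector no longer matches them.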