Q : "Is it possible correct answer for this question that if I have cloud with 1 vCPU and cloud with 2 vCPU, then applications which will be running at the 2vCPU will be 2x faster?"

No, it will never be so.

Why?

Because Amdahl's law explains that any speedup is limited by the serial fraction of the processing (the serial fraction being the very part that cannot be split across additional resources, no matter how many are added).

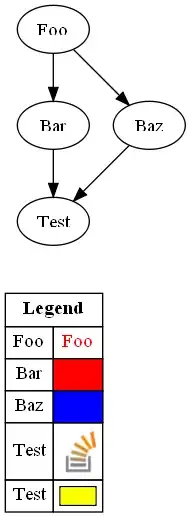

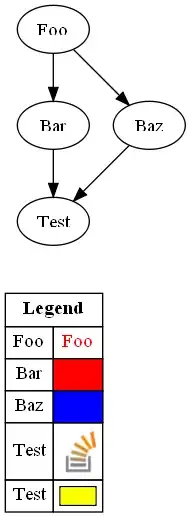

Reading the graph ( 1 ) for 2 CPUs shows that a speedup of 2.00 x is reached only if the Process-under-Test enjoys a "Parallel portion" with zero add-on overhead costs and with full, non-blocking, 100% independence for a true-[PARALLEL] execution, on exclusively pre-reserved, non-shared CPUs that are not internally cannibalised (for commercial reasons) by CPU-work-stealing tricks hidden from most non-professional users. None of this ever happens in real life, so all other cases never reach a speedup of 2.00 x.
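The reading of the graph for 2 CPUs can be reproduced numerically. The sketch below assumes the classical, overhead-naive form of Amdahl's law, S = 1 / ( ( 1 - p ) + p / N ); the function name `amdahl_speedup` and the sample values of `p` are illustrative choices only, not taken from the graph.

```python
def amdahl_speedup(parallel_fraction: float, n_cpus: int) -> float:
    """Classical (overhead-naive) Amdahl's law: S = 1 / ((1 - p) + p / N)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must lie in [0, 1]")
    if n_cpus < 1:
        raise ValueError("n_cpus must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / n_cpus)


if __name__ == "__main__":
    # Illustrative parallel fractions; only p = 1.00 can reach 2.00 x on 2 CPUs
    for p in (0.50, 0.75, 0.90, 0.95, 1.00):
        print(f"p = {p:.2f} -> speedup on 2 CPUs = {amdahl_speedup(p, 2):.3f} x")
```

Even in this idealised form, any non-zero serial fraction keeps the result strictly below 2.00 x.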

Some schoolbook examples omit the real costs of add-on overheads and show promising speedup expectations. A first experiment can show a rather unpleasant contrast once the distribution/recollection overhead costs and other adverse effects are accounted for. Quite often the result is a "negative" speedup, which is actually a slowdown: one pays way more (in add-on costs for distributing the parallel portion and recollecting its results) than one will ever get back (from the chance to use 2, 3, N-many (v)CPU-s to compute the actual useful work).

Some computing strategies are simply way faster in a pure-[SERIAL] mode of execution than they would be after adding code that re-arranges the same useful work for a just-[CONCURRENT] and/or true-[PARALLEL] execution of some parts of the computation across additional resources, whenever and however those resources appear to be free for our code to load and use.
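A "negative" speedup is easy to show on paper. The sketch below is a deliberately simplified model, not a measured result: it assumes the add-on overhead can be lumped into one number, `overhead_fraction`, expressed as a fraction of the original single-CPU run time and paid once in serial. The function name and the sample values are hypothetical.

```python
def speedup_with_overhead(parallel_fraction: float,
                          n_cpus: int,
                          overhead_fraction: float) -> float:
    """Overhead-aware speedup: S = 1 / ((1 - p) + p / N + o).

    Assumption: o lumps together all setup, distribution and
    recollection costs, as a fraction of the original serial run time.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must lie in [0, 1]")
    if n_cpus < 1:
        raise ValueError("n_cpus must be at least 1")
    if overhead_fraction < 0.0:
        raise ValueError("overhead_fraction must be non-negative")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction
                  + parallel_fraction / n_cpus
                  + overhead_fraction)


if __name__ == "__main__":
    # Hypothetical workload: half of the work is parallelisable, 2 vCPUs
    for o in (0.0, 0.1, 0.3):
        s = speedup_with_overhead(0.5, 2, o)
        verdict = "speedup" if s > 1.0 else "slowdown"
        print(f"overhead = {o:.1f} -> S = {s:.3f} x ({verdict})")
```

With these assumed numbers, an overhead of 0.3 already costs more than the second vCPU gives back, so the "parallel" version runs slower than the pure-[SERIAL] one.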

Remarks:

1)

This "classical" graph is both true and wrong.

It is true for abstract processing systems, where dividing the work bears zero additional costs ( not a single instruction added for distributing the work, nor a single instruction needed for recollecting the results of the split execution of the work-units ).

The same graph is wrong in that it also assumes infinitely divisible work-units, as if the "work" were single instructions that can be freely redistributed across any number of processors. This is never the case, if for nothing else then for the add-on overhead costs of that distribution and recollection: no teleportation, even of such a tiny thing as a piece of information, performed in zero time at zero energy cost, is known so far (May-2023) on our Mother Earth. So it costs a lot to pay the overheads of distributing such infinitely parallelisable single-instruction work over any amount, finite or infinite, of free computing resources (read: vCPU, CPU, QCPU, whatever).

This is the reason we always end up with blocks of work-units that are, by definition, indivisible and remain serially executed on a given computing resource. This is called atomicity-of-work. Such a block will never get accelerated by splitting the work, even if zillions of free processors were available for such a "speedup", precisely because the work-unit is not further divisible. Those free resources cannot contribute to any speedup, so they remain unused, idling in NOP-s or processing concurrent workloads of other applications in the real clouds, but not contributing, at that moment, to any acceleration of our Process-under-Test.
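The effect of atomicity-of-work can be illustrated with a toy model. The sketch below assumes a parallel portion made of equally long, indivisible work-units and zero overhead, which is still more optimistic than any real system; the function name `atomic_speedup` and the unit counts are hypothetical.

```python
import math


def atomic_speedup(n_units: int, n_cpus: int) -> float:
    """Speedup of n_units equal, indivisible work-units on n_cpus.

    Each CPU processes whole units only, so the wall-clock time is
    ceil(n_units / n_cpus) unit-times instead of n_units / n_cpus.
    """
    if n_units < 1 or n_cpus < 1:
        raise ValueError("n_units and n_cpus must be at least 1")
    rounds = math.ceil(n_units / n_cpus)
    return n_units / rounds


if __name__ == "__main__":
    # 4 atomic work-units: CPUs beyond the 4th have nothing to do
    for cpus in (1, 2, 3, 4, 1000):
        print(f"{cpus:>4} CPUs -> speedup = {atomic_speedup(4, cpus):.2f} x")
```

In this model, a single atomic work-unit stays at a speedup of 1.00 x no matter how many processors are free, and 4 units on 3 CPUs gain no more than on 2 CPUs, because the last unit still needs a full second round.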