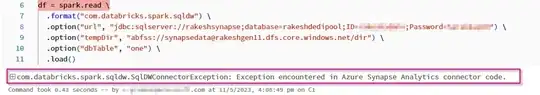

I am trying to use the code in the screenshot above to write data to a table in a Synapse dedicated SQL pool.

The data is stored in ADLS Gen2, and I am trying to write a DataFrame into a SQL table.

I have also created a service principal for Azure Databricks, which I am also using in Synapse with the db_owner role.
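For readers reproducing this setup, the sketch below shows how a service-principal write of this kind is commonly configured with the Databricks Azure Synapse connector (`com.databricks.spark.sqldw`). It is not the exact code from the screenshot. The helper names (`storage_oauth_conf`, `synapse_sp_conf`, `synapse_write_options`) and all account, container, table and credential values are hypothetical placeholders. The config keys and the `enableServicePrincipalAuth` option follow the Databricks and Hadoop ABFS documentation as I understand it, so verify them against your Databricks Runtime version. The helpers only build plain dictionaries, so they can be inspected outside a cluster.

```python
"""Sketch (assumptions labelled): build the Spark settings for writing a
DataFrame to a Synapse dedicated SQL pool using a service principal.
All names and values passed in are hypothetical placeholders."""

ABFS_SUFFIX = "dfs.core.windows.net"


def storage_oauth_conf(account: str, client_id: str,
                       client_secret: str, tenant_id: str) -> dict:
    """Hadoop ABFS settings letting Spark access ADLS Gen2 as the service principal."""
    host = f"{account}.{ABFS_SUFFIX}"
    return {
        f"fs.azure.account.auth.type.{host}": "OAuth",
        f"fs.azure.account.oauth.provider.type.{host}":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        f"fs.azure.account.oauth2.client.id.{host}": client_id,
        f"fs.azure.account.oauth2.client.secret.{host}": client_secret,
        f"fs.azure.account.oauth2.client.endpoint.{host}":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
    }


def synapse_sp_conf(client_id: str, client_secret: str) -> dict:
    """Service principal credentials the connector uses for the JDBC connection
    to Synapse (key names assumed from the Databricks connector docs)."""
    return {
        "spark.databricks.sqldw.jdbc.service.principal.client.id": client_id,
        "spark.databricks.sqldw.jdbc.service.principal.client.secret": client_secret,
    }


def synapse_write_options(jdbc_url: str, table: str, account: str,
                          container: str, temp_path: str = "tmp") -> dict:
    """Writer options: target table, ADLS Gen2 staging folder, and SP auth flag."""
    return {
        "url": jdbc_url,
        "dbTable": table,
        "tempDir": f"abfss://{container}@{account}.{ABFS_SUFFIX}/{temp_path}",
        "enableServicePrincipalAuth": "true",
    }


# Usage on a Databricks cluster (not executed here; `spark` and `df` come from the notebook):
# for key, value in {**storage_oauth_conf("<account>", "<app-id>", "<secret>", "<tenant-id>"),
#                    **synapse_sp_conf("<app-id>", "<secret>")}.items():
#     spark.conf.set(key, value)
# (df.write.format("com.databricks.spark.sqldw")
#    .options(**synapse_write_options("<jdbc-url>", "<schema.table>", "<account>", "<container>"))
#    .mode("append")
#    .save())
```

Keeping the settings in plain dictionaries makes it easy to print and compare them with what the cluster actually has set before calling `save()`.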

When I run the code, I get the following error:

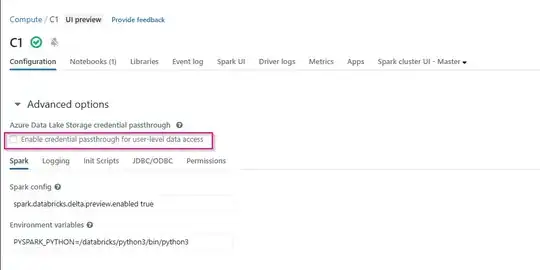

`java.lang.IllegalArgumentException: Could not retrieve Credential Passthrough token. Please check if Credential Passthrough is enabled`

Can someone please explain what is wrong here? My cluster shows that credential passthrough is enabled.