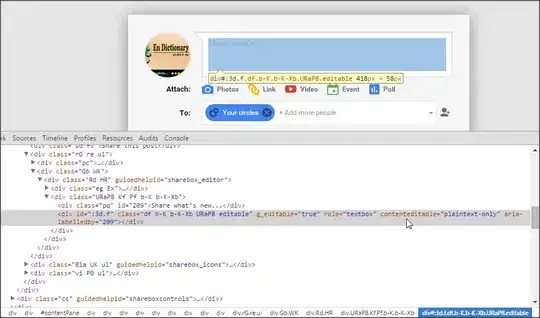

The problem is that I need to fit some data into a function (which was given by my professor, so basically I can't change it) but the fit goes poorly and I can't understand why. I also tried with lmfit method but that didn't work too. Here is the code I used and the image of the fit I get with residuals:Fit

x1 = np.array([200. , 220. , 240. , 260. , 280. , 300. , 320. , 340. ,

360. , 380. , 390. , 392.5 , 395. , 397.5 , 400. , 402.5 ,

405. , 407.5 , 410. , 411.25, 412.5 , 413.75, 414. , 415.25,

416.5 , 417.75, 418.5 , 420. , 422.5 , 425. , 427.5 , 430. ,

432.5 , 435. , 437.5 , 440. , 450. , 460. , 480. , 500. ,

510. , 520. , 540. , 560. , 570. , 580. , 600. ])

y1 = np.array([0.00787402, 0.00904762, 0.01079365, 0.01269841, 0.01539683,

0.01857143, 0.02321429, 0.0302 , 0.04303279, 0.06767896,

0.09456265, 0.10338983, 0.11410579, 0.12545932, 0.14700855,

0.16944444, 0.19692833, 0.22965779, 0.27434783, 0.28681818,

0.32079208, 0.32562814, 0.32893401, 0.33061224, 0.32727273,

0.31268293, 0.30236967, 0.28940092, 0.24274194, 0.20989011,

0.18052805, 0.15531915, 0.13259053, 0.11627907, 0.10341463,

0.07345133, 0.07455357, 0.06403509, 0.04163265, 0.03811475,

0.02948207, 0.0272 , 0.02301587, 0.02027559, 0.01968254,

0.01795276, 0.01672584])

σ_y1 = np.array([0.00021564, 0.00023222, 0.00025707, 0.00028599, 0.00032924,

0.00038243, 0.00046297, 0.00058876, 0.0008021 , 0.00127062,

0.00180749, 0.00196919, 0.00217276, 0.00239208, 0.00284333,

0.00317296, 0.00368032, 0.00432658, 0.00507593, 0.00532676,

0.0060111 , 0.00611128, 0.00618 , 0.00621495, 0.00607953,

0.0057964 , 0.0056074 , 0.00536185, 0.00463023, 0.00401191,

0.00347255, 0.00303842, 0.00250275, 0.00219007, 0.00196289,

0.0014738 , 0.00139797, 0.00129955, 0.00077378, 0.00082702,

0.00054713, 0.00053481, 0.00043752, 0.00041071, 0.0003831 ,

0.00037095, 0.00033556])

# Primo test di fit con 4 parametri

def fitfunc1(x, A, R, L, C, Off): # le frequenze 'f' sono in kHz

ω = 2*np.pi*x

δ = R/(2*L)

Ω = 1/sqrt(L*C)

return A*ω/sqrt(ω**4-2*ω**2*(Ω**2-2*δ**2)+Ω**4)

R_tot = 77.54

R_G = 48

L = 308*1E-6

C = 508*1E-9

p_init1 = [31454, R_tot-R_G, L, C] # valori iniziali di (A, R, L, C)

p_best1, cov1 = curve_fit(

fitfunc1, x1, y1, # assegno funzione di fit, ascisse e ordinate

sigma=σ_y1, # assegno gli errori sulle ordinate

p0=p_init1, bounds=(0, +np.inf) # imposto i valori iniziali dei parametri e intervalli ammessi [0, +∞)

)

#Somma dei residui e deviazione standard a posteriori

res1 = (y1-fitfunc1(x1, *p_best1))

sum_res1 = sum(res1)

σ_post1 = ma.sqrt(sum_res1/(y1.size))

print("---------------------------")

print("Best fit values:")

print("---------------------------")

print("A = (%.5f +/- %.5f) err_percentuale = %.5f" % (p_best1[0],sqrt(cov1[0,0]),sqrt(cov1[0,0])*100/p_best1[0]))

print("R = (%.5f +/- %.5f) Ω err_percentuale = %.5f" % (p_best1[1],sqrt(cov1[1,1]),sqrt(cov1[1,1])*100/p_best1[1]))

print("L = (%.5f +/- %.5f) μH err_percentuale = %.5f" % (p_best1[2]*1E+6,sqrt(cov1[2,2])*1E+6,sqrt(cov1[2,2])*100/p_best1[2]))

print("C = (%.5f +/- %.5f) F err_percentuale = %.5f" % (p_best1[3]*1E+12,sqrt(cov1[3,3])*1E+12,sqrt(cov1[3,3])*100/p_best1[3]))

print("---------------------------")

fig1 = plt.figure(1, figsize=(9,7))

frame1a=fig1.add_axes((.1,.3,.7,.5))

# Grafico del fit

plt.errorbar(x1, y1, yerr=σ_y1, fmt='.', label='dati', capsize = 4)

_pts = np.linspace(x1[0], x1[-1], 1000)

plt.plot(_pts, fitfunc1(_pts, *p_best1), label='fit')

plt.title("Amplificazione ai capi di R")

plt.xlabel("frequenza [kHz]")

plt.ylabel(r"A = $V_{out}/V_{in}$")

plt.legend()

plt.tick_params(bottom = False)

plt.grid()

frame1a.set_xticklabels([])

# Grafico dei residui

frame1b=fig1.add_axes((.1,.1,.7,.19))

plt.errorbar(x1, res1, yerr = σ_y1, fmt ='.', capsize = 4)

plt.xlabel("frequenza [kHz]")

plt.ylabel(r"$A_{mis}-A_{fit}$")

plt.grid()

#salvataggio della file nella cartella, dpi = risoluzione immagine, bbox_inches = risolve problema di dimensione

#plt.savefig("name_of_file", dpi = 200, bbox_inches = "tight")

I tried several things like removing the bounds for the parameters but that gives me enormous errors, renaming the parameters (in case they conflicted with the name of the initial value) and varying the initial values to let the fit be more precise (however initial parameters should be fixed as I wrote them because those are values that were measured with particular devices). (R_tot-R_G) represents the resistance of our RLC circuit, L is the inductance, and C is the capacity. The only unknown value is the amplitude A of the curve. Thank you in advance!