I don't understand something here; maybe someone can shed some light on it.

I am doing image recognition in Python with torch on a real-time camera stream (YOLOv5 2023-4-19 Python-3.9.13 torch-2.0.0+cu117 CUDA:0 (NVIDIA RTX A2000 8GB Laptop GPU, 8192MiB)).

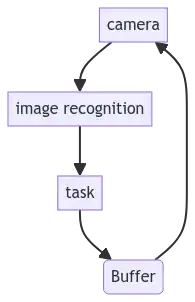

I need a stable frequency, so I implemented something like this:

The buffer lets me set the frequency of my process. For example, if one loop iteration takes 120 ms ± 10 ms, I set the buffer to wait until 200 ms have elapsed.
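The arithmetic behind such a buffer is "sleep for whatever is left of the period". A minimal sketch with the numbers above (120 ms ± 10 ms of work, 200 ms period); the helper name `remaining_sleep` is hypothetical, not from the original code:

```python
def remaining_sleep(elapsed_s: float, period_s: float) -> float:
    """Return how long to sleep so that one iteration lasts period_s seconds.

    If the work already took longer than the period, no sleep is needed.
    """
    return max(0.0, period_s - elapsed_s)


period = 0.200  # 200 ms target period
for elapsed in (0.110, 0.120, 0.130):  # 120 ms +/- 10 ms of processing
    sleep_ms = remaining_sleep(elapsed, period) * 1000
    print(f"work {elapsed * 1000:.0f} ms -> sleep {sleep_ms:.0f} ms")
```

With these numbers the sleep ranges from 70 ms to 90 ms per iteration, so the GPU sits idle for a large fraction of every period.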

As an example (I tried several variations), I implemented my buffer like this:

import timeit

frame_delay = 0.1

main_time_buffer = timeit.default_timer()

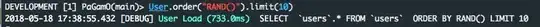

[LOOP]

current = timeit.default_timer()

if current - main_time_buffer < frame_delay:

time.sleep(frame_delay-(current - main_time_buffer))

main_time_buffer = timeit.default_timer()
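A common variant of the loop above schedules each iteration against an absolute deadline (`next_deadline += period_s`) instead of restarting the timer after the sleep, so small oversleeps do not accumulate as drift. This is a minimal sketch, not the code from the question: `run_periodic` and `process_frame` are hypothetical names, where `process_frame` stands in for grabbing a frame and running YOLOv5. It uses `time.perf_counter`, which is what `timeit.default_timer` refers to on Python 3.

```python
import time


def run_periodic(process_frame, period_s, n_iterations):
    """Call process_frame() once per period_s seconds; return each iteration's start time."""
    starts = []
    next_deadline = time.perf_counter()
    for _ in range(n_iterations):
        starts.append(time.perf_counter())
        process_frame()  # hypothetical stand-in: camera capture + YOLOv5 inference
        next_deadline += period_s
        delay = next_deadline - time.perf_counter()
        if delay > 0:
            time.sleep(delay)  # wait until the absolute deadline
        else:
            # The work overran the period: restart the schedule from now
            # instead of running several iterations back to back to catch up.
            next_deadline = time.perf_counter()
    return starts


if __name__ == "__main__":
    # Fake 20 ms of work with a 50 ms period
    starts = run_periodic(lambda: time.sleep(0.02), period_s=0.05, n_iterations=5)
    print([round((b - a) * 1000, 1) for a, b in zip(starts, starts[1:])])
```

The `else` branch is a design choice: after an overrun, the schedule resynchronises rather than firing several iterations in a row to make up for lost time.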

It works, but then something strange happens to my image recognition task.

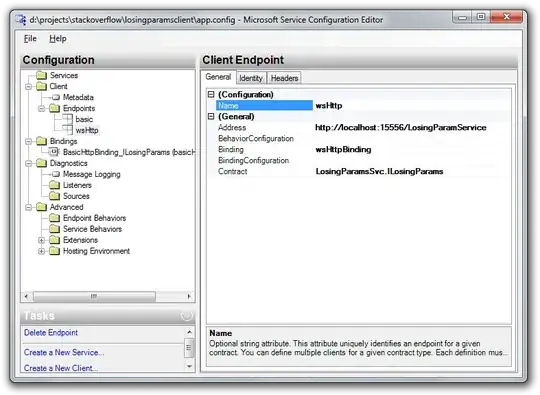

Here is the timing without a buffer:

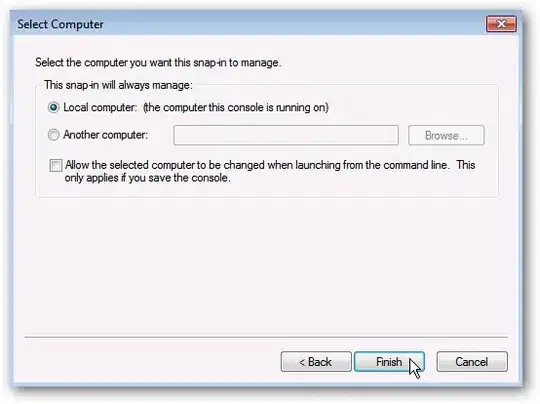

And here is the behavior with a buffer:

The buffer makes the classification slower, and its timing also becomes very erratic. (Note: the buffer period does not match the classification time because the camera also takes time.)
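When comparing classification times with and without the buffer, it may be worth checking that the timer really covers the GPU work. PyTorch launches CUDA kernels asynchronously, so a host-side timer read right after the call can stop before the GPU has finished; calling `torch.cuda.synchronize()` before each timer read avoids this. A minimal sketch, where `timed` is a hypothetical helper and the synchronisation function is passed in so the snippet also runs without a GPU:

```python
import time
from typing import Any, Callable, Optional, Tuple


def timed(fn: Callable[[], Any], sync: Optional[Callable[[], None]] = None) -> Tuple[Any, float]:
    """Run fn() and return (result, elapsed seconds).

    sync, if given (e.g. torch.cuda.synchronize), is called before starting
    and before stopping the clock so that queued GPU work is included.
    """
    if sync is not None:
        sync()  # wait for previously queued GPU work
    start = time.perf_counter()
    result = fn()
    if sync is not None:
        sync()  # wait for the work launched by fn()
    return result, time.perf_counter() - start
```

For example, `results, dt = timed(lambda: model(frame), sync=torch.cuda.synchronize)`, where `model` and `frame` are placeholders for the YOLOv5 model and the current camera image.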

I am very confused by this behavior. Why does the buffer affect the classification time?

Is there some internal frequency in the GPU? I would assume my loop is far too slow to hit it. Am I hitting some kind of resonant state of the GPU?
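One way to probe the "frequency inside the GPU" question empirically is to time the same inference after different idle gaps, with no camera in the loop. If the inference time grows with the gap, the slowdown is tied to how long the GPU sits idle between frames (for example, if the driver lowers clocks while idle; that is a hypothesis to check, e.g. by watching the clocks reported by `nvidia-smi` during the run, not something this sketch proves). `inference_time_vs_idle_gap` and `infer` are hypothetical names; `infer` should run one inference on a fixed image and synchronise with the GPU before returning.

```python
import statistics
import time
from typing import Callable, Dict, Iterable


def inference_time_vs_idle_gap(
    infer: Callable[[], None],
    gaps_s: Iterable[float],
    repeats: int = 10,
) -> Dict[float, float]:
    """Return the median inference time (seconds) measured after each idle gap."""
    infer()  # warm-up run, not measured
    medians = {}
    for gap in gaps_s:
        samples = []
        for _ in range(repeats):
            time.sleep(gap)  # leave the device idle, as the frame buffer does
            start = time.perf_counter()
            infer()  # hypothetical: one synchronised inference on a fixed image
            samples.append(time.perf_counter() - start)
        medians[gap] = statistics.median(samples)
    return medians
```

For example, `inference_time_vs_idle_gap(infer, [0.0, 0.02, 0.05, 0.1])` compares back-to-back inference with gaps in the range the buffer actually sleeps.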

Any suggestions would be appreciated, because I really don't get it.

I need to process images from a camera at a precise frequency, so I implemented a time buffer to keep the frequency stable despite variations in my processing time, which depend on the number of objects detected.