I am working on a project that solves a 2D bin packing problem with reinforcement learning (RL), and I am having trouble training the agent. Its performance does not improve over time, and it often produces invalid or overlapping solutions. I am not sure what I am doing wrong or what I need to change in my approach. I am using Stable-Baselines3. Here is the main training code:

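```python
import os

import torch as th
from stable_baselines3 import PPO

# Area (the custom environment), TensorboardCallback and logdir are defined elsewhere in the project
env = Area(30, 30, {}, 30)
env.reset()

policy_kwargs = dict(
    activation_fn=th.nn.ReLU,
    net_arch=dict(vf=[500, 5000, 5000, 500], pi=[500, 5000, 5000, 500]),
)
model = PPO(
    "MlpPolicy",
    env,
    policy_kwargs=policy_kwargs,
    verbose=1,
    tensorboard_log=logdir,
    learning_rate=0.001,
)

start = 440000     # timesteps already trained in earlier runs
TIMESTEPS = 10000  # timesteps per learn() call
iters = 1

while True:
    model.learn(
        total_timesteps=TIMESTEPS,
        reset_num_timesteps=False,
        tb_log_name="PPO",
        callback=TensorboardCallback(),
    )
    model.env.reset()
    # Save a checkpoint, e.g. model\PP0\Model_450000 on Windows
    model.save(os.path.join("model", "PP0", f"Model_{iters * TIMESTEPS + start}"))
    iters += 1
```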
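For a sense of scale, here is a rough count of the weights in each hidden stack of `net_arch`. It assumes each list entry becomes a fully connected layer with a bias (which, as I understand it, is how SB3's `MlpPolicy` builds the separate `pi` and `vf` stacks) and, hypothetically, 5 rooms, so the flattened observation has 5 × 5 = 25 inputs. The helper `mlp_param_count` is made up for this sketch, and the final action and value output layers are not counted.

```python
from typing import Sequence


def mlp_param_count(input_dim: int, hidden: Sequence[int]) -> int:
    """Weights + biases of a stack of fully connected layers (no output layer)."""
    total = 0
    prev = input_dim
    for width in hidden:
        total += prev * width + width  # weight matrix + bias vector
        prev = width
    return total


# Hypothetical: 5 rooms x 5 values per room = 25 observation features
per_head = mlp_param_count(25, [500, 5000, 5000, 500])
print(f"{per_head:,}")      # 30,023,500
print(f"{2 * per_head:,}")  # pi + vf hidden layers together: 60,047,000
```

Most of that comes from the middle 5000 × 5000 layer, which alone has 25,005,000 parameters per stack.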

These two methods are from the custom environment I created for Stable-Baselines3.

```python
import numpy as np


def return_matrix_of_currenct_layout(self):
    # One row per room: [id, x1, y1, x2, y2]
    matrix = []
    for room in self.room_list:
        matrix.append([room.id, room.x1, room.y1, room.x2, room.y2])
    return np.array(matrix, dtype=np.int32)
```
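To make the observation format concrete, here is a standalone sketch that builds the same `[id, x1, y1, x2, y2]` matrix from a hypothetical `Room` dataclass (the real room class is not shown). It assumes room ids start at 0 and that `Area(30, 30, ...)` describes a 30 × 30 area, and checks the result against the bounds a matching `observation_space` would have to declare. As far as I know, Stable-Baselines3's `check_env` runs a similar containment check against the environment's declared space.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Room:
    # Hypothetical stand-in for the project's room objects
    id: int
    x1: int
    y1: int
    x2: int
    y2: int


def layout_matrix(rooms):
    """One row per room: [id, x1, y1, x2, y2], as int32."""
    return np.array([[r.id, r.x1, r.y1, r.x2, r.y2] for r in rooms], dtype=np.int32)


AREA_W, AREA_H = 30, 30  # assumption: Area(30, 30, ...) is width x height
rooms = [Room(0, 0, 0, 10, 10), Room(1, 12, 3, 20, 9)]
obs = layout_matrix(rooms)
print(obs.shape, obs.dtype)  # (2, 5) int32

# Bounds a matching observation space would need: ids 0..n-1, coordinates 0..30
low = np.zeros((len(rooms), 5), dtype=np.int32)
high = np.array([[len(rooms) - 1, AREA_W, AREA_H, AREA_W, AREA_H]] * len(rooms), dtype=np.int32)
print(bool(np.all((obs >= low) & (obs <= high))))  # True
```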

```python
def step(self, action):
    # action = [room index, x1, y1]
    done = False
    room_1_index = action[0]
    x1 = action[1]
    y1 = action[2]

    # Move the selected room and build the new observation
    self.room_list[room_1_index].update(x1, y1)
    observation = self.return_matrix_of_currenct_layout()
    room_1 = self.room_list[room_1_index]

    reward = 1
    # Penalize overlaps involving the moved room, plus a smaller penalty for all overlaps
    for room_2 in self.room_list:
        if self.check_if_rooms_overlap(room_1, room_2):
            reward -= 0.30
        for room_3 in self.room_list:
            if self.check_if_rooms_overlap(room_2, room_3):
                reward -= 0.030

    # Penalize any invalid room; reward the moved room being valid
    if self.check_if_any_room_invalid():
        reward -= 0.5
    if not self.check_if_room_invalid(room_1):
        reward += 0.30

    # Episode ends with a bonus once every room is valid and nothing overlaps
    if not self.check_if_any_room_invalid():
        if not self.check_if_any_room_overlaps():
            done = True
            reward += 10

    self.reward = reward  # store this step's reward
    # print(self.reward)
    return observation, reward, done, {}
```
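`check_if_rooms_overlap` is not included above. For reference, a standard interior-intersection test for axis-aligned rectangles is sketched below, assuming (x1, y1) and (x2, y2) are opposite corners with x1 < x2 and y1 < y2, and that rooms sharing only an edge do not count as overlapping. `Rect`, `rooms_overlap` and `count_overlapping_pairs` are hypothetical names. With a test of this kind a room always overlaps itself, which matters here because `room_1` is itself an element of `self.room_list` in the loops of `step`.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass
class Rect:
    # Hypothetical stand-in for the project's room objects
    id: int
    x1: int
    y1: int
    x2: int
    y2: int


def rooms_overlap(a: Rect, b: Rect) -> bool:
    """True if the interiors intersect; shared edges alone do not count."""
    return a.x1 < b.x2 and b.x1 < a.x2 and a.y1 < b.y2 and b.y1 < a.y2


def count_overlapping_pairs(rooms) -> int:
    """Count each unordered pair of distinct rooms at most once."""
    return sum(rooms_overlap(a, b) for a, b in combinations(rooms, 2))


rooms = [Rect(0, 0, 0, 10, 10), Rect(1, 5, 5, 15, 15), Rect(2, 10, 0, 20, 5)]
print(count_overlapping_pairs(rooms))  # 1 (only rooms 0 and 1 overlap)
```

Using `itertools.combinations` visits each unordered pair of distinct rooms exactly once, so a room is never compared with itself and no pair is counted twice.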

Am I missing something obvious?

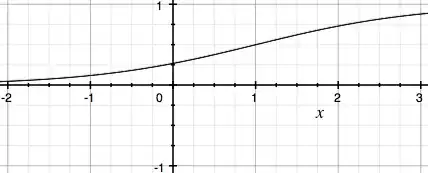

The tensorboard log looks like this:

Thanks in advance!

What I have tried so far:

- Changing the reward function.
- Changing the size of the network (`net_arch`).