The following code reads the same CSV file twice, even though only one action is called.

End-to-end runnable example:

```python
import pandas as pd
import numpy as np

# Write a small CSV: 1,000 rows whose "index" column takes one of 10 random values
df1 = pd.DataFrame(np.arange(1_000).reshape(-1, 1))
df1.index = np.random.choice(range(10), size=1000)
df1.to_csv("./df1.csv", index_label="index")

############################################################################

from pyspark.sql import SparkSession
from pyspark.sql import functions as F
from pyspark.sql.types import StructType, StringType, StructField

# Disable broadcast joins and adaptive query execution (AQE)
spark = (
    SparkSession.builder
    .config("spark.sql.autoBroadcastJoinThreshold", "-1")
    .config("spark.sql.adaptive.enabled", "false")
    .getOrCreate()
)

schema = StructType([
    StructField('index', StringType(), True),
    StructField('0', StringType(), True),
])

df1 = spark.read.csv("./df1.csv", header=True, schema=schema)
df2 = df1.groupby("index").agg(F.mean("0"))
df3 = df1.join(df2, on='index')

df3.explain()
df3.count()  # the only action in the script
```

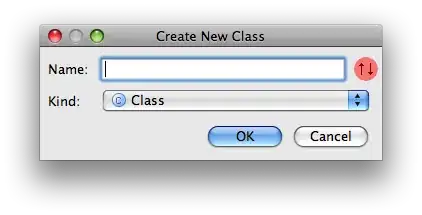

The sql tab in the web UI shows the following:

As you can see, `df1.csv` is read twice. Is this the expected behavior? Why is it happening? I have only one action, so I would expect each part of the pipeline to run only once.
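To check the double read without opening the web UI, a small helper can capture the text that `df3.explain()` prints and count the scan nodes in the physical plan. This is a sketch under assumptions: the helper names `explain_text` and `count_file_scans` are hypothetical, and the counting relies on the physical plan printing each file scan as a line containing `FileScan <format>` (for example `FileScan csv`), which is how recent Spark versions format it but is not a stable API.

```python
import contextlib
import io
import re


def count_file_scans(plan_text: str) -> int:
    """Count file-scan nodes in a printed physical plan.

    Assumption: each scan appears as 'FileScan <format>' (e.g. 'FileScan csv').
    This matches current Spark plan formatting but is not a documented contract.
    """
    return len(re.findall(r"\bFileScan \w+", plan_text))


def explain_text(df) -> str:
    """Return whatever df.explain() prints (PySpark prints the plan to stdout)."""
    buf = io.StringIO()
    with contextlib.redirect_stdout(buf):
        df.explain()
    return buf.getvalue()
```

With the session from the example, `count_file_scans(explain_text(df3))` reports how many `FileScan csv` nodes the plan contains; given what the SQL tab shows, two would be expected here, one under each side of the join.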

I have read the answer here. The question is almost the same, but it uses RDDs, whereas I am using DataFrames through the PySpark API. That answer suggests that the DataFrame API helps avoid multiple file scans, and that this is why the DataFrame API exists in the first place.

However, as it turns out, I am facing exactly the same issue with DataFrames as well. It seems rather odd for Spark, which is celebrated for its efficiency, to be this inefficient (most likely I am just missing something and this is not a valid criticism :))