I'm using `DataprocSubmitJobOperator` in GCP Cloud Composer 2 (Airflow DAGs). The jobs fail on Composer v2.4.3, while the same jobs succeed on the v2.2.5 clusters.

The task log for the failing run is shown below:

```
[2023-05-05, 19:10:59 PDT] {dataproc.py:1953} INFO - Submitting job
[2023-05-05, 19:10:59 PDT] {base.py:71} INFO - Using connection ID 'google_cloud_default' for task execution.
[2023-05-05, 19:10:59 PDT] {credentials_provider.py:323} INFO - Getting connection using `google.auth.default()` since no key file is defined for hook.
[2023-05-05, 19:11:02 PDT] {local_task_job.py:159} INFO - Task exited with return code Negsignal.SIGKILL
```

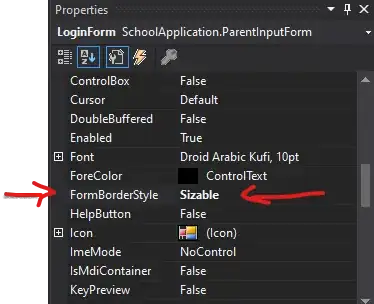

Both clusters are small and have similar configurations (please see the attached screenshot).

Any ideas on how to fix this on v2.4.3?