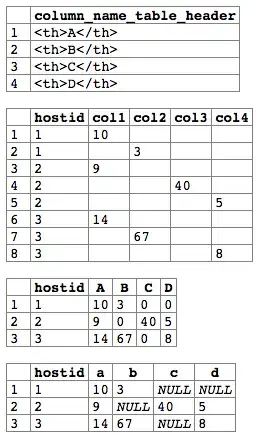

I have two distributions whose probability density is 0 on [-0.05, 0) and is defined by linear interpolation on [0, 1].

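```python
import matplotlib.pyplot as plt
import numpy as np
from scipy import signal

step = 1e-3
x = np.arange(-0.05, 1, step)

# Split the grid into the negative part and the rest. Using x >= 0 (rather
# than x > 0) keeps the two pieces the same total length as x even if the
# grid happens to contain 0 exactly.
x_0minus = x[x < 0]
x_0plus = x[x >= 0]

# Density is 0 on [-0.05, 0) and piecewise linear on [0, 1).
pdf1 = np.concatenate([np.zeros(len(x_0minus)), np.interp(x_0plus, [0, 0.08, 0.28], [0, 80, 0])])
pdf2 = np.concatenate([np.zeros(len(x_0minus)), np.interp(x_0plus, [0, 0.1, 0.3, 0.31], [60, 60, 60, 0])])
```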

We plot these for reference.

```python
plt.scatter(x=x, y=pdf1, label="pdf1")
plt.scatter(x=x, y=pdf2, label="pdf2")
plt.legend()
```

I don't understand why, when I convolve these with `fftconvolve`, the resulting distribution has non-zero probability density at values below 0. I'd like to fix this without extending the grid to [-1, 1], because that would waste computation in my actual use case.
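For reference, assuming the two variables $X$ and $Y$ (with densities $f_1$ and $f_2$, i.e. `pdf1` and `pdf2`) are independent, the density of $Z = X + Y$ is their convolution. Both densities vanish for negative arguments, so the integrand is non-zero only for $0 \le t \le z$ and the exact result is zero for every $z < 0$. On a grid $x_i = x_0 + i\Delta$ (here $x_0 = -0.05$ and $\Delta$ = `step`), the discrete analogue places entry $k$ of the full convolution at $z_k = 2x_0 + k\Delta$:

$$
f_Z(z) = \int_{-\infty}^{\infty} f_1(t)\, f_2(z - t)\, dt = \int_{0}^{z} f_1(t)\, f_2(z - t)\, dt \quad (z \ge 0), \qquad f_Z(z) = 0 \quad (z < 0)
$$

$$
p_Z[k] = \sum_i p_1[i]\, p_2[k - i], \qquad z_k = (x_0 + i\Delta) + \bigl(x_0 + (k - i)\Delta\bigr) = 2x_0 + k\Delta
$$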

```python
res = signal.fftconvolve(pdf1 / pdf1.sum(), pdf2 / pdf2.sum(), 'same')
plt.scatter(x=x, y=res)
```
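A minimal sketch for checking where each output sample lies on the value axis, assuming the same grid and densities as above (`p1`, `p2`, `full`, `z`, `offset`, `k0` and `aligned` are names introduced here only for illustration). It relies on `fftconvolve` in `'same'` mode returning the centred `len(x)` entries of the `'full'` result, so `res[i]` is not located at `x[i]`; the last lines sketch one possible way to slice the `'full'` output back onto `x` without widening the grid.

```python
import numpy as np
from scipy import signal

# Same grid and piecewise-linear densities as above (x >= 0 for the right part).
step = 1e-3
x = np.arange(-0.05, 1, step)
pdf1 = np.where(x >= 0, np.interp(x, [0, 0.08, 0.28], [0, 80, 0]), 0.0)
pdf2 = np.where(x >= 0, np.interp(x, [0, 0.1, 0.3, 0.31], [60, 60, 60, 0]), 0.0)

# Normalise to probability masses per grid cell, as in the question.
p1 = pdf1 / pdf1.sum()
p2 = pdf2 / pdf2.sum()

# 'full' mode: entry k combines p1[i] at x[0] + i*step with p2[k - i] at
# x[0] + (k - i)*step, so it sits at z[k] = 2*x[0] + k*step.
full = signal.fftconvolve(p1, p2, mode="full")
z = 2 * x[0] + step * np.arange(full.size)

# 'same' mode returns full[offset:offset + len(x)] with the centring offset
# below, so same[i] lies at z[offset + i], not at x[i].
same = signal.fftconvolve(p1, p2, mode="same")
offset = (full.size - x.size) // 2
print("same[0] is at z =", z[offset], "but is plotted at x =", x[0])

# One possible fix (an assumption about the intent): slice `full` starting at
# the index where z equals x[0], i.e. k0 = -x[0]/step (50 here), so that
# aligned[i] lies at x[i].
k0 = int(round(-x[0] / step))
aligned = full[k0:k0 + x.size]
```

Slicing this way keeps only the part of the result that falls inside [x[0], x[-1]]. Here $X + Y$ is supported on [0, 0.59] (0.28 + 0.31 at most), so no mass is lost, but for other inputs any mass above x[-1] would be cut off. Dividing `aligned` by `step` turns the cell masses back into a density.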