I'm trying to perform feature selection on some spectroscopy data using scikit-learn's `RFECV` class. I want to use a pipeline with `PLSRegression` as its last step as the estimator for `RFECV`. However, I'm getting different (clearly wrong) results when `PLSRegression` is used inside a pipeline than when it is used on its own. Error details and minimal reproducible examples are below.

- Imports and setup

```python
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
from sklearn.cross_decomposition import PLSRegression
from sklearn.feature_selection import RFECV
from sklearn.pipeline import Pipeline

# Use GitHub data from the NIRPY blog as sample data: NIR spectra used to predict peach Brix values
df = pd.read_csv(r'https://raw.githubusercontent.com/nevernervous78/nirpyresearch/master/data/peach_spectra_brix.csv')

X = df.iloc[:, 1:].values  # spectra, one column per wavelength
y = df.iloc[:, 0].values   # Brix values
```

- First, doing the RFECV with a normal PLSRegression object, to show what I'm expecting.

pls = PLSRegression(n_components=3)

selector = RFECV(pls,

step=1,

cv=5,

verbose=0,

n_jobs=2,

min_features_to_select=(2*np.shape(X)[0]), # Don't remove too many wavelengths

scoring='neg_root_mean_squared_error')

selector = selector.fit(X, y)

fig = plt.figure()

plt.plot(selector.ranking_)

plt.show()

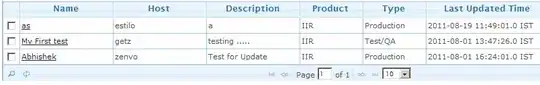

Which yields this plot, with all the rank=1 wavelengths being selected by the algorithm in different regions of the spectrum, as expected:

- However, the real data I'm analyzing will be sent through a pipeline with several steps, the last of which is a `PLSRegression` object. So I tried the same thing with a typical scikit-learn `Pipeline`, with PLS as the only step for simplicity:

```python
pipe = Pipeline([('pls', PLSRegression(n_components=3))])

selector2 = RFECV(pipe,
                  step=1,
                  cv=5,
                  verbose=0,
                  n_jobs=2,
                  min_features_to_select=(2*np.shape(X)[0]),
                  scoring='neg_root_mean_squared_error')
selector2 = selector2.fit(X, y)
```

But this yields an error:

```
ValueError: when `importance_getter=='auto'`, the underlying estimator Pipeline should have `coef_` or `feature_importances_` attribute. Either pass a fitted estimator to feature selector or call fit before calling transform.
```

- So I found a potential workaround that gives my pipeline class a `coef_` attribute, following the question "best-found PCA estimator to be used as the estimator in RFECV":

```python
class Mypipeline(Pipeline):
    @property
    def coef_(self):
        return self._final_estimator.coef_

    @property
    def feature_importances_(self):
        return self._final_estimator.feature_importances_


mypipe = Mypipeline([('pls', PLSRegression(n_components=3))])

selector3 = RFECV(mypipe,
                  step=1,
                  cv=5,
                  verbose=0,
                  n_jobs=-2,
                  min_features_to_select=(2*np.shape(X)[0]),
                  scoring='neg_root_mean_squared_error')
selector3 = selector3.fit(X, y)
```

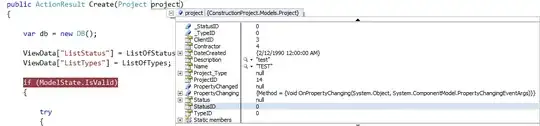

On the bright side, we've avoided the error. On the not so bright side, the wavelengths have been ranked sequentially in descending order so the last 2*np.shape(X)[0] features are used, and the rest ignored. The ranking plot looks like this:

Which is clearly wrong.
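One thing worth checking here (an assumption on my part, not something the plot confirms) is the orientation of `coef_`. As far as I can tell from the scikit-learn source, RFE turns a 2-D `coef_` into one score per feature by squaring it and summing over axis 0, which expects the shape (n_targets, n_features); some older `PLSRegression` releases return `coef_` as (n_features, n_targets). This NumPy-only sketch applies that reduction to both orientations:

```python
import numpy as np

n_features = 6
rng = np.random.default_rng(0)
coef_row = rng.normal(size=(1, n_features))  # (n_targets, n_features)
coef_col = coef_row.T                        # (n_features, n_targets)

# Reduction applied to a 2-D coef_: square, then sum over axis 0
scores_row = (coef_row ** 2).sum(axis=0)
scores_col = (coef_col ** 2).sum(axis=0)

print(scores_row.shape)  # (6,): one score per feature
print(scores_col.shape)  # (1,): a single score for all features
```

If the second case matches what the installed version returns, RFE has only one number to sort, so the elimination order cannot reflect per-wavelength importance, which would be consistent with the sequential ranking above.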

- My next attempt used the `importance_getter` parameter instead, as described in the docs for `RFECV`:

```python
selector4 = RFECV(pipe,
                  step=1,
                  cv=5,
                  verbose=0,
                  n_jobs=-2,
                  min_features_to_select=(2*np.shape(X)[0]),
                  scoring='neg_root_mean_squared_error',
                  importance_getter=pipe.named_steps.pls.coef_)
selector4 = selector4.fit(X, y)
```

But of course `pipe` wasn't fitted yet, so I get another error: `AttributeError: 'PLSRegression' object has no attribute '_coef_'`.

- OK, maybe I need to fit `pipe` before using it with the selector?

```python
pipe = Pipeline([('pls', PLSRegression(n_components=3))])
pipe.fit(X, y)

selector5 = RFECV(pipe,
                  step=1,
                  cv=5,
                  verbose=0,
                  n_jobs=-2,
                  min_features_to_select=(2*np.shape(X)[0]),
                  scoring='neg_root_mean_squared_error',
                  importance_getter=pipe.named_steps.pls.coef_)
selector5 = selector5.fit(X, y)
```

Nope, new error:

```
ValueError: `importance_getter` has to be a string or `callable`
```

- So maybe make `importance_getter` into a function?

```python
def importance_getter(pipe):
    return pipe.named_steps.pls.coef_


pipe = Pipeline([('pls', PLSRegression(n_components=3))])
pipe.fit(X, y)

selector6 = RFECV(pipe,
                  step=1,
                  cv=5,
                  verbose=0,
                  n_jobs=-2,
                  min_features_to_select=(2*np.shape(X)[0]),
                  scoring='neg_root_mean_squared_error',
                  importance_getter=importance_getter(pipe))
selector6 = selector6.fit(X, y)
```

This returns the same error as `selector5`. Long story short, I need help figuring out how to do this properly!

Thanks