Idea

Calculate complete lookup tables that model the warp (for cv::remap).

Competing Ideas

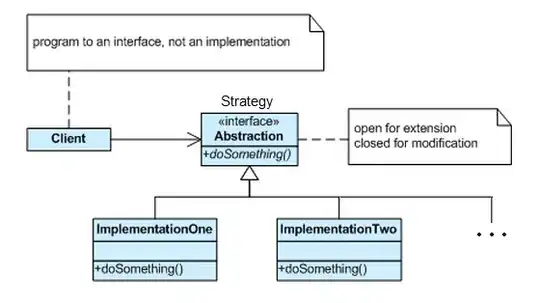

I chose a lookup table. Other approaches might estimate lens distortion coefficients, but those would then also need to estimate or assume a fixed pose for the reference pattern.

I looked into fitting a lens distortion model to matched features. It might be doable, but (1) calibrateCamera didn't like the single image and kept producing junk (it won't take "guesses" for rvec and tvec), and (2) I wasn't inclined to set up the equations for an explicit optimization that would include assumptions about the "3D pose" of the reference pattern, which is how one could form a lens model from this data.

One could set up some gradient descent to optimize lens distortion parameters, given some "experimental setup". I was also not inclined to set that up and come up with good ranges for the delta on each coefficient to use during GD, or to even come up with some kind of learning rate. Getting that to converge seemed more trouble than the optical flow approach below.

Approach

- initialize using homography from feature matching

- refine alignment using optical flow

Results

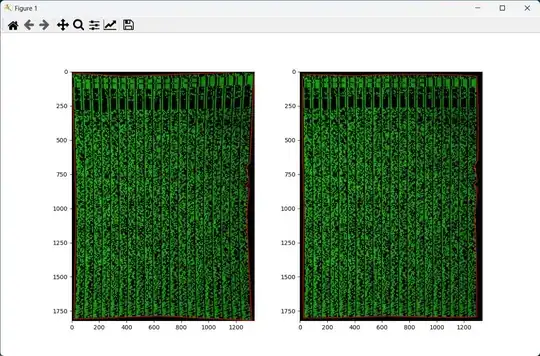

(1) unwarped, brightness adjusted a little (2) per-pixel difference to reference picture

Details

Feature Extraction, Matching, Homography

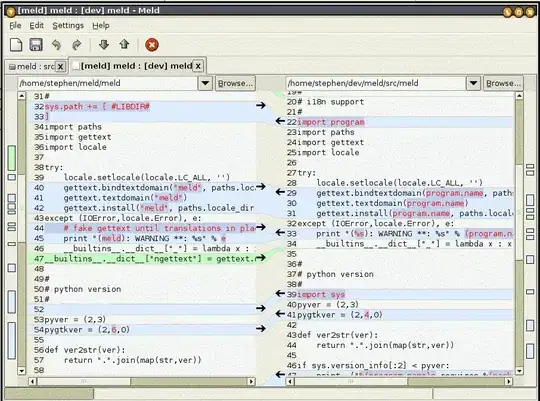

This is the standard example code, with SIFT or AKAZE. For Lowe's ratio test, I chose a stricter ratio (0.3) to reduce the number of matches. That might not make any real difference to findHomography.

Oh, also, I took just the green channel of the warped image and stretched its values between the 0.2 and 0.8 quantile levels.
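That preprocessing could look roughly like this. It assumes OpenCV's BGR channel order (green is index 1) and that values outside the two quantiles simply get clipped; `green_stretched` is a hypothetical helper name.

```python
import numpy as np

def green_stretched(im_bgr, lo_q=0.2, hi_q=0.8):
    """Take the green channel and stretch [lo quantile, hi quantile] to [0, 255]."""
    green = im_bgr[:, :, 1].astype(np.float32)
    lo, hi = np.quantile(green, [lo_q, hi_q])
    # guard against a flat image where both quantiles coincide
    stretched = (green - lo) / max(float(hi - lo), 1e-6)
    # everything below/above the quantiles saturates (assumed behavior)
    return (np.clip(stretched, 0.0, 1.0) * 255).astype(np.uint8)
```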

```
# H mapping ref to warped image
[[ 3.80179  0.02005 -22.76224]
 [-0.08005  3.88306  13.52028]
 [-0.00008  0.00003   1.     ]]
```

You see, in the center it's fairly good already, but only there.

Look-up Tables for cv::remap()

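```python
# identity map: channel 0 holds x, channel 1 holds y
totalmap = np.empty((refheight, refwidth, 2), dtype=np.float32)
totalmap[:,:,1], totalmap[:,:,0] = np.indices((refheight, refwidth))
# send every reference pixel through H to find its position in the warped image
totalmap = cv.perspectiveTransform(totalmap, H)
```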

I also scaled the reference image up by 3 (nearest neighbor) and wrapped that scaling into the homography matrix. H = H @ inv(np.diag((3.0, 3.0, 1.0))). Helps with looking at things.

Applying current lookup tables:

```python
output = cv.remap(src=imwarped, map1=totalmap, map2=None, interpolation=cv.INTER_CUBIC)
```

Zero-th iteration (ref, diff, unwarped/output):

Optical Flow

```python
dis = cv.DISOpticalFlow_create(cv.DISOPTICAL_FLOW_PRESET_MEDIUM)
```

Calculate flow:

```python
flow = dis.calc(I0=imref, I1=output, flow=None)
```

Note that this is calculated using output, which results from using the current lookup table.

Magnitude of the first iteration of flow:

```python
# applies the increment
totalmap += flow
# keeps the warp reasonably smooth
totalmap = cv.stackBlur(totalmap, (31,31))
```

first iteration of flow:

second iteration of flow:

Result

Note that the warp map only has reasonably valid values where the images actually have texture. Outside of the pattern, there is no texture, so the values there don't map sensibly.

Ideally, this cross-section would be a straight diagonal, mapping each X value in the reference space to an increasing X value from the warped image. On the borders, it can't determine this, so you get it leveling off. This is in part happening due to the lowpass (stackBlur), but really, there's no support for any sensible values there.

The lowpass also causes some warping inside of textured areas that are near the borders to untextured areas. This effect can be reduced if the last increment (totalmap += flow) isn't lowpassed, or is lowpassed less severely than all the previous ones.

Result, Caveats

The result is an xymap suitable for cv::remap(). You can save and load it and apply it to whatever images you like. It'll remove the lens distortion and whatever other spatial effects.

Optical flow can do weird things and warp the image in ways that aren't well supported by the data. That is one reason why I apply a lowpass, to keep it kinda straight. I also lowpass both images before passing them to the optical flow calculation. That helps it not get caught on aliasing effects of the resampling.