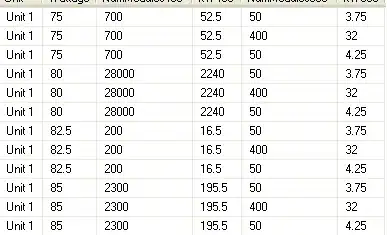

I am trying to understand the job, stage and task details shown in the Spark UI for the following PySpark snippet:

```python
import pandas as pd
from pyspark.sql import SparkSession, functions as F

spark = SparkSession.builder.getOrCreate()

# Disable adaptive query execution so that all 200 shuffle partitions show up
spark.conf.set('spark.sql.adaptive.enabled', 'false')

df = spark.createDataFrame(pd.DataFrame([[1, 2, 3], [4, 5, 6]], columns=['a', 'b', 'c']))

df.groupBy('a').agg({'b': 'sum'}).withColumnRenamed('sum(b)', 'b').orderBy(F.desc('b')).collect()
```
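For reference, here is a plain-Python sketch (no Spark involved) of what the query computes on these two rows, just to make the expected result of `collect()` concrete. It mirrors the logic only, not Spark's execution, and returns plain tuples rather than Spark `Row` objects.

```python
from collections import defaultdict

# Same rows as the pandas DataFrame in the snippet: columns a, b, c
rows = [(1, 2, 3), (4, 5, 6)]

# groupBy('a').agg({'b': 'sum'}): sum column b per distinct value of a
sums = defaultdict(int)
for a, b, _c in rows:
    sums[a] += b

# withColumnRenamed('sum(b)', 'b') + orderBy(F.desc('b')): sort by the sum, descending
result = sorted(sums.items(), key=lambda kv: kv[1], reverse=True)
print(result)  # [(4, 5), (1, 2)]
```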

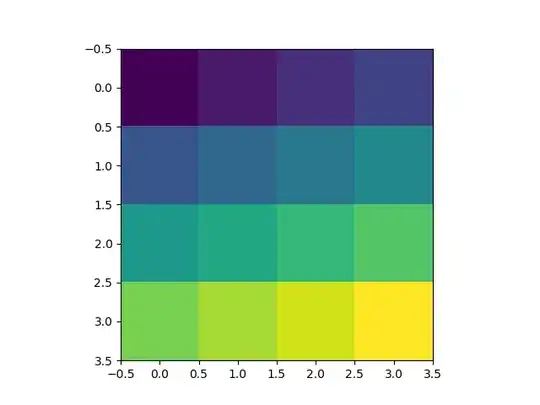

This makes sense: I see two shuffle exchanges, corresponding to the group-by and the order-by. I am guessing that the HashAggregate in the first block (before the first shuffle) is a local aggregation done by each task, like a combiner in MapReduce. Is that correct?
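To make the combiner analogy concrete, here is a pure-Python sketch of two-phase aggregation as I understand it: a partial sum per input partition, a hash shuffle on the grouping key, then a final merge on the reduce side. It only illustrates the idea and is not Spark's HashAggregate implementation; the function names and the input partitioning are hypothetical.

```python
from collections import defaultdict

def partial_aggregate(partition):
    """Map side: sum b per key a within one partition (like a MapReduce combiner)."""
    acc = defaultdict(int)
    for a, b in partition:
        acc[a] += b
    return dict(acc)

def shuffle(partials, num_partitions):
    """Route each (key, partial sum) to a reducer partition by hashing the key."""
    buckets = [[] for _ in range(num_partitions)]
    for part in partials:
        for key, value in part.items():
            buckets[hash(key) % num_partitions].append((key, value))
    return buckets

def final_aggregate(bucket):
    """Reduce side: merge the partial sums that arrived for each key."""
    acc = defaultdict(int)
    for key, value in bucket:
        acc[key] += value
    return dict(acc)

# Hypothetical input: (a, b) pairs spread over two input partitions
input_partitions = [[(1, 2), (4, 5)], [(1, 10)]]
partials = [partial_aggregate(p) for p in input_partitions]
buckets = shuffle(partials, num_partitions=3)

# Each key lands in exactly one bucket, so merging the per-bucket results is safe
result = {}
for bucket in buckets:
    result.update(final_aggregate(bucket))
print(sorted(result.items()))  # [(1, 12), (4, 5)]
```

The point of the partial step is that only one record per key per input partition crosses the shuffle, instead of every input row.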

For the job- and stage-level details, I have several questions:

- Why are there two jobs when there is only one action, `collect()`?

- Stages 5 and 6 (job 3) appear to be the transformations for the group-by. If that is the case, I can't make sense of stages 7, 8 and 9 (job 4), since I see two more exchanges there. Am I misunderstanding something?

- I was also expecting the output of stage 6 to be fed into stage 8, rather than stage 7 being the predecessor of stage 8. Also, what is the role of `parallelize->mapPartitions...->` in stage 7? Which transformation in the PySpark snippet does it correspond to?

- Is it being skipped because the corresponding data is found in the cache?
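One guess I have about the extra job (which may be wrong): `orderBy` needs to range-partition the rows, and choosing the range boundaries requires sampling the data first, which could show up as its own job before the shuffle. The pure-Python sketch below illustrates that idea only: one pass samples keys to compute split points, and a second pass routes each key to a partition. It is not Spark's range partitioner; the function names, sample size and seed are hypothetical, and it sorts ascending for simplicity while the query sorts descending.

```python
import bisect
import random

def compute_boundaries(keys, num_partitions, sample_size, seed=0):
    """Pass 1: sample keys and pick num_partitions - 1 split points."""
    rng = random.Random(seed)
    sample = sorted(rng.sample(keys, min(sample_size, len(keys))))
    # Take evenly spaced sample values as upper bounds of the first n - 1 partitions
    step = len(sample) / num_partitions
    return [sample[int(step * i)] for i in range(1, num_partitions)]

def range_partition(keys, boundaries):
    """Pass 2: route each key to the partition whose range contains it."""
    parts = [[] for _ in range(len(boundaries) + 1)]
    for k in keys:
        parts[bisect.bisect_left(boundaries, k)].append(k)
    return parts

keys = list(range(100))
random.Random(1).shuffle(keys)
boundaries = compute_boundaries(keys, num_partitions=4, sample_size=20)
parts = range_partition(keys, boundaries)

# Sorting each partition locally and concatenating gives a globally sorted result
globally_sorted = [k for p in parts for k in sorted(p)]
print(globally_sorted == sorted(keys))  # True
```

Because the partitions cover non-overlapping, increasing key ranges, each reducer can sort its own partition independently and the results simply concatenate.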

Any help will be really appreciated.