I'm new to Azure and Azure ML Studio. I'm currently trying to move a data processing pipeline that I built locally to the cloud for a project I've been working on. I'd like to know whether the "flow" I'm following is the right approach and, if so, how to solve the problem I explain below.

I got to the current state of the work through the documentation and some good old trial and error. My main problem is that I have created a job with a custom pipeline from a Python script through the Azure ML UI, but I don't know how to save the pipeline's output file to a specific location instead of the one provided by the system.

Here are the steps I've followed:

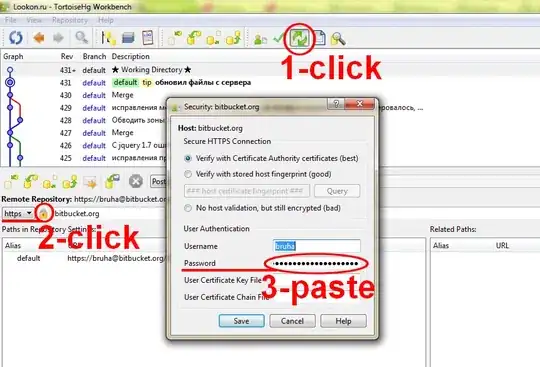

I set up a job in Azure ML by clicking on "create job(preview)".

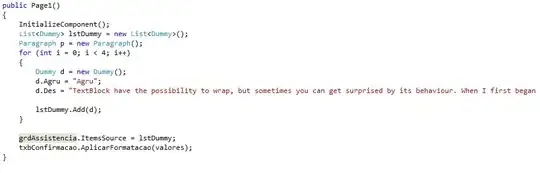

Here I select a compute instance and a custom environment that I built from my local experiment. Then, as shown in the picture below, I upload the files that make up my pipeline from a local folder:

Then I define the input file for my script "main.py" using the "create input" field, as follows:

...and I do the same thing with the output section, creating the "path" to the output file:

Everything works up to this point: I can read the input file and I can save the output file. The main problem is that the output path points to a blob storage that is not the one I'd like to use. I'd like to save the file to the same blob storage and container that I read the input file from, but when I create the output in step 4 I cannot specify the location; it is built automatically by Azure ML.
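For context, a script wired up like this usually receives the input and output locations as command-line arguments and simply reads from one and writes to the other. The following is only a minimal sketch of that pattern, not the actual "main.py": the argument names `--input_path` and `--output_path`, the helper names `parse_args` and `process`, and the upper-casing step (a stand-in for the real processing) are all hypothetical.

```python
import argparse
from pathlib import Path


def parse_args(argv=None):
    """Parse the two paths that the job passes on the command line.

    The argument names are hypothetical; they must match whatever
    names are used in the job's command.
    """
    parser = argparse.ArgumentParser()
    parser.add_argument("--input_path", required=True)
    parser.add_argument("--output_path", required=True)
    return parser.parse_args(argv)


def process(input_path, output_path):
    """Read the input file, transform it, and write the output file."""
    src = Path(input_path)
    dst = Path(output_path)

    # Make sure the destination folder exists before writing.
    dst.parent.mkdir(parents=True, exist_ok=True)

    text = src.read_text(encoding="utf-8")
    # Placeholder transformation standing in for the real pipeline logic.
    dst.write_text(text.upper(), encoding="utf-8")
    return dst
```

At the bottom of the script this would be called as `args = parse_args()` followed by `process(args.input_path, args.output_path)`. Note that the script itself only sees a path; where that path physically lives (which storage and container) is decided by how the job's output is configured, which is exactly the part I cannot control from the UI.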

Can someone tell me how to handle this output issue so that I can save the file where I want? Is it possible to do this through the Azure ML UI, or do I have to add some code to my Python script? If the latter, can you provide such code?

Thank you so much for your time. I tried to be as complete as possible in the explanation.

Have a nice day