Suppose I have two classes of data and I am using sklearn for classification:

```python
from sklearn.model_selection import StratifiedKFold, cross_validate


def cv_classif_wrapper(classifier, X, y, n_splits=5, random_state=42, verbose=0):
    """Cross-validation wrapper: returns the per-fold scores from cross_validate."""
    # Shuffled, stratified folds keep the class ratio roughly equal in every fold
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True,
                         random_state=random_state)

    scores = cross_validate(classifier, X, y, cv=cv, scoring=[
        'f1_weighted', 'accuracy', 'recall_weighted', 'precision_weighted'])

    if verbose:
        # Mean over folds, +/- two standard deviations across folds
        print("=====================")
        print(f"Accuracy: {scores['test_accuracy'].mean():.3f} (+/- {scores['test_accuracy'].std()*2:.3f})")
        print(f"Recall: {scores['test_recall_weighted'].mean():.3f} (+/- {scores['test_recall_weighted'].std()*2:.3f})")
        print(f"Precision: {scores['test_precision_weighted'].mean():.3f} (+/- {scores['test_precision_weighted'].std()*2:.3f})")
        print(f"F1: {scores['test_f1_weighted'].mean():.3f} (+/- {scores['test_f1_weighted'].std()*2:.3f})")

    return scores
```

and I call it with:

```python
from sklearn.linear_model import LogisticRegression

scores = cv_classif_wrapper(LogisticRegression(), Xs, y0, n_splits=5, verbose=1)
```

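Then I calculate the confusion matrix with this:

```python
import sklearn.metrics
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict

model = LogisticRegression(random_state=42)
# Out-of-fold predictions for every sample
y_pred = cross_val_predict(model, Xs, y0, cv=5)
cm = sklearn.metrics.confusion_matrix(y0, y_pred)
```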
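One detail worth checking when comparing the two results: with an integer `cv=5`, `cross_val_predict` uses an unshuffled `StratifiedKFold`, whereas the wrapper uses `shuffle=True, random_state=42`, so the confusion matrix and the reported scores come from different splits. Also, `cross_val_predict` pools all out-of-fold predictions, so metrics computed from it are not the same quantity as the per-fold averages from `cross_validate`. A minimal sketch that reuses one splitter and prints per-class metrics with `classification_report` follows; the data from `make_classification` is a synthetic stand-in shaped roughly like 35 vs 364, not the asker's `Xs`/`y0`.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report, confusion_matrix
from sklearn.model_selection import StratifiedKFold, cross_val_predict

# Synthetic stand-in data (NOT the question's data): ~35/399 in class 0
X, y = make_classification(n_samples=399, weights=[35 / 399], random_state=0)

# Same splitter as the wrapper, so predictions come from the same folds
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
y_pred = cross_val_predict(LogisticRegression(random_state=42), X, y, cv=cv)

cm = confusion_matrix(y, y_pred)  # rows = true class, columns = predicted
print(cm)
# Per-class precision/recall/F1 plus macro and weighted averages
print(classification_report(y, y_pred, digits=3))
```

Reading the per-class rows of the report, rather than only the weighted average, shows directly how well the minority class is being recovered.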

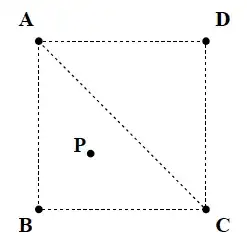

The question is I am getting 0.95 for F1 score but the confusion matrix is

Is this consistent with F1 score=0.95? Where is wrong if there is?

Note that there are 35 subjects in class 0 and 364 in class 1.
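A quick way to see whether the two results can coexist is to compute the weighted F1 by hand: each class's F1 is weighted by its support, so with 35 vs 364 subjects class 1 carries about 91% of the weight. The sketch below uses a made-up confusion matrix (the counts `[[20, 15], [5, 359]]` are hypothetical, not read from the image) with the question's class sizes, and the helper `per_class_f1` is an illustrative name, not a library function.

```python
def per_class_f1(cm):
    """F1 for each class from a square confusion matrix (rows = true, cols = predicted)."""
    n = len(cm)
    scores = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                        # truly k, predicted as another class
        fp = sum(cm[i][k] for i in range(n)) - tp   # predicted k, truly another class
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return scores


# Hypothetical counts with the question's class sizes (35 and 364); illustration only
cm = [[20, 15],
      [5, 359]]

f1 = per_class_f1(cm)
support = [sum(row) for row in cm]
weighted_f1 = sum(f * s for f, s in zip(f1, support)) / sum(support)
macro_f1 = sum(f1) / len(f1)
accuracy = sum(cm[k][k] for k in range(len(cm))) / sum(support)

print(f"per-class F1: {[round(f, 3) for f in f1]}")
print(f"weighted F1 = {weighted_f1:.3f}, macro F1 = {macro_f1:.3f}, accuracy = {accuracy:.3f}")
```

With these invented counts, class 0 recall is only 20/35 ≈ 0.57 and its F1 is about 0.67, yet the weighted F1 is roughly 0.946 and the accuracy about 0.950, while the macro F1 drops to about 0.82. So a weighted F1 near 0.95 does not by itself rule out a confusion matrix in which many class 0 subjects are misclassified.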

The wrapper's verbose output is:

```
Accuracy: 0.952 (+/- 0.051)
Recall: 0.952 (+/- 0.051)
Precision: 0.948 (+/- 0.062)
F1: 0.947 (+/- 0.059)
```
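All four reported numbers are either accuracy or support-weighted averages, so they are dominated by class 1. In fact weighted recall is always identical to accuracy (each class recall TP_k/n_k is weighted by n_k/N, which sums to the total number of correct predictions over N), which is why those two lines match exactly. A possible extension of the wrapper's `scoring` argument, sketched below, also tracks metrics that treat the classes equally: the built-in `f1_macro` and `balanced_accuracy` scorers, plus a class 0 F1 built with `make_scorer(f1_score, pos_label=0)`. The data is again a synthetic stand-in, and the key `f1_class0` is just an illustrative label.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import f1_score, make_scorer
from sklearn.model_selection import StratifiedKFold, cross_validate

# Synthetic stand-in data (NOT the question's data): ~35/399 in class 0
X, y = make_classification(n_samples=399, weights=[35 / 399], random_state=0)
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)

scoring = {
    "f1_weighted": "f1_weighted",
    "f1_macro": "f1_macro",                            # unweighted mean of per-class F1
    "balanced_accuracy": "balanced_accuracy",          # mean of per-class recall
    "f1_class0": make_scorer(f1_score, pos_label=0),   # F1 of the minority class only
}
scores = cross_validate(LogisticRegression(), X, y, cv=cv, scoring=scoring)

for name in scoring:
    vals = scores[f"test_{name}"]
    print(f"{name}: {vals.mean():.3f} (+/- {vals.std() * 2:.3f})")
```

A large gap between `f1_weighted` and `f1_macro` (or `f1_class0`) is the usual sign that the minority class is being handled much worse than the headline number suggests.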