I have a kusto query which returns all user's url, I need to take the userId from the url and only count the unique value (by userId).

What I already made is: using

| project userIdSection = split(parse_url(url).Path, "/")[-1] in the query to extract userId out. But there are a lot of duplicates, how can I only count the unique user Ids?

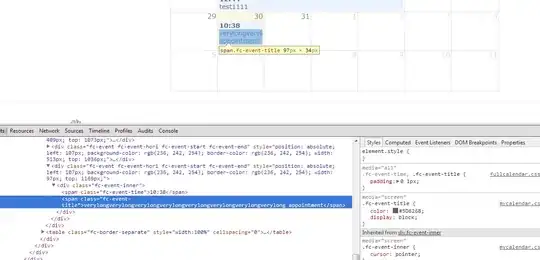

when I apply distinct on it, it throws the error:

union isfuzzy=true requests | where cloud_RoleName contains "my-app" | project timestamp, id, name, userIdSection = split(parse_url(url).Path, "/")[-1], success | distinct userIdSection

What I expected is, to only get the unique userId from the url section per user.

Example, currently I can only get a list of duplicate request per user who made 200+ requests.

With Peter's help, now I get a specific user visited more than 200 unique requests), but how to get all user whoever visited more than 200 unique requests? (userIdSection is the unique not customDimensions.userId.