I have a project with one API (httpTrigger) function and one queueTrigger.

While jobs are being processed by the queueTrigger, the API becomes slow or unavailable. It looks as if the function app only handles one invocation at a time.

Not sure why. Must be a setting somewhere.

host.json:

    {
      "version": "2.0",
      "extensions": {
        "queues": {
          "batchSize": 1,
          "maxDequeueCount": 2,
          "newBatchThreshold": 0,
          "visibilityTimeout": "00:01:00"
        }
      },
      "logging": ...
      "extensionBundle": ...
      "functionTimeout": "00:10:00"
    }

batchSize is set to 1 because I only want one job to be processed at a time. But that should not affect my API, should it? As far as I understand, the setting only applies to the queue trigger.
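To spell out how I read these two settings: as far as I understand the host.json documentation, the number of queue messages a single instance processes concurrently per function is batchSize plus newBatchThreshold. The helper below is a made-up name for illustration only, not part of any Azure SDK.

```javascript
// Hypothetical helper (not an Azure API): my reading of the documented rule
// that per-instance queue concurrency is batchSize + newBatchThreshold.
function maxConcurrentQueueMessages({ batchSize, newBatchThreshold }) {
  return batchSize + newBatchThreshold;
}

// Values copied from the "queues" section of my host.json.
const queues = { batchSize: 1, newBatchThreshold: 0 };

console.log(maxConcurrentQueueMessages(queues)); // 1
```

So with my values I expect at most one queue message in flight per instance. If the app scales out to several instances, each instance would get its own limit of one.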

function.json for the API:

    {
      "bindings": [
        {
          "authLevel": "function",
          "type": "httpTrigger",
          "direction": "in",
          "name": "req",
          "route": "trpc/{*segments}"
        },
        {
          "type": "http",
          "direction": "out",
          "name": "$return"
        }
      ],
      "scriptFile": "../dist/api/index.js"
    }

function.json for the queueTrigger:

    {
      "bindings": [
        {
          "name": "import",
          "type": "queueTrigger",
          "direction": "in",
          "queueName": "process-job",
          "connection": "AZURE_STORAGE_CONNECTION_STRING"
        },
        {
          "type": "queue",
          "direction": "out",
          "name": "$return",
          "queueName": "process-job",
          "connection": "AZURE_STORAGE_CONNECTION_STRING"
        }
      ],
      "scriptFile": "../dist/process-job.js"
    }

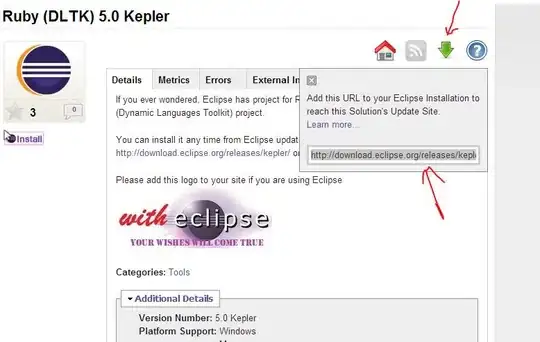

Other settings in Azure that may be relevant:

FUNCTIONS_WORKER_PROCESS_COUNT = 4

Scale-out options in Azure (sorry about the Swedish):

Update: I tried raising the maximum burst to 8, and I also tried switching to dynamicConcurrency.

Neither helped.

My feeling is that the jobs occupy 100% of the CPU, so the API becomes slow or times out regardless of the concurrency settings.
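To make that suspicion concrete, here is a minimal sketch (not my actual job code) of how a CPU-bound job could be split into chunks that yield to the Node.js event loop in between. `crunch`, `processJobInChunks`, and `yieldToEventLoop` are hypothetical names, and whether a blocked event loop is really what starves the API is an assumption I have not verified.

```javascript
// Hypothetical helper: resolves on the next event-loop iteration, so callbacks
// that are already queued (for example an incoming HTTP request) get a turn.
const yieldToEventLoop = () => new Promise((resolve) => setImmediate(resolve));

// Hypothetical stand-in for the CPU-heavy part of a job.
// While this loop runs, nothing else can run in the same Node.js process.
function crunch(iterations) {
  let acc = 0;
  for (let i = 0; i < iterations; i++) {
    acc += i % 7;
  }
  return acc;
}

// Runs the work in chunks and yields between chunks instead of holding the
// event loop for the whole job.
async function processJobInChunks(chunks, iterationsPerChunk) {
  let total = 0;
  for (let c = 0; c < chunks; c++) {
    total += crunch(iterationsPerChunk);
    await yieldToEventLoop();
  }
  return total;
}
```

This only helps if the job and the API invocation end up in the same worker process. If the job saturates every core on the instance, yielding would not be enough and the job would have to move somewhere else.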