Hi, I have written an algorithm to measure the time a wave takes from the beginning of one trough to the next trough, so that I can calculate the duration of each separate wave. However, the `through` function keeps returning some peaks.

The algorithm:

```python
import pandas as pd
import numpy as np
import peakutils

# Read the data from the CSV file
df = pd.read_csv('test.csv')

# Convert the first column to datetime format
df['Column1'] = pd.to_datetime(df['Column1'])

# Convert the second column to numeric type
df['Column2'] = df['Column2'].astype(int)

def through(arr, n, num, i, j):
    # If num is smaller than the element
    # on the left (if exists)
    if (i >= 0 and arr[i] < num):
        return False

    # If num is smaller than the element
    # on the right (if exists)
    if (j < n and arr[j] < num):
        return False
    return True

# Function that returns true if num is
# smaller than both arr[i] and arr[j]
def isTrough(arr, n, num, i, j):
    # If num is greater than the element
    # on the left (if exists)
    if (i >= 0 and arr[i] < num):
        return False

    # If num is greater than the element
    # on the right (if exists)
    if (j < n and arr[j] < num):
        return False
    return True

def printPeaksTroughs(arr, n):
    print("Peaks : ", end = "")

    # For every element
    for i in range(n):
        # If the current element is a peak
        if (through(arr, n, arr[i], i - 1, i + 1)):
            # print(arr[i], end = " ")
            peaks_info = np.vstack((arr[i],arr2[i])).T
            print(peaks_info)
    print()

    print("Troughs : ", end = "")

    # For every element
    for i in range(n):
        # If the current element is a trough
        if (isTrough(arr, n, arr[i], i - 1, i + 1)):
            print(arr[i], end = " ")

# Driver code
arr = df['Column2']
arr2=df['Column1']
# arr = [5, 10, 5, 7, 4, 3, 5]
# arr2 = [1,2,3,4,5,6,7]
n = len(arr)

printPeaksTroughs(arr, n)
```

This is the result I am getting:

```
[[87 Timestamp('2023-03-14 14:20:08')]]
[[86 Timestamp('2023-03-14 14:22:23')]]
[[86 Timestamp('2023-03-14 14:23:30')]]
[[86 Timestamp('2023-03-14 14:24:38')]]
[[262 Timestamp('2023-03-14 14:34:46')]]
[[262 Timestamp('2023-03-14 14:35:54')]]
[[91 Timestamp('2023-03-14 14:56:09')]]
[[262 Timestamp('2023-03-14 15:07:25')]]
[[262 Timestamp('2023-03-14 15:08:32')]]
[[262 Timestamp('2023-03-14 15:09:40')]]
[[89 Timestamp('2023-03-14 15:31:03')]]
[[86 Timestamp('2023-03-14 15:35:33')]]
[[86 Timestamp('2023-03-14 15:36:41')]]
[[86 Timestamp('2023-03-14 15:37:49')]]
[[262 Timestamp('2023-03-14 15:49:04')]]
[[95 Timestamp('2023-03-14 16:07:05')]]
[[262 Timestamp('2023-03-14 16:17:13')]]
```

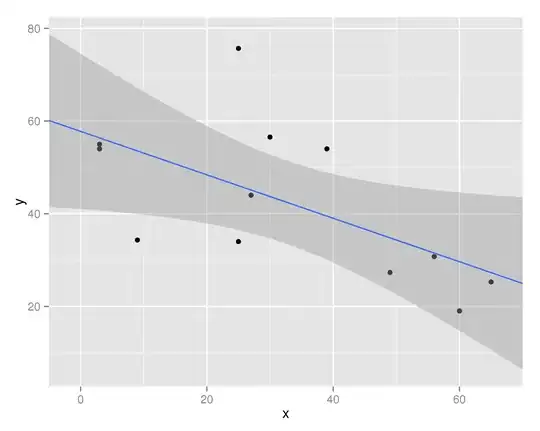

As you can see, it sometimes picks up the value 262, which is the highest value in the data set. In the graph of the data, the same algorithm also marks these peaks as troughs.

Here the green arrows show the troughs and the red ones show the peaks.
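A minimal sketch of what seems to be happening, under two assumptions: that `astype(int)` truncates readings such as 262.18 and 262.29 to the same value 262, and that the `<` test (which `through` and `isTrough` both use) only rejects a point when a neighbour is strictly lower. On a flat top no neighbour is strictly lower, so the interior points pass. The names `raw`, `as_int` and `flagged` are illustrative only.

```python
def isTrough(arr, n, num, i, j):
    # Same test as in the code above: reject only if a neighbour is strictly lower.
    if i >= 0 and arr[i] < num:
        return False
    if j < n and arr[j] < num:
        return False
    return True

# Readings around the first flat top (14:32:31 to 14:38:09 in the data below).
raw = [253.29, 262.18, 262.29, 262.29, 262.29, 260.83]

# Mimics the cast to int: fractional parts are dropped, so the top becomes flat.
as_int = [int(v) for v in raw]

# Check interior points only, so the two ends of this short slice are ignored.
n = len(as_int)
flagged = [i for i in range(1, n - 1)
           if isTrough(as_int, n, as_int[i], i - 1, i + 1)]
print(as_int)
print(flagged)
```

The flagged positions correspond to 14:34:46 and 14:35:54, which are the two 262 rows in the result above.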

For example, I want the data from the first trough to the second trough to be treated as the first wave, and the trough at the end of the first wave to be regarded as the start of the second wave.
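The trough-to-trough splitting described above could be sketched roughly as follows. This is only one possible approach, not a tested solution for the full file: it assumes the values are kept as floats, treats a run of equal values as a single trough only when both sides of the run are strictly higher, and ignores runs that touch either end of the data. `find_troughs` and `wave_durations` are hypothetical helper names.

```python
from datetime import datetime

def find_troughs(values):
    """Return the index where each local minimum (possibly a flat run) starts."""
    troughs = []
    n = len(values)
    i = 1
    while i < n - 1:
        if values[i] < values[i - 1]:
            # Walk to the end of any flat run that starts at i.
            j = i
            while j + 1 < n and values[j + 1] == values[i]:
                j += 1
            # Count it as a trough only if the signal rises after the run.
            if j + 1 < n and values[j + 1] > values[i]:
                troughs.append(i)
            i = j + 1
        else:
            i += 1
    return troughs

def wave_durations(times, values):
    """Pair consecutive troughs into (start, end, duration) waves."""
    idx = find_troughs(values)
    return [(times[a], times[b], times[b] - times[a])
            for a, b in zip(idx, idx[1:])]

# A thinned-out subset of the rows posted below (floats kept, no cast to int).
rows = [
    ("2023-03-14 14:21:15", 87.13),
    ("2023-03-14 14:22:23", 86.68),
    ("2023-03-14 14:23:30", 86.23),
    ("2023-03-14 14:24:38", 86.23),
    ("2023-03-14 14:25:45", 108.61),
    ("2023-03-14 14:33:39", 262.18),
    ("2023-03-14 14:34:46", 262.29),
    ("2023-03-14 14:35:54", 262.29),
    ("2023-03-14 14:38:09", 260.83),
    ("2023-03-14 14:55:02", 92.75),
    ("2023-03-14 14:56:09", 91.18),
    ("2023-03-14 14:57:17", 97.70),
]
times = [datetime.strptime(t, "%Y-%m-%d %H:%M:%S") for t, _ in rows]
values = [v for _, v in rows]

waves = wave_durations(times, values)
for start, end, duration in waves:
    print(start, "->", end, "lasted", duration)
```

On this subset the troughs fall at 14:23:30 and 14:56:09, giving one wave of 32 minutes 39 seconds, and the flat top at 262.29 is skipped. Noisier data would probably need smoothing or a prominence threshold first, for instance `scipy.signal.find_peaks` applied to the negated signal, which is not used here.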

This is the data in written form, since I can't upload the CSV file. These are only the first few peaks:

```
Column1,Column2
2023-03-14 14:00:59.0,195.80
2023-03-14 14:02:06.0,174.20
2023-03-14 14:03:14.0,156.76
2023-03-14 14:04:21.0,142.36
2023-03-14 14:05:29.0,131.00
2023-03-14 14:06:37.0,122.00
2023-03-14 14:07:44.0,114.91
2023-03-14 14:08:52.0,109.18
2023-03-14 14:10:00.0,104.56
2023-03-14 14:11:07.0,100.74
2023-03-14 14:12:15.0,97.93
2023-03-14 14:13:22.0,95.45
2023-03-14 14:14:30.0,93.43
2023-03-14 14:15:37.0,91.85
2023-03-14 14:16:45.0,90.73
2023-03-14 14:17:53.0,89.49
2023-03-14 14:19:00.0,88.59
2023-03-14 14:20:08.0,87.91
2023-03-14 14:21:15.0,87.13
2023-03-14 14:22:23.0,86.68
2023-03-14 14:23:30.0,86.23
2023-03-14 14:24:38.0,86.23
2023-03-14 14:25:45.0,108.61
2023-03-14 14:26:53.0,142.70
2023-03-14 14:28:01.0,175.89
2023-03-14 14:29:08.0,203.79
2023-03-14 14:30:16.0,225.84
2023-03-14 14:31:23.0,241.25
2023-03-14 14:32:31.0,253.29
2023-03-14 14:33:39.0,262.18
2023-03-14 14:34:46.0,262.29
2023-03-14 14:35:54.0,262.29
2023-03-14 14:37:01.0,262.29
2023-03-14 14:38:09.0,260.83
2023-03-14 14:39:16.0,235.51
2023-03-14 14:40:24.0,208.85
2023-03-14 14:41:31.0,185.45
2023-03-14 14:42:39.0,166.33
2023-03-14 14:43:46.0,150.35
2023-03-14 14:44:54.0,137.41
2023-03-14 14:46:01.0,127.06
2023-03-14 14:47:09.0,118.96
2023-03-14 14:48:17.0,112.55
2023-03-14 14:49:24.0,107.15
2023-03-14 14:50:32.0,103.10
2023-03-14 14:51:39.0,99.61
2023-03-14 14:52:47.0,96.80
2023-03-14 14:53:54.0,94.55
2023-03-14 14:55:02.0,92.75
2023-03-14 14:56:09.0,91.18
2023-03-14 14:57:17.0,97.70
2023-03-14 14:58:24.0,127.06
2023-03-14 14:59:32.0,161.04
2023-03-14 15:00:39.0,190.85
2023-03-14 15:01:47.0,214.81
2023-03-14 15:02:55.0,233.38
2023-03-14 15:04:02.0,247.21
2023-03-14 15:05:10.0,256.66
2023-03-14 15:06:17.0,262.29
2023-03-14 15:07:25.0,262.29
2023-03-14 15:08:32.0,262.29
2023-03-14 15:09:40.0,262.29
2023-03-14 15:10:47.0,262.29
2023-03-14 15:11:55.0,246.31
2023-03-14 15:13:02.0,219.65
2023-03-14 15:14:10.0,194.56
2023-03-14 15:15:17.0,173.53
2023-03-14 15:16:25.0,156.43
2023-03-14 15:17:33.0,142.03
2023-03-14 15:18:40.0,130.78
2023-03-14 15:19:48.0,121.89
2023-03-14 15:20:55.0,114.80
2023-03-14 15:22:03.0,109.18
2023-03-14 15:23:10.0,104.68
2023-03-14 15:24:18.0,101.19
2023-03-14 15:25:25.0,98.26
2023-03-14 15:26:33.0,95.90
2023-03-14 15:27:41.0,93.88
2023-03-14 15:28:48.0,92.41
2023-03-14 15:29:56.0,91.06
2023-03-14 15:31:03.0,89.94
2023-03-14 15:32:11.0,89.04
2023-03-14 15:33:18.0,88.03
2023-03-14 15:34:26.0,87.35
2023-03-14 15:35:33.0,86.79
2023-03-14 15:36:41.0,86.34
2023-03-14 15:37:49.0,86.34
2023-03-14 15:38:56.0,108.39
2023-03-14 15:40:04.0,142.59
2023-03-14 15:41:11.0,175.33
2023-03-14 15:42:19.0,203.00
2023-03-14 15:43:26.0,224.94
2023-03-14 15:44:34.0,240.91
2023-03-14 15:45:41.0,252.39
2023-03-14 15:46:49.0,260.71
2023-03-14 15:47:56.0,262.29
2023-03-14 15:49:04.0,262.29
2023-03-14 15:50:11.0,262.29
2023-03-14 15:51:19.0,259.14
2023-03-14 15:52:26.0,233.60
2023-03-14 15:53:34.0,207.39
2023-03-14 15:54:41.0,183.99
2023-03-14 15:55:49.0,164.98
2023-03-14 15:56:57.0,149.00
2023-03-14 15:58:04.0,136.06
2023-03-14 15:59:12.0,125.94
2023-03-14 16:00:19.0,117.84
2023-03-14 16:01:27.0,111.43
2023-03-14 16:02:35.0,106.25
2023-03-14 16:03:42.0,102.31
2023-03-14 16:04:50.0,98.94
2023-03-14 16:05:57.0,96.35
2023-03-14 16:07:05.0,95.34
```