I performed a Mantel regression test between two distance matrices, using residuals to control for a third variable. The Mantel test shows a significant relationship between my two variables (residualsA vs residualsB). However, when I plot residualsA vs residualsB, it is not entirely clear whether the relationship is indeed linear or whether it is convex (U-shape).

How can I test which one (linear or convex) fits the pattern best?

I assume the convex means a quadratic function? Or exponential?

Here is a reproducible example using the iris data.

data(iris)

library("ggplot2")

library("reshape2")

library("vegan")

# distances

dist.SepL <- as.matrix(vegdist(iris$Sepal.Length))

dist.PetL <- as.matrix(vegdist(iris$Petal.Length))

dist.SepW <- as.matrix(vegdist(iris$Sepal.Width))

# A.

# regress Sepal.Length distance against Sepal.Width distance.

vegan::mantel(xdis=dist.SepW, ydis=dist.SepL, method="spearman", permutations=99)

#--> Mantel statistic r: 0.03639, Significance: 0.11

# save residuals.

resA <- mantel.residuals(dist.SepL, dist.SepW)

resA.df <- as.data.frame(melt(as.matrix(resA$residuals))) #residuals as dataframe

# B.

# regress Petal.Length distance against Sepal.Width distance.

vegan::mantel(xdis=dist.SepW, ydis=dist.PetL, method="spearman", permutations=99)

#-->Mantel statistic r: 0.2179, Significance: 0.01

# save residuals.

resB <- mantel.residuals(dist.PetL, dist.SepW); resB$residuals

resB.df <- as.data.frame(melt(as.matrix(resB$residuals))) #residuals as dataframe

# AB.

# regress residual Sepal.Length distance (A.) against residual Petal.Length distance (B.)

vegan::mantel(xdis=resB$residuals, ydis=resA$residuals, method="spearman", permutations=99)

#-->Mantel statistic r: 0.6245, Significance: 0.01

# save residuals.

res.AB <- cbind(resA.df, resB.df$value) #column-bind residuals A and residuals B

colnames(res.AB) <- c("var1","var2","resA","resB") #rename variables

res.AB <- res.AB[100:200,] #subset to get a smaller df, for this example

#(otherwise there are 22500 obs, meaning a slow calculation time and a messy plot)

I used geom_smooth in ggplot, to visually compare linear to loess regression lines. Since the red SE area (linear) is smaller than the blue SE area (loess), I would say that the linear relationship fits the pattern best. Does it make sense?

ggplot(res.AB, aes(y=resA, x=resB)) +

geom_point(size=2) +

geom_smooth(method="glm",formula = y ~ x, se=T, col="red", fill="#fadcdd",linetype="solid") +

geom_smooth(method="loess", se=T, col="blue", fill="lightblue", linetype="solid") +

theme_bw()

#red is linear

#blue is quadratic

But of course, this is not a proper test!

I had a look online, and this is my first attempt.

# linear model

mlin <- lm(resA~resB, data=res.AB)

# quadratic model

res.AB$resB2 <- res.AB$resB^2

mquad <- lm(resA~resB2, data=res.AB)

#create sequence of residual values

resvalues <- seq(-0.5, 0.5, length=64)

#create list of predicted values using the models

predictlin <- predict(mlin,list(resB=resvalues))

predictquad <- predict(mquad,list(resB=resvalues, resB2=resvalues^2))

predictlog <- predict(mlog,list(resB=resvalues))

# adjusted R-squared of each model

> summary(mlin)$adj.r.squared

[1] 0.6900567

> summary(mquad)$adj.r.squared

[1] 0.4382747

According to this, the linear model explains 69% of the variance, whereas quadratic model explains only 43.8%. Meaning that the linear model is better than the quadratic one. Is that correct?

The main problem is that I used a Mantel test, and not a LM. So, I am not sure... does this approach make sense?

In addition, the quadratic function (blue) looks quite different from the previous one.

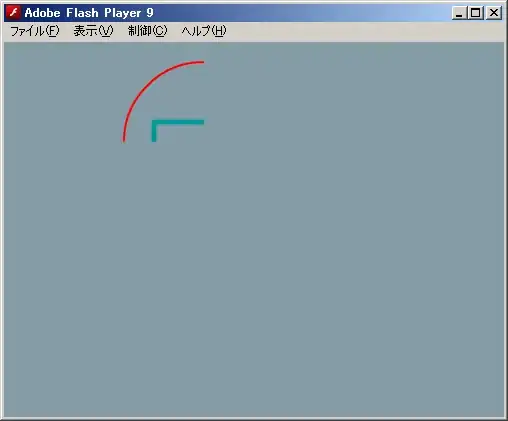

#create scatterplot of original data values

plot(res.AB$resB, res.AB$resA, pch=19, cex=0.5)

#add predicted lines based on quadratic regression model

lines(resvalues, predictquad, col='blue')

lines(resvalues, predictlin, col='red')

#red is linear

#blue is quadratic

I had the idea of using AIC (Akaike information criterion). But GLM doesn't allow negative values (like some of my residual values). Therefore, I transformed all my residuals to positive values simply by adding a number allowing this (residuals+1). After this transformation, I run GLM models with log and sqrt links. I don't know... how to get a quadratic GLM?

mlog <- glm((resA+1)~(resB+1), data=res.AB, family=poisson(link="log"))

msqrt <- glm((resA+1)~(resB+1), data=res.AB, family=poisson(link="sqrt"))

predictlog <- predict(mlog,list(resB=resvalues))

as.data.frame(AIC(mlin, mquad, mlog, msqrt))

as.data.frame(AIC(mlog, msqrt))

df AIC

mlin 3 -397.979

mquad 3 -337.922

mlog 2 Inf

msqrt 2 Inf

AIC values are:

linear: -397.979

quadratic: -337.922

logarithmic: Inf

squareroot: Inf

So, this approach is also not working.

How can I test whether a linear relatioship fits best my observed pattern than a convex relationship (or any other relationship)?

Thank you!

EDIT:

I came up with different methods to check for the goodness-of-fit of the linear model vs. the "convex model". However, I still don't know:

- what model (quadratic, exponential or something else) actually represents a convex (concave up) relationship

- what method is the most appropriate and/or accurate

Any reply to these questions would be highly appreciated!

Here is the code (sorry for its length)... maybe it can help someone else:

## fit models =============================================================================================

# Fit the null model

model_null <- lm(resA ~ 1, data = res.AB)

# Fit the linear model

model_lin <- lm(resA ~ resB, data = res.AB)

# Fit the quadratic model

model_quad <- lm(resA ~ resB + I(resB^2), data = res.AB)

model_quad <- lm(resA~poly(resB, degree = 2), data=res.AB) #idem

quad_model <- nls(resA ~ a * resB^2 + b * resB + c, data = res.AB, start = list(a = 1, b = 1, c = 1)) #with nls

# Fit the cubic model

model_cub <- lm(resA ~ resB + I(resB^3), data = res.AB)

cubic_model <- nls(resA ~ a + b*resB + c*resB^2 + d*resB^3,data = res.AB, #with nls

start = list(a = 1, b = 1, c = 1, d = 1))

# Fit an exponential model (intercept at zero)

exp_model <- nls(resA ~ a * exp(b * resB), data = res.AB, start = list(a = 1, b = 0.01)) #with nls

# Fit an exponential model (random intercept)

res.AB$id <- seq(1, 64, length=64)

exp_model <- nls(resA ~ exp(a + b * resB), #with nls

data = res.AB,

start = list(a = rnorm(1), b = rnorm(1)),

trace = TRUE)

# create a list of these models

model.list <- list(null = model_null, linear = model_lin, quadratic = model_quad, quadratic.nls=quad_model,

cubic=model_cub, exponential=exp_model)

lapply(model.list, summary)

# List of residuals

resid(model_lin)

# density plot

plot(density(resid(model_lin))) #unsure how to interpret that

hist(model_lin$residuals, main="Histogram of Residuals", xlab = "bf residuals")

## residual plot =============================================================================================

# points randomly scattered around x=0 -> appropriate model

# curved pattern -> LM captures the trend of some data points better than that of others -> consider another model (not linear model)

plot(res.AB$resB, model_lin$residuals,

ylab = "linear model residuals", xlab="independent variable"); abline(h=0, col="black", lwd=1, lty=2)

## visual plot =============================================================================================

#create sequence of residual values

resvalues <- seq(-0.5, 0.5, length=64)

#create list of predicted values using the models

predictlin <- predict(model_lin,list(resB=resvalues))

res.AB$resB2 <- res.AB$resB^2

predictquad <- predict(model_quad,list(resB=resvalues, resB2=resvalues^2))

res.AB$resB3 <- res.AB$resB^3

predictcub <- predict(model_cub,list(resB=resvalues, resB4=resvalues^4))

res.AB$resBexp <- exp(res.AB$resB)

predictexp <- predict(exp_model,list(resB=resvalues, resBexp=exp(resvalues)))

#create scatterplot of original data values

plot(res.AB$resB, res.AB$resA, pch=19, cex=0.9)

#add predicted lines based on quadratic regression model

lines(resvalues, predictquad, col='blue')

lines(resvalues, predictlin, col='red')

lines(resvalues, predictcub, col='darkgreen')

lines(resvalues, predictexp, col='orange')

## residuals vs fitted plot (1st plot of the sequence)

plot(model_lin)

plot(model_quad)

plot(model_cub)

plot(exp_model)

## R-squared =============================================================================================

# higher R-squared value indicates a better fit

sapply(model.list,

function(x) {

summary(x)$r.squared })

## AIC =============================================================================================

# lower AIC value indicates a better model fit.

sapply(model.list, AIC)

as.data.frame(AIC(model_null, model_lin, model_quad)) #idem

## MAE =============================================================================================

# lower MAE value indicates a better model fit.

sapply(model.list, function(x) mean(abs(x$residuals)))

## MSE =============================================================================================

# lower MSE value indicates a better model fit.

sapply(model.list, function(model) {

residuals <- residuals(model)

mean(residuals^2)})

## ANOVA =============================================================================================

# smaller RSS indicates a better fit ??

# if p-value <0.05, we can reject the null hypothesis that linear model is a better fit

anova(model_lin, model_quad)

## Ramsey RESET test =============================================================================================

# this test does not assumes that the quadratic model is nested within the linear model

library("car")

linearHypothesis(model_quad, c("I(resB^2) = 0"), test = "F")

anova_result <- sapply(model.list, anova)

print(anova_result)

## stepAIC =============================================================================================

# Fit all possible models with up to quadratic terms

all_models <- lm(resA ~ I(resB^2), data = res.AB)

# Use stepAIC to perform model selection and find the best-fitting model

best_model <- MASS::stepAIC(all_models, direction = "both")

# Print the summary of the best-fitting model

summary(best_model)

## Pearson =============================================================================================

#Pearson's chi-squared test for goodness of fit.

# Calculate the expected values

expected <- predict(model_lin)

# Divide the data into 10 groups

res.AB$group <- cut(expected, breaks = 10)

# Calculate the observed frequencies in each group

observed <- table(res.AB$group, res.AB$resA > 0)

# Perform the Pearson's chi-squared test for goodness of fit

chisq.test(observed)