I have a C# script and a shader that are supposed to create two game objects and discard pixels of the second game object, depending on whether the b value of each pixel in a camera's render texture equals a b value that was previously set and returned by that game object's shader.

C#

private void Start() {

//Create two slime game objects

//Set one of their layers to depth

//Set one of their shaders that is applied to the material to Blend mode no pass

//Material.SetFloat(_Depth) -> for that specific material

//Graphics.Blit for that specific render texture(game object sprite itself)

//The shader pass should return rgba for each pixel where r is passed depth.

//However, it must only do so for pixels that constitute the game object, not background.

//

//Review Instantiating game objects

Debug.Log("Start called.");

GameObject depthSlime1 = Instantiate(slime);

Material depthMaterial1 = depthSlime1.GetComponent<Renderer>().material;

depthMaterial1.renderQueue = 0;

depthMaterial1.SetFloat("_Depth", 1);

Graphics.Blit(null, null, depthMaterial1, 1);

GameObject depthSlime2 = Instantiate(slime2);

Material depthMaterial2 = depthSlime2.GetComponent<Renderer>().material;

depthMaterial2.renderQueue = 1;

depthMaterial2.SetFloat("_Depth", 2);

Graphics.Blit(null, null, depthMaterial2, 1);

//After this Blit, shader successfully writes 2 to the b value of the rgba

//of the pixels of depthSlime2

//However, it does not seem to write the same b value in the pixel of depthSlime2

//in depth camera target texture

//I have figured that an object with lower renderQueue will overwrite pixels of

//an object with higher render queue.

//Experiment if it still works when I Blit arbitrary depth values to alpha.

//It works!

//Sample alpha and see if it matches _Depth for purple slime

depthMaterial2.SetVector("_CenterPos", depthSlime2.transform.position);

//Did depthCameraRender change at this point?

depthMaterial2.SetTexture("_DepthTex", depthCameraRender);

Graphics.Blit(depthCameraRender, null, depthMaterial2, 2);

}

Shader

Shader "Unlit/TestShader1"

{

Properties

{

_MainTex ("Texture", 2D) = "" {}

_Depth ("Depth", Float) = 0

_CenterPos ("Center Position of this Game Object", Vector) = (0,0,0,0)

_DepthTex ("Texture", 2D) = "" {}

}

SubShader

{

Tags { "RenderType"="Transparent" } //"QUEUE"="Transparent" } //"RenderType"="Transparent" }

LOD 100

//Cull Front

Blend SrcAlpha OneMinusSrcAlpha

Pass

{

CGPROGRAM

#pragma vertex vert

#pragma fragment frag

// make fog work

//#pragma multi_compile_fog

#include "UnityCG.cginc"

struct appdata

{

float4 vertex : POSITION;

float2 uv : TEXCOORD0;

};

struct v2f

{

float2 uv : TEXCOORD0;

//UNITY_FOG_COORDS(1)

float4 vertex : SV_POSITION;

};

sampler2D _MainTex;

float4 _MainTex_ST;

float _Depth;

v2f vert (appdata v)

{

v2f o;

o.vertex = UnityObjectToClipPos(v.vertex);

o.uv = TRANSFORM_TEX(v.uv, _MainTex);

//UNITY_TRANSFER_FOG(o,o.vertex);

return o;

}

float4 frag (v2f i) : SV_Target

{

// sample the texture

float4 col = tex2D(_MainTex, i.uv);

// apply fog

//UNITY_APPLY_FOG(i.fogCoord, col);

//col.a = 0.5;

if(_Depth > 0) {

//Checking if this shader is part of depth storing game object

col.a = _Depth;

}

return col;

}

ENDCG

}

Pass

{

CGPROGRAM

#pragma vertex vert

#pragma fragment frag

// make fog work

//#pragma multi_compile_fog

#include "UnityCG.cginc"

struct appdata

{

float4 vertex : POSITION;

float2 uv : TEXCOORD0;

};

struct v2f

{

float2 uv : TEXCOORD0;

//UNITY_FOG_COORDS(1)

float4 vertex : SV_POSITION;

};

sampler2D _MainTex;

float4 _MainTex_ST;

float _Depth;

v2f vert (appdata v)

{

v2f o;

o.vertex = UnityObjectToClipPos(v.vertex);

o.uv = TRANSFORM_TEX(v.uv, _MainTex);

//UNITY_TRANSFER_FOG(o,o.vertex);

return o;

}

fixed4 frag (v2f i) : SV_TARGET

{

// sample the texture

fixed4 col = tex2D(_MainTex, i.uv);

// apply fog

//UNITY_APPLY_FOG(i.fogCoord, col);

//col.a = 0.5;

if(_Depth == 2) {

//This block definitely runs!

col.b = _Depth / 255;

}

return col;

}

ENDCG

}

Pass

{

CGPROGRAM

#pragma vertex vert

#pragma fragment frag

// make fog work

//#pragma multi_compile_fog

#include "UnityCG.cginc"

struct appdata

{

float4 vertex : POSITION;

float2 uv : TEXCOORD0;

};

struct v2f

{

float2 uv : TEXCOORD0;

//UNITY_FOG_COORDS(1)

float4 vertex : SV_POSITION;

};

sampler2D _MainTex;

float4 _MainTex_ST;

sampler2D _DepthTex;

float4 _DepthTex_ST;

float4 _CenterPos;

float _Depth;

v2f vert (appdata v)

{

v2f o;

o.vertex = UnityObjectToClipPos(v.vertex);

o.uv = TRANSFORM_TEX(v.uv, _MainTex);

//UNITY_TRANSFER_FOG(o,o.vertex);

return o;

}

float4 frag (v2f i) : SV_TARGET

{

// Get the screen position and UV coordinates

float4 screenPos = UnityObjectToClipPos(i.vertex);

//float2 uv = screenPos.xy / screenPos.w * 0.5 + 0.5;

float2 uv = i.uv * 0.25;

// sample the texture

float4 col = tex2D(_MainTex, i.uv);

//_MainTex = texture of depthSlime2

// apply fog

//UNITY_APPLY_FOG(i.fogCoord, col);

//col.a = 0.5;

//_CenterPos.xy must also be in translated uv

//ComputeScreenPos(_CenterPos).xy = Not quite

//The code below does not seem to run.

//Even when changing the material shader's return color,

//This does not seem to change the target texture(rendered image)

//of the depth camera.

//DepthTex = depth camera target texture

if(tex2D(_DepthTex, uv + ComputeScreenPos(_CenterPos).xy).b == 2 / 255) {

//This block does run but instead of discarding pixels from depthSlime2

//It turns them yellow.

discard;

}

return col;

}

ENDCG

}

}

}

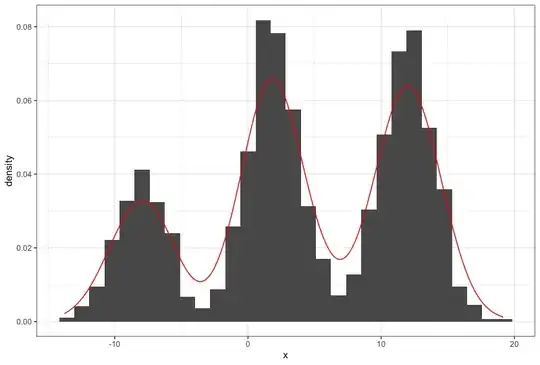

Objects that are supposed to overwrite the pixels of another game object are given progressively lower render queues, so that they win when the 2D orthographic camera renders into its render texture. My question is this: why doesn't the shader discard the pixels of the larger game object that are not covered by the other game object? It does not discard any of the non-covered pixels. Instead, it looks like this:

With the discarding conditional block commented out:

This behavior is very strange. When I comment out the conditional block that does the discarding, the camera's render texture changes, which shows that the conditional block at least runs. If so, _Depth must be correctly encoded as 2 / 255 in the b value of every pixel that constitutes depthSlime2 in the camera's render texture. Then why isn't the shader discarding the non-covered pixels?
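The encoding assumption in the paragraph above can be checked outside Unity. The sketch below is plain C#, not shader code, and the names `ChannelEncoding`, `EncodeToByte`, `DecodeFromByte` and `MatchesDepth` are hypothetical helpers made up for illustration. It assumes the render texture stores 8 bits per channel, and it assumes the shader compiler evaluates the literal `2 / 255` as integer division the way C# does. I believe that is how HLSL treats two integer literals, but I have not confirmed it for this project.

```csharp
using System;

public static class ChannelEncoding
{
    // Assumption: each channel is stored as an 8-bit unsigned normalized
    // value, so 0..1 in the shader maps to 0..255 in the texture.
    public static byte EncodeToByte(float value)
    {
        float clamped = Math.Max(0f, Math.Min(1f, value));
        return (byte)Math.Round(clamped * 255f);
    }

    // What a texture sample would return for a stored byte.
    public static float DecodeFromByte(byte stored)
    {
        return stored / 255f;
    }

    // Compare with a tolerance of half a quantization step instead of ==.
    public static bool MatchesDepth(float sampled, int depth)
    {
        float expected = depth / 255f;
        return Math.Abs(sampled - expected) < 0.5f / 255f;
    }

    public static void Main()
    {
        int integerQuotient = 2 / 255;   // integer division, evaluates to 0
        float floatQuotient = 2f / 255f; // what "col.b = _Depth / 255" writes

        // Simulate writing the value to the texture and sampling it back.
        float sampled = DecodeFromByte(EncodeToByte(floatQuotient));

        Console.WriteLine(integerQuotient);            // 0
        Console.WriteLine(sampled == integerQuotient); // False
        Console.WriteLine(MatchesDepth(sampled, 2));   // True
    }
}
```

If the same integer-division rule applies in the shader, the test `.b == 2 / 255` is really `.b == 0`, so it would pass only where the sampled blue channel is exactly zero rather than where depthSlime2 wrote its depth. Writing the literal as `2.0 / 255.0` and comparing with a small tolerance, as `MatchesDepth` does, would be one way to rule this out.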

A deeper question: if I apply a shader to a material and attach that material to a 2D game object, does the camera update its render texture (target texture) to reflect the shader's changes as soon as the shader writes to the pixels of the game object? Or does it keep the original render texture and ignore all changes the shader makes to the game object? It is definitely not the latter, because I have seen some visual changes in the camera's render texture. So it must be that the camera, when producing its render texture, does not fully respect the _Depth value that the shader encodes in each pixel's b value.