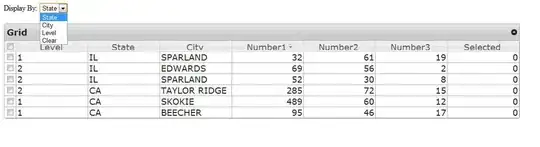

I want to build an LSTM model that uses its own forecast as one of its input features. That way the model benefits from the values of this feature but also learns to deal with its own error. It is a kind of partially autoregressive model.

Note, for those who like all the details: the last feature holds the values at T-1, and the forecast is the estimate for T-0.

My strategy:

To train this model, I create inputs with more timesteps than the model requires, so that I can slice the data with a moving window. This way I can update the next input slice with my previous forecast. Let's look at the sketch to simplify the explanation.

First, this is an LSTM model with a final dense layer that outputs a one-time-step forecast.

Here is the shape of the initial data:

Slicing the data to feed the model for the first forecast time step (darker colors = used on this time step):

After the first forecast, the window moves by one time step.

On the last feature, I insert the previous forecast at the last time step for the next forecasting operation:

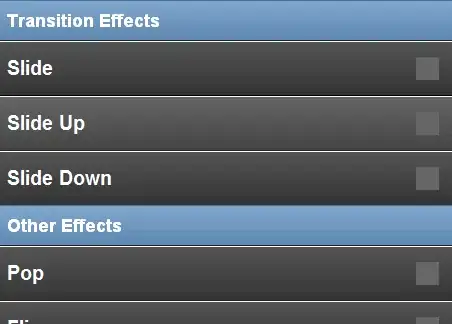
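To make the data flow above concrete, here is a minimal NumPy sketch of the moving window with feedback on a single series. It is not my Keras model: `toy_forecast` is a hypothetical stand-in for the LSTM and dense layers, and `rolling_forecast`, `n_in`, and `n_out` are names made up for this illustration only.

```python
import numpy as np


def toy_forecast(window):
    """Hypothetical stand-in for the LSTM + Dense stack.

    It simply returns the mean of the fed-back feature (last column).
    """
    return float(window[:, -1].mean())


def rolling_forecast(other_features, last_feature, n_in, n_out):
    """Forecast n_out steps, feeding each forecast back into the last feature.

    other_features: array of shape (n_in + n_out - 1, n_feat)
    last_feature:   array of shape (n_in,), the feature being forecast
    """
    fed_back = np.asarray(last_feature, dtype=float).copy()
    forecasts = []
    for i in range(n_out):
        if i > 0:
            # Drop the oldest value and append the previous forecast,
            # so the window keeps exactly n_in time steps.
            fed_back = np.append(fed_back[1:], forecasts[-1])
        # Window over the other features, moved forward by i steps.
        chunk = other_features[i:i + n_in]
        # Join along the feature axis: shape (n_in, n_feat + 1).
        window = np.column_stack([chunk, fed_back])
        forecasts.append(toy_forecast(window))
    return np.array(forecasts)


other = np.zeros((5, 2))  # 3 input steps + 3 output steps - 1
print(rolling_forecast(other, [1.0, 2.0, 3.0], n_in=3, n_out=3))
```

The point of the sketch is only the bookkeeping: the window over the other features slides by index, while the forecast feature is rebuilt at each step from its own previous values plus the latest forecast.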

Here is the code I wrote, with some comments. I include only the `call` method of my subclassed model. Ask me if you need more:

    def call(self, inputs, training=True):
        from tensorflow.python.ops.numpy_ops import np_config
        np_config.enable_numpy_behavior()

        # Use a Python list to collect the dynamically unrolled outputs.
        predictions = []

        # The last LSTM feature (the one to forecast) is split from the other LSTM features
        # feature to update from the previous forecast
        last_feature_input = inputs[1]  # the last one
        # all other features
        lstm_input = inputs[0]
        # inputs to concatenate with the LSTM output later inside the model
        att_input = inputs[2]

        # Run the prediction step by step.
        for i in range(0, self.num_timesteps_out):
            # after the first forecast run
            if i > 0:
                # slice the new window - one step
                app_input = tf.slice(last_feature_input, [0,1], [tf.shape(last_feature_input)[0], tf.shape(last_feature_input)[1]-1])
                # add the previous forecast as the last time step to complete the full window
                last_feature_input = tf.keras.layers.Concatenate(axis=1)([last_feature_input, prediction.reshape(1, -1)])

            # join the other LSTM features with the last one to feed the LSTM
            last_feature_input = tf.keras.layers.Reshape((10, 1))(last_feature_input)
            input_chunk = tf.slice(lstm_input, [0, i, 0], [tf.shape(lstm_input)[0], self.num_timesteps_in, tf.shape(lstm_input)[2]])
            input_chunk = tf.keras.layers.Concatenate(axis=1)([input_chunk, last_feature_input])

            # start model
            extraction_info = tf.keras.layers.LSTM(240,  # activation='softsign'
                                                   kernel_initializer=tf.keras.initializers.glorot_uniform(),
                                                   return_sequences=False, stateful=False)(input_chunk)

            # Merge the LSTM output with att_input for the last stage of the model
            merged_input = self.Concatenate([extraction_info, att_input])
            prediction = self.Dense_out(merged_input)

            # Add the prediction to the final output.
            predictions.append(prediction)

        # predictions.shape => (time, batch, features)
        predictions = tf.stack(predictions)
        # predictions.shape => (batch, time, features)
        predictions = tf.transpose(predictions, [1, 0])
        return predictions

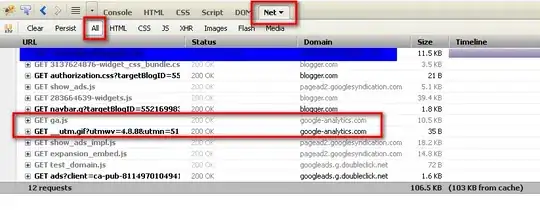

Current error:

ValueError: Shape must be rank 2 but is rank 3 for '{{node feed_back/Slice_1}} = Slice[Index=DT_INT32, T=DT_FLOAT](feed_back/reshape/Reshape, feed_back/Slice_1/begin, feed_back/Slice_1/size)' with input shapes: [?,10,1], [2], [2].

Call arguments received by layer "feed_back" " f"(type FeedBack):

• inputs=('tf.Tensor(shape=(None, 15, 2), dtype=float32)', 'tf.Tensor(shape=(None, 10), dtype=float32)', 'tf.Tensor(shape=(None, 17), dtype=float32)')

• training=True
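My current reading of the error, which may be wrong: the `Reshape((10, 1))` call overwrites `last_feature_input` with a rank-3 tensor of shape `[?,10,1]`, so on the second pass through the loop the two-element `begin` and `size` arguments of `tf.slice` no longer match its rank. The NumPy sketch below only tracks shapes under that assumption. The sizes 15, 2, and 10 come from the call arguments above; the batch size of 4 and the names `step`, `buf`, and `fake_prediction` are made up for the illustration.

```python
import numpy as np

batch, n_in, n_total, n_feat = 4, 10, 15, 2
lstm_input = np.zeros((batch, n_total, n_feat))  # plays the role of inputs[0]
last_feature = np.ones((batch, n_in))            # plays the role of inputs[1], rank 2


def step(last_feature, prediction, i):
    """Shape handling of one loop iteration.

    prediction is None on the first pass, otherwise shape (batch, 1).
    """
    if prediction is not None:
        # Slide the rank-2 buffer: drop the oldest step, append the forecast.
        last_feature = np.concatenate([last_feature[:, 1:], prediction], axis=1)
    # Moving window over the other features: (batch, n_in, n_feat).
    chunk = lstm_input[:, i:i + n_in, :]
    # Expand to rank 3 in a separate variable so the buffer itself stays rank 2,
    # then join along the feature axis: (batch, n_in, n_feat + 1).
    window = np.concatenate([chunk, last_feature[:, :, None]], axis=-1)
    return last_feature, window


buf, window = step(last_feature, None, 0)
fake_prediction = np.full((batch, 1), 5.0)  # placeholder for the Dense output
buf, window = step(buf, fake_prediction, 1)
print(buf.shape, window.shape)
```

If this reading is right, the rank-2 buffer and the rank-3 window need different variable names in my `call` method, and the join with the other features has to happen on the last axis rather than on axis 1.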