I have done the following steps as inputs to the problem:

- trained an MNIST model using TensorFlow 2.11 (see link below)
- made the model Quantization Aware (QA) using tfmot.quantization.keras.quantize_model
- trained the QA model a bit more to adapt to INT8 precision
- quantized the model using TensorFlow Lite and saved it to a tflite file.

The code for the steps above is available on the TensorFlow page for Quantization aware training and can be run in Google Colab. The network looks like this:

Now I want to recreate this model in TensorRT using the Python API (C++ would be OK as well, but Python is easier).

The environment was prepared according to the TensorRT Support Matrix:

- CUDA 11.7
- cuDNN 8.4.1
- TensorRT 8.4.3
- Python 3.8
- pycuda 2022.2.2
- Ubuntu 20.04 LTS

The TensorRT API allows building a network in code and setting its weights and biases directly. I have extracted the weights and biases from the quantized tflite model using Netron and saved them as numpy arrays (.npy):

- weights are int8
- biases are int32
- scales are FP32
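For reference, here is a minimal NumPy sketch of how I understand these arrays relate to real (FP32) values. It assumes the usual TFLite convention: symmetric per-filter weight scales with zero point 0, and a bias scale equal to the input scale times the filter scale. The helper names and all numeric values are made up for illustration; in practice the arrays would be loaded from the .npy files, with the weights already arranged as (filters, channels, height, width).

```python
import numpy as np

def dequantize_weights(w_int8, w_scales):
    """Per-filter dequantization: w_fp32[c] = w_int8[c] * w_scales[c]."""
    # Broadcast one scale per filter over (filters, channels, kh, kw).
    return w_int8.astype(np.float32) * w_scales.reshape(-1, 1, 1, 1)

def dequantize_bias(b_int32, input_scale, w_scales):
    """Assumes the bias scale of filter c is input_scale * w_scales[c]."""
    return b_int32.astype(np.float32) * (input_scale * w_scales)

# Placeholder data with the same dtypes as the extracted arrays (not real values).
nr_filters, nr_channels = 12, 1
w_int8 = np.full((nr_filters, nr_channels, 3, 3), 64, dtype=np.int8)
b_int32 = np.full(nr_filters, 1000, dtype=np.int32)
w_scales = np.full(nr_filters, 0.01, dtype=np.float32)
input_scale = np.float32(1.0 / 255.0)

w_fp32 = dequantize_weights(w_int8, w_scales)
b_fp32 = dequantize_bias(b_int32, input_scale, w_scales)
print(w_fp32.shape, w_fp32.dtype, b_fp32.shape, b_fp32.dtype)
```

The FP32 arrays produced this way have the dtype that the network definition further down currently uses for its kernel and bias (np.float32).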

The model, weights and biases are available on google drive at this location.

The question is:

How to make this model scale outputs from the layers back to int8 using scales from the quantized tflite model?

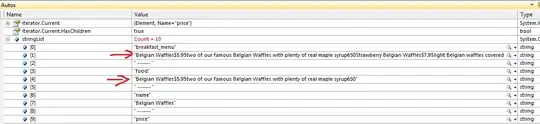

For example, the convolutional layer performs its operations in int8 and accumulates the results in int32 or fp32; that output then needs to be scaled back to int8. It looks like there are 12 scales for the filters, 12 scales for the biases, 1 scale for the input and 1 scale for the output.
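To make the rescaling step concrete, this is a small NumPy sketch of the arithmetic as I understand it from the TFLite quantization scheme. It is not TensorRT code. It assumes the int32 accumulator of filter c (with the int32 bias already added) has scale input_scale * filter_scales[c], so the per-filter multiplier back to int8 is input_scale * filter_scales[c] / output_scale. The function name `requantize` and all numbers are hypothetical.

```python
import numpy as np

def requantize(acc_int32, input_scale, filter_scales, output_scale, output_zero_point=0):
    """Rescale per-filter int32 accumulators of shape (C, H, W) to int8."""
    # Real value of an accumulator in filter c: acc * input_scale * filter_scales[c].
    multiplier = (input_scale * filter_scales / output_scale).reshape(-1, 1, 1)
    q = np.rint(acc_int32.astype(np.float64) * multiplier) + output_zero_point
    # Saturate to the int8 range before casting.
    return np.clip(q, -128, 127).astype(np.int8)

# Illustrative values only (not taken from the model): 2 filters, 1x2 feature map.
filter_scales = np.array([0.02, 0.04], dtype=np.float64)
acc = np.array([[[100, -50]], [[100, 100000]]], dtype=np.int32)
out = requantize(acc, input_scale=0.5, filter_scales=filter_scales, output_scale=0.1)
print(out)
```

With these numbers the multipliers are 0.1 and 0.2, and the last accumulator saturates at 127.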

Input scale:

filter scales:

bias scales:

output scales:

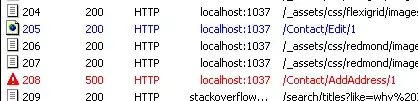

The model is already trained and the weights are already quantized - so I don't want to do any extra calibration in TensorRT - I also don't want to use the dynamic range API. I get the error:

[TRT] [E] 4: input_image: input/output with DataType Int8 in network without Q/DQ layers must have dynamic range set when no calibrator is used.

The model's architecture is as defined below - seems like Quantization and Dequantization (Q/DQ) layers are needed but I can't find any examples on how to do that. Can you please provide the code to make the tflite int8 model work in TensorRT? Possibly with Q/DQ layers.
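To clarify what I mean by Q/DQ, this is my understanding of what a quantize/dequantize pair computes, sketched in NumPy rather than with the TensorRT API. It assumes symmetric quantization with the zero point fixed at 0, which is what I understand TensorRT Q/DQ layers expect; TFLite activations may carry a nonzero zero point, which this sketch ignores. The scale and the input values are made up.

```python
import numpy as np

def quantize(x, scale):
    """Q: FP32 -> INT8, symmetric (zero point 0), saturating to [-128, 127]."""
    return np.clip(np.rint(x / scale), -128, 127).astype(np.int8)

def dequantize(q, scale):
    """DQ: INT8 -> FP32."""
    return q.astype(np.float32) * np.float32(scale)

x = np.array([-1.0, 0.0, 0.26, 5.0], dtype=np.float32)
scale = 0.02  # made-up scale, not from the model

q = quantize(x, scale)
x_hat = dequantize(q, scale)
print(q, x_hat)
```

Values inside the representable range survive the round trip up to rounding error, while 5.0 saturates at 127 and comes back as 2.54.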

I have set the weights here to 'ones' but in practice I load them from a .npy array.

    import numpy as np
    import tensorrt as trt

    def create_mnist(network, config):
        compute_type = trt.int8
        w_type = np.float32
        b_type = np.float32
        nr_channels = 1
        nr_filters = 12

        input_tensor = network.add_input(name="input_image", dtype=compute_type, shape=(nr_channels, 28, 28))

        # Convolution: 3x3 kernel, stride 1, 12 filters (28x28 -> 26x26)
        conv1_w = np.ones((nr_filters, nr_channels, 3, 3), dtype=w_type)
        conv1_b = np.ones(12, dtype=b_type)
        conv = network.add_convolution_nd(input=input_tensor, num_output_maps=nr_filters, kernel_shape=(3, 3), kernel=conv1_w, bias=conv1_b)
        conv.precision = compute_type
        conv.name = "conv_layer"
        conv.stride_nd = (1, 1)
        conv.set_output_type(0, compute_type)
        conv_output = conv.get_output(0)
        conv_output.name = "conv_output"
        conv_output.dtype = compute_type

        # ReLU activation
        relu = network.add_activation(input=conv_output, type=trt.ActivationType.RELU)
        relu.name = "relu"
        relu.precision = trt.int8
        relu.set_output_type(0, trt.int8)
        relu_output = relu.get_output(0)
        relu_output.name = "relu_output"
        relu_output.dtype = compute_type

        # Max pooling: 2x2 window, stride 2 (26x26 -> 13x13)
        pooling = network.add_pooling_nd(input=relu_output, type=trt.PoolingType.MAX, window_size=(2, 2))
        pooling.name = "pooling"
        pooling.set_output_type(0, trt.int8)
        pooling.stride_nd = (2, 2)
        pooling_output = pooling.get_output(0)
        pooling_output.name = "pooling_output"
        pooling_output.dtype = compute_type

        # Dense layer: 12 filters * 13 * 13 = 2028 inputs, 10 outputs
        dense_w = np.ones((10, 2028), dtype=w_type)
        dense_b = np.ones((10), dtype=b_type)
        dense = network.add_fully_connected(input=pooling_output, num_outputs=10, kernel=dense_w, bias=dense_b)
        dense.name = "Flatten dense layer"
        dense.set_output_type(0, trt.int8)
        dense_output = dense.get_output(0)
        dense_output.name = "digit_classification"
        dense_output.dtype = compute_type

        network.mark_output(tensor=dense_output)
        network.name = "MNIST INT8 network"