I have a use case where I need to run a Delta Live Tables (DLT) pipeline in triggered mode, and I would like to know whether checkpointing is supported in triggered mode.

My source is a streaming one where new data arrives every second. I would like to run the DLT pipeline in triggered mode once every 24 hours and pull the latest data from it.

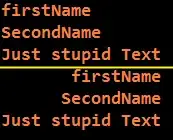

When I set the mode to streaming, I could see checkpoints being created, but I couldn't find a way to set checkpoints for triggered mode.

Can we get incremental loading in triggered mode?
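To make concrete what I mean by incremental loading, here is a minimal, stdlib-only Python sketch of the behavior I'm after. It is not DLT's API: the JSON checkpoint file and the functions `load_offset`, `save_offset`, and `triggered_run` are hypothetical, and the source is modeled as an append-only list where a record's index is its offset. Each triggered run reads the last committed offset, processes only the records past it, and then commits the new offset, so a run every 24 hours would pick up just what arrived since the previous run.

```python
import json
import os
import tempfile


def load_offset(checkpoint_path: str) -> int:
    """Return the last committed offset, or 0 if no checkpoint exists yet."""
    if not os.path.exists(checkpoint_path):
        return 0
    with open(checkpoint_path) as f:
        return json.load(f)["offset"]


def save_offset(checkpoint_path: str, offset: int) -> None:
    """Commit the offset atomically: write a temp file, then rename it over the old one."""
    tmp_path = checkpoint_path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump({"offset": offset}, f)
    os.replace(tmp_path, checkpoint_path)


def triggered_run(source: list, checkpoint_path: str) -> list:
    """One triggered run (hypothetical): process only records appended since the last commit."""
    start = load_offset(checkpoint_path)
    batch = source[start:]  # records that earlier runs have not seen
    # ... write `batch` to the target table here (omitted in this sketch) ...
    save_offset(checkpoint_path, start + len(batch))  # commit only after processing
    return batch


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp_dir:
        ckpt = os.path.join(tmp_dir, "offsets.json")
        events = [{"ts": i} for i in range(3)]
        print(triggered_run(events, ckpt))  # first run: all three records
        events.extend({"ts": i} for i in range(3, 5))
        print(triggered_run(events, ckpt))  # next run: only the two new records
        print(triggered_run(events, ckpt))  # nothing new arrived: empty batch
```

The offset is committed only after the batch is processed, so if a run fails midway, the next run starts again from the previous offset instead of skipping data.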