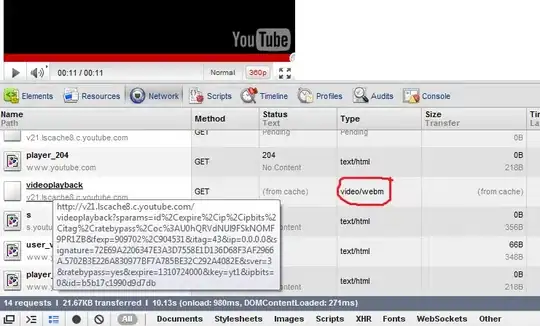

I need help changing the lip color of a person in a video using MediaPipe. I've used MediaPipe for facial landmark detection and tracking, but I'm not sure how to proceed with changing the lip color. I couldn't find any resources on how to achieve this in the MediaPipe documentation.

This has more to do with OpenCV than MediaPipe. You might want to search for how to fill a polygon using cv2.fillPoly. You will need the landmarks to define the contour; you can refer to this image to find which landmarks to use.

I'm using Python and OpenCV, running the code on Google Colab. I tried the method suggested by @fadiaburaid, but the result was not up to the mark. The polygons seem to dance because the coordinates detected by MediaPipe change continuously from frame to frame, and the polygons drawn on the image look visibly inconsistent. I tried feathering, but it didn't bring the quality of the results to an acceptable level.

Any suggestions to improve and stabilize the polygon blending are welcome!
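One common way to reduce this kind of frame-to-frame jitter is to smooth the landmark coordinates over time before drawing the polygon. The sketch below uses an exponential moving average; it is not something taken from the MediaPipe documentation, and the class name `LandmarkSmoother` and the `alpha` parameter are hypothetical names chosen for illustration.

```python
import numpy as np


class LandmarkSmoother:
    """Exponential moving average over per-frame landmark coordinates."""

    def __init__(self, alpha=0.5):
        # alpha near 1 follows new detections closely; near 0 smooths harder
        # but makes the polygon lag behind fast mouth movements.
        self.alpha = alpha
        self.state = None

    def update(self, points):
        points = np.array(points, dtype=np.float32)
        if self.state is None:
            # First frame: nothing to average with yet.
            self.state = points
        else:
            self.state = self.alpha * points + (1.0 - self.alpha) * self.state
        return self.state


smoother = LandmarkSmoother(alpha=0.5)
first = smoother.update([[100, 200], [110, 205]])
second = smoother.update([[104, 200], [110, 209]])
```

With `alpha=0.5`, the second call returns the midpoint between the previous smoothed points and the new detections. For video input it may also help to create `FaceMesh` with `static_image_mode=False`, which lets MediaPipe track landmarks across frames instead of running detection on every frame.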

Face Cropping

from google.colab import output

from google.colab.patches import cv2_imshow

import cv2

import mediapipe as mp

# Load the MediaPipe Face Detection model

mp_face_detection = mp.solutions.face_detection

# Initialize the Face Detection model

face_detection = mp_face_detection.FaceDetection()

# Load the image

image = cv2.imread('/content/wallpaper.png')

# Convert the image to RGB

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

# Detect faces in the image

results = face_detection.process(image)

# Get the first detected face

face = results.detections[0]

# Get the bounding box of the face

x1 = int(face.location_data.relative_bounding_box.xmin * image.shape[1])

y1 = int(face.location_data.relative_bounding_box.ymin * image.shape[0])

x2 = int(x1 + face.location_data.relative_bounding_box.width * image.shape[1])

y2 = int(y1 + face.location_data.relative_bounding_box.height * image.shape[0])

# Calculate the size of the square bounding box

size = max(x2 - x1, y2 - y1)

# Calculate the center of the bounding box

center_x = (x1 + x2) // 2

center_y = (y1 + y2) // 2

# Calculate the coordinates of the square bounding box

x1_square = max(center_x - size // 2, 0)  # clamp so the slice never starts at a negative index

y1_square = max(center_y - size // 2, 0)

x2_square = x1_square + size

y2_square = y1_square + size

# Crop the square face region from the original image and resize it

square_face_region = image[y1_square:y2_square, x1_square:x2_square]

resized_image = cv2.resize(square_face_region, (480, 480))

resized_image_bgr = cv2.cvtColor(resized_image, cv2.COLOR_RGB2BGR)

# Save the image

cv2.imwrite('resized_image.jpg', resized_image_bgr)
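
A square box centred on a face near the image border can extend past the frame, and a negative slice start wraps around in NumPy instead of raising an error. A helper along these lines keeps the box inside the image; the function name `square_crop_box` and its argument order are assumptions made for this sketch.

```python
def square_crop_box(x1, y1, x2, y2, img_w, img_h):
    """Return (left, top, right, bottom) of a square around a box, clipped to the image."""
    size = max(x2 - x1, y2 - y1)
    cx, cy = (x1 + x2) // 2, (y1 + y2) // 2
    # Clamp the top-left corner so slicing never starts at a negative index.
    left = max(cx - size // 2, 0)
    top = max(cy - size // 2, 0)
    # Clamp the bottom-right corner to the image size.
    right = min(left + size, img_w)
    bottom = min(top + size, img_h)
    return left, top, right, bottom


# Example: a tall face box close to the left edge of a 300x300 image.
box = square_crop_box(10, 40, 110, 200, img_w=300, img_h=300)
```

The crop is then `image[top:bottom, left:right]`. Note that when clipping happens the region may no longer be exactly square, so the later resize to 480x480 can stretch it slightly.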

Mask Generation

import itertools

import numpy as np

# Load the MediaPipe Face Mesh model

mp_face_mesh = mp.solutions.face_mesh

# Initialize the Face Mesh model

face_mesh = mp_face_mesh.FaceMesh(static_image_mode=True, refine_landmarks=True, min_detection_confidence=0.5)

image = resized_image_bgr

# Define the lip landmark indices: upper_new traces the outer lip contour, lower_new the inner (mouth opening) contour

# LIPS = list(set(itertools.chain(*mp_face_mesh.FACEMESH_LIPS)))

# upper = [409,405,375,321,314,267,269,270,291,146,181,185,91,84,61,37, 39, 40,0,17]

# lower = [402,415,312,311,310,308,324,318,317,178,191,80, 81, 82,87, 88,95,78,13, 14]

upper_new = [0,267,269,270,409,291,375,321,405,314,17,84,181,91,146,61,185,40,39,37]

lower_new = [13,312,311,310,415,308,324,318,402,317,14,87,178,88,95,78,191,80,81,82]

# Detect the face landmarks (MediaPipe expects RGB input, so convert from BGR first)

results = face_mesh.process(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))

# Create an empty mask with the same shape as the image

mask_upper = np.zeros(image.shape[:2], dtype=np.uint8)

# Draw a white polygon on the mask using the upper_new landmarks

for face_landmarks in results.multi_face_landmarks:
    points_upper = []
    for i in upper_new:
        landmark = face_landmarks.landmark[i]
        # Landmarks are normalized to [0, 1]; scale them to pixel coordinates
        x = int(landmark.x * image.shape[1])
        y = int(landmark.y * image.shape[0])
        points_upper.append((x, y))
    # This contour is not convex, so use fillPoly rather than fillConvexPoly
    cv2.fillPoly(mask_upper, np.int32([points_upper]), 255)

# Create an empty mask with the same shape as the image

mask_lower = np.zeros(image.shape[:2], dtype=np.uint8)

# Draw a white polygon on the mask using the lower_new landmarks

for face_landmarks in results.multi_face_landmarks:
    points_lower = []
    for i in lower_new:
        landmark = face_landmarks.landmark[i]
        x = int(landmark.x * image.shape[1])
        y = int(landmark.y * image.shape[0])
        points_lower.append((x, y))
    cv2.fillPoly(mask_lower, np.int32([points_lower]), 255)

# Subtract the lower mask from the upper mask

mask_diff = cv2.subtract(mask_upper, mask_lower)

# Apply morphology operations to smooth mask

kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (5, 5))

mask_diff = cv2.morphologyEx(mask_diff, cv2.MORPH_OPEN, kernel)

mask_diff = cv2.morphologyEx(mask_diff, cv2.MORPH_CLOSE, kernel)

cv2_imshow(mask_diff)

Mask Blending

# Convert the mask to 3 channels

mask_diff_3ch = cv2.cvtColor(mask_diff, cv2.COLOR_GRAY2BGR)

image = cv2.imread('/content/resized_image.jpg')

# Apply the mask to the original image

masked_image = cv2.bitwise_and(image, mask_diff_3ch)

cv2_imshow(masked_image)

def create_colored_mask(hex_color, shape):
    # Convert the hex color code (with or without a leading '#') to an RGB tuple
    hex_color = hex_color.strip().lstrip('#')
    rgb_color = tuple(int(hex_color[i:i+2], 16) for i in (0, 2, 4))
    # Create a blank mask with the given shape
    colored_mask = np.zeros(shape, dtype=np.uint8)
    # OpenCV stores channels in BGR order, so reverse the RGB tuple
    colored_mask[:, :, 0] = rgb_color[2]
    colored_mask[:, :, 1] = rgb_color[1]
    colored_mask[:, :, 2] = rgb_color[0]
    return colored_mask

# Create a 3-channel version of your mask_diff array

mask_diff_3ch = cv2.cvtColor(mask_diff,cv2.COLOR_GRAY2BGR)

# Ask the user to enter a hex color code for their mask

hex_color = input('Enter a hex color code for your mask (e.g. FF0000 for red): ')

# Create a colored mask with the chosen hex color and same shape as your original mask

colored_mask = create_colored_mask(hex_color, mask_diff_3ch.shape)

# Keep the color only where the lip mask is non-zero

masked_image = cv2.bitwise_and(colored_mask, colored_mask, mask=mask_diff)

# Superimpose the colored mask on your original image

final_image = cv2.addWeighted(image, 1, masked_image, 1, 0)

cv2_imshow(final_image)

I'm getting the following results from the code above, but I want a much higher-quality result for both video and photo input.

Input Image: Input Image

Cropped Input Image: Cropped Input Image

Mask Image: Mask Image

Final Image: Final Image with Masking