I have a ROS bag file with markerArray markers that I am trying to draw on an image (so I can use as bounding boxes later on).

The data is for a trip from a vehicle (getting on the freeway ramp, driving around, then exiting the freeway).

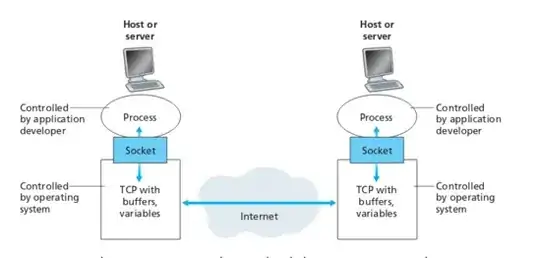

Here is what the markers look like in RViz (My vehicle is the middle cube with 3.40m):

I tried to draw the CUBE markers (red cubes) using OpenCV with the following code:

#include <rosbag/bag.h>

#include <rosbag/view.h>

#include <visualization_msgs/MarkerArray.h>

#include <opencv2/opencv.hpp>

#include <boost/filesystem.hpp>

int main(int argc, char** argv)

{

// Open the ROS bag file

rosbag::Bag bag;

bag.open("/home/myuser/001.bag", rosbag::bagmode::Read);

// Specify the topic that contains the marker array data

std::vector<std::string> topics;

topics.push_back("/dataTopic/objects_marker");

// Create a view of the ROS bag file containing the specified topic

rosbag::View view(bag, rosbag::TopicQuery(topics));

// Create a directory to store the image frames

std::string image_dir = "/home/myuser/ros_markers/image_frames";

if (!boost::filesystem::exists(image_dir))

{

boost::filesystem::create_directory(image_dir);

}

// Loop through the messages in the view

int frame_num = 0;

for (rosbag::MessageInstance const msg : view) {

// Convert the message to a marker array

visualization_msgs::MarkerArray::ConstPtr markers = msg.instantiate<visualization_msgs::MarkerArray>();

if (markers != NULL) {

// Create an empty image to draw the markers on

cv::Mat image = cv::Mat::zeros(480, 640, CV_8UC3);

// Loop through the markers in the array

for (int i = 0; i < markers->markers.size(); i++) {

// Get the marker properties

int id = markers->markers[i].id;

int type = markers->markers[i].type;

// Create a YUV422 color from the RGB values

cv::Scalar color(8, 8, 240, 1);

// Draw the marker on the image

if (type == visualization_msgs::Marker::CUBE) {

cv::Point3d position(markers->markers[i].pose.position.x, markers->markers[i].pose.position.y,

markers->markers[i].pose.position.z);

cv::Vec3d scale(markers->markers[i].scale.x, markers->markers[i].scale.y, markers->markers[i].scale.z);

cv::Matx33d rotation(markers->markers[i].pose.orientation.w, markers->markers[i].pose.orientation.z,

-markers->markers[i].pose.orientation.y,

-markers->markers[i].pose.orientation.z, markers->markers[i].pose.orientation.w,

markers->markers[i].pose.orientation.x,

markers->markers[i].pose.orientation.y, -markers->markers[i].pose.orientation.x,

markers->markers[i].pose.orientation.w);

cv::Vec3d half_scale = scale * 2;

// 8 points because Cube markers have 8 vertices

cv::Vec3d points[8] = {cv::Vec3d(half_scale[0], half_scale[1], half_scale[2]),

cv::Vec3d(-half_scale[0], half_scale[1], half_scale[2]),

cv::Vec3d(half_scale[0], -half_scale[1], half_scale[2]),

cv::Vec3d(-half_scale[0], -half_scale[1], half_scale[2]),

cv::Vec3d(half_scale[0], half_scale[1], -half_scale[2]),

cv::Vec3d(-half_scale[0], half_scale[1], -half_scale[2]),

cv::Vec3d(half_scale[0], -half_scale[1], -half_scale[2]),

cv::Vec3d(-half_scale[0], -half_scale[1], -half_scale[2])};

for (int j = 0; j < 8; j++) {

points[j] = rotation * points[j] + cv::Vec3d(position.x, position.y, position.z);

}

cv::Point image_points[8];

for (int j = 0; j < 8; j++) {

image_points[j] = cv::Point(points[j][0], points[j][1]);

}

cv::fillConvexPoly(image, image_points, 8, color);

}

}

// Write the image to a file

std::string image_path = image_dir + "/" + std::to_string(frame_num) + ".png";

cv::imwrite(image_path, image);

// Increment the frame number

frame_num++;

}

}

// Close the ROS bag file

bag.close();

return 0;

}

And this is what the drawn markers look like:

Now I understand that the issue might be the markers are recorded in real-world transform coordinates, therefore they are being drawn this way.

If I am drawing them correctly in my code, could someone tell if there is a way to draw them in camera transform coordinates (to view them from a front view from my vehicle looking ahead at the road)?

Thank you!

Edit:

Here is a data example of one of my markerArray markers:

header:

seq: 0

stamp: 1657906813.396054877

frame_id: vehicle_frame

ns: radar only

id: 44490

type: 1

action: 0

pose:

position:

x: 3.26551

y: 18.9191

z: 0.7

orientation:

x: 0

y: 0

z: -0.0441122

w: 0.999027

scale:

x: 1

y: 1

z: 1.4

color:

r: 1

g: 0

b: 0

a: 0.7