We would like to expose the data from delta table or synapse table to REST API service which is java based or either python language. kindly provide sample for java or python to kick start my new implementation.

Thanks

We would like to expose the data from delta table or synapse table to REST API service which is java based or either python language. kindly provide sample for java or python to kick start my new implementation.

Thanks

After reproducing from my end, I could get desired results by following either of the below mentioned ways.

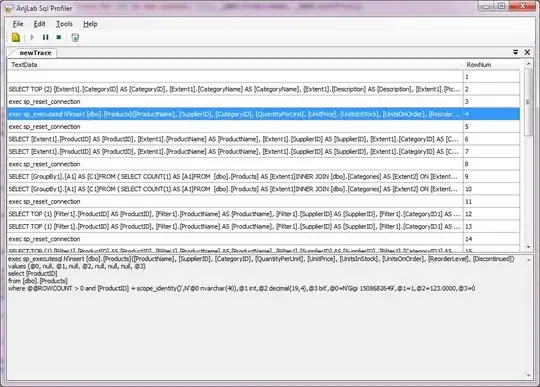

You can retrieve the delta table data using SQL statement through REST API. Below is the sample cod ethat was working for me.

import requests

my_json = {"statement": "select * from delta.`/FileStore/<YOUR_FILE_PATh>`", "warehouse_id": "<YOUR_WAREHOURSE_ID>"}

auth = {"Authorization": "Bearer <ACCESS_TOKEN>"}

response = requests.post('https://<DATABRICKS_WORKSPACE_URL>/api/2.0/sql/statements', json = my_json, headers=auth).json()

print(response['result']['data_array'])

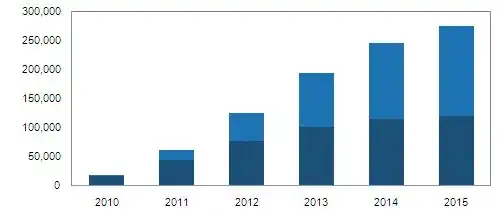

Results:

Using DBFS API I'm trying to list the files inside the folder and then appending each .parquet files' data inside dataframe where I'm storing the whole dataframe inside a CSV file. Below is the complete code that's working for me.

import requests

import base64

import tempfile

import pyarrow.parquet as pq

import pandas as pd

url = "https://<DATABRICKS_WORKSPACE_URL>/api/2.0/dbfs"

folder_path = "/FileStore/<YOUR_FOLDER_PATH>"

access_token = "<YOUR_ACCESS_TOKEN>"

headers = {"Authorization": f"Bearer {access_token}"}

params = {"path": folder_path}

# Lists folders in Databricks DBFS Folder

response = requests.get(url+'/list', headers=headers,params=params)

files = response.json()

final_df = None

for i in files['files'][1:]:

params = {"path":i['path']}

# Reads files individually

response = requests.get(url+'/read', headers=headers,params=params)

sample=base64.b64decode(response.json()['data'])

with tempfile.NamedTemporaryFile(delete=False) as f:

f.write(sample)

f.seek(0)

parquet_file = pq.ParquetFile(f.name)

df = parquet_file.read().to_pandas()

if(final_df is None):

final_df=df

else:

final_df = pd.concat([df, final_df])

final_df.to_csv("example.csv", index=False)

Results: