Describe the issue

I'm using the fluent-operator to deploy fluentbit to collect logs and fluentd to process them and send them to an OpenSearch domain with advanced security options enabled.

It works with open domains, but not with secured ones.

I noticed the Operator creates a Service Account for Fluentbit and Fluentd by default. I then attached an IAM Role for Service Accounts (IRSA) to Fluentd's Service Account with the following inlinePolicy:

apiVersion: auth.XXX.XXX.com/v1
kind: IRSA
metadata:
  name: fluent-test
  namespace: fluent-system
  annotations:
    auth.XXX.XXX.com/serviceaccount: managed
spec:
  serviceAccount: fluentd
  nameOverride: fluent-test
  path: /XXXX/
  inlinePolicy: |
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": "es:*",
          "Resource": [
            "arn:aws:es:*:XXXX:domain/*"
          ]
        }
      ]
    }

But the Fluentd pod still can't communicate with the specified domain:

The client is unable to verify distribution due to security privileges on the server side. Some functionality may not be compatible if the server is running an unsupported product.

2023-03-15 09:27:50 +0000 [warn]: #0 [ClusterFluentdConfig-cluster-fluentd-config::cluster::clusteroutput::fluentd-output-opensearch-0] Could not communicate to OpenSearch, resetting connection and trying again. [401]

2023-03-15 09:27:50 +0000 [warn]: #0 [ClusterFluentdConfig-cluster-fluentd-config::cluster::clusteroutput::fluentd-output-opensearch-0] Remaining retry: 14. Retry to communicate after 2 second(s).

2023-03-15 09:27:54 +0000 [warn]: #0 [ClusterFluentdConfig-cluster-fluentd-config::cluster::clusteroutput::fluentd-output-opensearch-0] Could not communicate to OpenSearch, resetting connection and trying again. [401]
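One possible reading of the 401 is that the requests reach the domain without an AWS Signature Version 4 (SigV4) signature, so the domain cannot tie them to the IAM role. The sketch below is not what fluentd does internally; it only illustrates, using the Python standard library, which headers a SigV4-signed request to the `es` service would carry. The function name `sigv4_headers`, the host, and the credentials are hypothetical placeholders, and the sketch assumes a request without a query string.

```python
import datetime
import hashlib
import hmac


def _hmac(key: bytes, msg: str) -> bytes:
    """HMAC-SHA256 helper used for the SigV4 key derivation chain."""
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def sigv4_headers(method, host, path, region, access_key, secret_key,
                  session_token=None, body=b"", service="es", now=None):
    """Return the headers of a SigV4-signed request (no query string).

    `path` is assumed to be already URI-encoded (for example "/").
    """
    now = now or datetime.datetime.now(datetime.timezone.utc)
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    date_stamp = now.strftime("%Y%m%d")
    payload_hash = hashlib.sha256(body).hexdigest()

    headers = {"host": host, "x-amz-date": amz_date}
    if session_token:
        # Temporary credentials (such as those behind IRSA) carry a session token.
        headers["x-amz-security-token"] = session_token

    # Canonical request: method, URI, query string, headers, signed headers, payload hash.
    signed_headers = ";".join(sorted(headers))
    canonical_headers = "".join(f"{k}:{headers[k]}\n" for k in sorted(headers))
    canonical_request = "\n".join(
        [method, path, "", canonical_headers, signed_headers, payload_hash]
    )

    # String to sign: algorithm, timestamp, credential scope, hashed canonical request.
    scope = f"{date_stamp}/{region}/{service}/aws4_request"
    string_to_sign = "\n".join([
        "AWS4-HMAC-SHA256",
        amz_date,
        scope,
        hashlib.sha256(canonical_request.encode("utf-8")).hexdigest(),
    ])

    # Derive the signing key: date -> region -> service -> "aws4_request".
    key = _hmac(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    for part in (region, service, "aws4_request"):
        key = _hmac(key, part)
    signature = hmac.new(
        key, string_to_sign.encode("utf-8"), hashlib.sha256
    ).hexdigest()

    headers["authorization"] = (
        f"AWS4-HMAC-SHA256 Credential={access_key}/{scope}, "
        f"SignedHeaders={signed_headers}, Signature={signature}"
    )
    return headers
```

Under IRSA, the access key, secret key, and session token would come from exchanging the projected service account token with STS (AssumeRoleWithWebIdentity). A client that never performs that exchange and never signs its requests would not benefit from the role, however permissive its policy is.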

After I apply the IRSA resource, the irsa-operator creates the corresponding role in AWS with the correct inlinePolicy, and the role even lists the OpenSearch Service under "Allowed Services". It also correctly attaches the role to the fluentd service account in the EKS cluster.

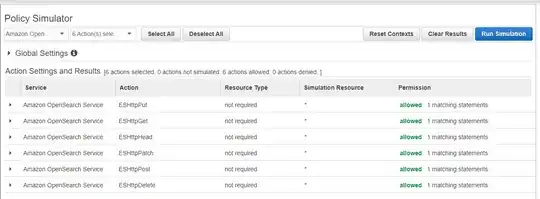
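To double-check the attachment from inside the pod, one option is to look for the two environment variables that EKS injects into pods whose service account is bound to an IAM role: `AWS_ROLE_ARN` and `AWS_WEB_IDENTITY_TOKEN_FILE`. The snippet below is a minimal sketch of such a check; the helper name `irsa_status` is hypothetical, and it assumes a Python interpreter is available wherever it runs.

```python
import os

# Environment variables injected by EKS when a pod's service account
# is associated with an IAM role (IRSA).
IRSA_ENV_VARS = ("AWS_ROLE_ARN", "AWS_WEB_IDENTITY_TOKEN_FILE")


def irsa_status(env=None):
    """Report which IRSA variables are missing and whether the token file exists."""
    env = os.environ if env is None else env
    missing = [name for name in IRSA_ENV_VARS if not env.get(name)]
    token_file = env.get("AWS_WEB_IDENTITY_TOKEN_FILE", "")
    return {
        "missing_env": missing,
        "token_file_exists": bool(token_file) and os.path.isfile(token_file),
    }
```

Calling `irsa_status()` with no arguments inspects the current process environment. An empty `missing_env` list together with `token_file_exists` set to `True` would suggest the role is wired into the pod, which would leave the client-side request signing as the remaining open question.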

I've also used the IAM Policy Simulator, which seems to indicate my role/policy is correct.
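As a rough local cross-check of the simulator result, the inline policy can be evaluated with a few lines of Python. This is a deliberately simplified sketch and not the real IAM evaluation logic: it only handles `Effect`, `Action`, and `Resource` with `*` and `?` wildcards, and ignores conditions, `NotAction`, resource-based policies, and permission boundaries. The helper names are hypothetical.

```python
import json
import re


def _wildcard_match(pattern, value, ignore_case=False):
    """Match an IAM-style pattern where * is any run of characters and ? is one."""
    regex = re.escape(pattern).replace(r"\*", ".*").replace(r"\?", ".")
    flags = re.IGNORECASE if ignore_case else 0
    return re.fullmatch(regex, value, flags) is not None


def _as_list(value):
    """IAM allows a single string or a list for Action, Resource, and Statement."""
    return value if isinstance(value, list) else [value]


def policy_allows(policy_json, action, resource):
    """Return True if some Allow statement matches and no Deny statement does."""
    policy = json.loads(policy_json)
    allowed = False
    for stmt in _as_list(policy.get("Statement", [])):
        if "Action" not in stmt or "Resource" not in stmt:
            continue  # NotAction / NotResource are out of scope for this sketch
        # Action names are case-insensitive; resource ARNs are matched as-is.
        action_hit = any(
            _wildcard_match(a, action, ignore_case=True)
            for a in _as_list(stmt["Action"])
        )
        resource_hit = any(
            _wildcard_match(r, resource) for r in _as_list(stmt["Resource"])
        )
        if action_hit and resource_hit:
            if stmt.get("Effect") == "Deny":
                return False  # an explicit Deny always wins
            allowed = allowed or stmt.get("Effect") == "Allow"
    return allowed
```

With the inlinePolicy shown earlier, a call such as `policy_allows(policy, "es:ESHttpPost", "arn:aws:es:us-west-2:XXXX:domain/any-name/_bulk")` returns `True`, which agrees with the simulator. Note that a permissive policy only answers whether the role may call the domain; it says nothing about whether the client actually presents that role's credentials.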

I'm starting to wonder if it's possible at all to use IRSA to give fluentd access to a secured OpenSearch domain...

I noticed that the fluentbit output plugin for OpenSearch has some parameters to deal with authentication and IAM roles, but fluentd's doesn't.

Is it an unavoidable limitation? Has anyone ever used the fluent-operator in a fluentbit-fluentd mode with fluentd using IRSA to connect to AWS OpenSearch?

To Reproduce

- Provision an OpenSearch domain with advanced security options using this Terraform provider.

I used the following inputs:

dedicated_master_enabled = "true"
dedicated_master_count = "3"
dedicated_master_type = "r5.large.search"
automated_snapshot_start_hour = "0"
domain_name = "any-name"
engine_version = "OpenSearch_2.3"
instance_type = "m5.large.search"
instance_count = 3
subnet_ids = [...]
volume_size = 50
vpc_id = your-vpc-id
default_zone_awareness_config = false
zone_awareness_enabled = true
create_iam_service_linked_role = "false"
encryption_enabled = "true"
enforce_https = "true"
node_to_node_encryption_enabled = "true"
retention_in_days = 7
warm_instance_enabled = "true"
warm_instance_type = "ultrawarm1.medium.search"
warm_instance_count = 2
cold_storage_enabled = "true"
sg_egress_all_enabled = "true"
sg_ingress_443_enabled = "true"
sg_ingress_9200_enabled = "true"

# Custom Endpoint
#custom_endpoint_fqdn = "your-custom-endpoint-fqdn"
#custom_endpoint_certificate_arn = ...

# SSO
advanced_security_enabled = "true"
anonymous_auth_enabled = "false"
master_user_arn = "..."
saml_master_user_name = "..."
saml_master_backend_role = "..."
internal_user_database_enabled = "false"

## Okta Integration
saml_enabled = "true"
saml_entity_id = "http://www.okta.com/XXXX"
saml_metadata_content = file("./saml-metadata.xml")

# COGNITO
/*
cognito_options_enabled = "true"
cognito_user_pool_id = "us-west-2_xxxx"
cognito_identity_pool_id = "..."
cognito_role_arn = "XXX"
*/

- Install the fluent-operator, fluentbit and fluentd as instructed in the "How did you install fluent operator?" section below.

Expected behavior

- All fluent-operator, fluentbit and fluentd pods up and running.

- Fluentbit collecting logs and forwarding to fluentd.

- Fluentd shipping logs to a secured OpenSearch domain.

Your Environment

- Fluent Operator version: 2.0.1

- Container Runtime: Docker

- Operating system: Linux (Ubuntu)

- Kernel version: 5.4.0-135-generic

How did you install fluent operator?

I installed the operator via helm chart with fluentbit and fluentd disabled:

helm repo add fluent https://fluent.github.io/helm-charts
helm repo update
helm install fluent-operator fluent/fluent-operator --create-namespace -n fluent-system --version 2.0.2 --values values.yaml

My custom values.yaml had the following configuration:

containerRuntime: docker
Kubernetes: false
operator:
  initcontainer:
    repository: "docker"
    tag: "20.10"
  container:
    repository: "kubesphere/fluent-operator"
    tag: v2.0.1
  resources:
    limits:
      cpu: 100m
      memory: 60Mi
    requests:
      cpu: 100m
      memory: 20Mi
  logLevel: debug
fluentd:
  enable: false

I then applied the fluentbit and fluentd manifests manually:

apiVersion: fluentbit.fluent.io/v1alpha2
kind: FluentBit
metadata:
  labels:
    app.kubernetes.io/name: fluent-bit
  name: fluent-bit
  namespace: fluent-system
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: node-role.kubernetes.io/edge
            operator: DoesNotExist
  fluentBitConfigName: fluent-bit-config
  image: kubesphere/fluent-bit:v2.0.9
  positionDB:
    hostPath:
      path: /var/lib/fluent-bit/
  resources:
    limits:
      cpu: 500m
      memory: 200Mi
    requests:
      cpu: 10m
      memory: 25Mi
  tolerations:
  - operator: Exists
---
apiVersion: fluentbit.fluent.io/v1alpha2
kind: ClusterInput
metadata:
  labels:
    fluentbit.fluent.io/component: logging
    fluentbit.fluent.io/enabled: "true"
  name: docker
spec:
  systemd:
    db: /fluent-bit/tail/systemd.db
    dbSync: Normal
    path: /var/log/journal
    systemdFilter:
    - _SYSTEMD_UNIT=docker.service
    - _SYSTEMD_UNIT=kubelet.service
    tag: service.*
---
apiVersion: fluentbit.fluent.io/v1alpha2
kind: ClusterInput
metadata:
  labels:
    fluentbit.fluent.io/component: logging
    fluentbit.fluent.io/enabled: "true"
  name: tail
spec:
  tail:
    db: /fluent-bit/tail/pos.db
    dbSync: Normal
    memBufLimit: 5MB
    parser: docker
    path: /var/log/containers/*.log
    refreshIntervalSeconds: 10
    skipLongLines: true
    tag: kube.*
---
apiVersion: fluentbit.fluent.io/v1alpha2
kind: ClusterFluentBitConfig
metadata:
  labels:
    app.kubernetes.io/name: fluent-bit
  name: fluent-bit-config
spec:
  filterSelector:
    matchLabels:
      fluentbit.fluent.io/enabled: "true"
  inputSelector:
    matchLabels:
      fluentbit.fluent.io/enabled: "true"
  outputSelector:
    matchLabels:
      fluentbit.fluent.io/enabled: "true"
  service:
    parsersFile: parsers.conf
---
apiVersion: fluentbit.fluent.io/v1alpha2
kind: ClusterFilter
metadata:
  labels:
    fluentbit.fluent.io/component: logging
    fluentbit.fluent.io/enabled: "true"
  name: kubernetes
spec:
  filters:
  - kubernetes:
      annotations: true
      kubeCAFile: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      kubeTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubeURL: https://kubernetes.default.svc:443
      labels: true
  - nest:
      addPrefix: kubernetes_
      nestedUnder: kubernetes
      operation: lift
  - modify:
      rules:
      - remove: stream
      - remove: kubernetes_pod_id
      - remove: kubernetes_host
      - remove: kubernetes_container_hash
  - nest:
      nestUnder: kubernetes
      operation: nest
      removePrefix: kubernetes_
      wildcard:
      - kubernetes_*
  match: kube.*
---
apiVersion: fluentbit.fluent.io/v1alpha2
kind: ClusterOutput
metadata:
  labels:
    fluentbit.fluent.io/component: logging
    fluentbit.fluent.io/enabled: "true"
  name: fluentd
spec:
  forward:
    host: fluentd.fluent-system.svc
    port: 24224
  matchRegex: (?:kube|service)\.(.*)

apiVersion: fluentd.fluent.io/v1alpha1
kind: Fluentd
metadata:
  name: fluentd
  namespace: fluent-system
  labels:
    app.kubernetes.io/name: fluentd
spec:
  globalInputs:
  - forward:
      bind: 0.0.0.0
      port: 24224
  replicas: 1
  image: kubesphere/fluentd:v1.15.3
  resources:
    limits:
      cpu: 500m
      memory: 500Mi
    requests:
      cpu: 100m
      memory: 128Mi
  fluentdCfgSelector:
    matchLabels:
      config.fluentd.fluent.io/enabled: "true"
---
apiVersion: fluentd.fluent.io/v1alpha1
kind: ClusterFluentdConfig
metadata:
  labels:
    config.fluentd.fluent.io/enabled: "true"
  name: fluentd-config
spec:
  clusterFilterSelector:
    matchLabels:
      filter.fluentd.fluent.io/enabled: "true"
  clusterOutputSelector:
    matchLabels:
      output.fluentd.fluent.io/enabled: "true"
  watchedNamespaces: # find an easier way to do this or open an issue
  - kube-system
  - fluent-system
  - default
---
apiVersion: fluentd.fluent.io/v1alpha1
kind: ClusterOutput
metadata:
  labels:
    output.fluentd.fluent.io/enabled: "true"
  name: fluentd-output-opensearch
spec:
  outputs:
  - opensearch:
      host: vpc-XXX-us-XXX-XXXX-XXXX.us-XXX-XXX.es.amazonaws.com
      logstashFormat: true
      logstashPrefix: logs
      port: 443
      scheme: https
    logLevel: debug # change to info after OpenSearchErrorHandler is fixed